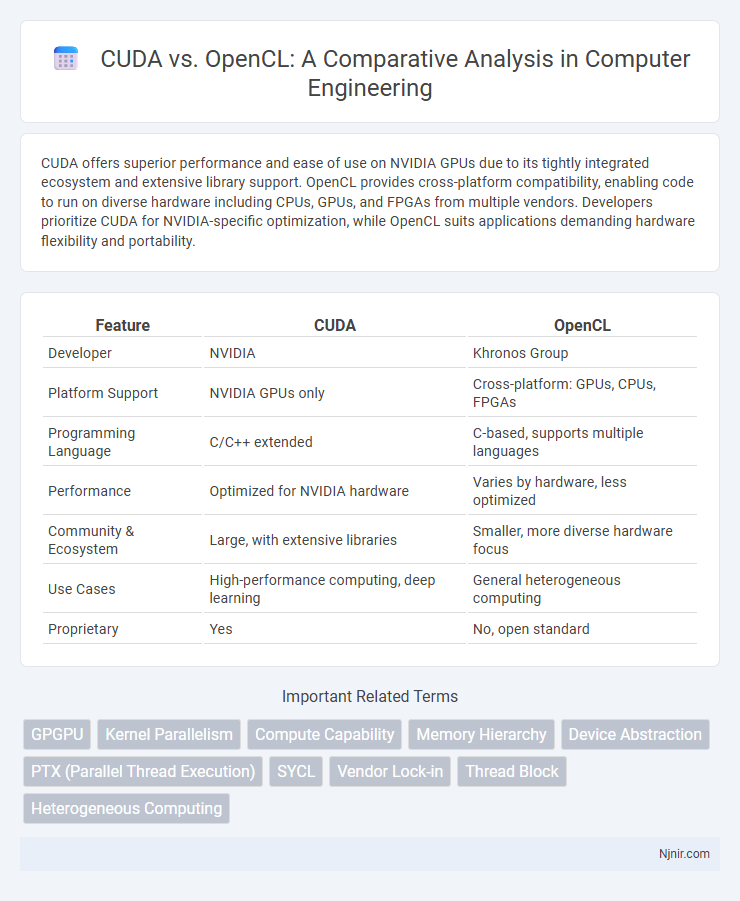

CUDA offers superior performance and ease of use on NVIDIA GPUs due to its tightly integrated ecosystem and extensive library support. OpenCL provides cross-platform compatibility, enabling code to run on diverse hardware including CPUs, GPUs, and FPGAs from multiple vendors. Developers prioritize CUDA for NVIDIA-specific optimization, while OpenCL suits applications demanding hardware flexibility and portability.

Table of Comparison

| Feature | CUDA | OpenCL |

|---|---|---|

| Developer | NVIDIA | Khronos Group |

| Platform Support | NVIDIA GPUs only | Cross-platform: GPUs, CPUs, FPGAs |

| Programming Language | C/C++ extended | C-based, supports multiple languages |

| Performance | Optimized for NVIDIA hardware | Varies by hardware, less optimized |

| Community & Ecosystem | Large, with extensive libraries | Smaller, more diverse hardware focus |

| Use Cases | High-performance computing, deep learning | General heterogeneous computing |

| Proprietary | Yes | No, open standard |

Introduction to Parallel Computing Frameworks

CUDA and OpenCL are leading parallel computing frameworks designed for accelerating computational tasks across GPUs and other processors. CUDA, developed by NVIDIA, provides a rich API tailored for NVIDIA GPUs, enabling efficient execution of parallel algorithms with extensive library support. OpenCL, maintained by the Khronos Group, offers a cross-platform, vendor-neutral programming model that supports heterogeneous computing across CPUs, GPUs, and other processors, facilitating broader hardware compatibility.

What is CUDA?

CUDA is a parallel computing platform and programming model developed by NVIDIA, designed to leverage the power of NVIDIA GPUs for general-purpose computing tasks. It enables developers to write software that executes multiple threads concurrently, drastically accelerating compute-intensive applications such as scientific simulations, machine learning, and video processing. CUDA provides a rich set of libraries, tools, and APIs optimized for NVIDIA hardware, resulting in superior performance compared to more hardware-agnostic solutions like OpenCL.

What is OpenCL?

OpenCL (Open Computing Language) is an open standard framework designed for writing programs that execute across heterogeneous platforms, including CPUs, GPUs, and other processors, enabling parallel computing. Unlike CUDA, which is proprietary and developed by NVIDIA exclusively for its GPUs, OpenCL supports a wide range of hardware vendors and architectures. This flexibility allows developers to write portable code that can run efficiently on multiple devices, promoting cross-platform compatibility in high-performance computing applications.

Architecture and Platform Support

CUDA is a parallel computing platform and programming model developed by NVIDIA, optimized for NVIDIA GPUs with a proprietary architecture that tightly integrates hardware and software for enhanced performance. OpenCL is an open standard for parallel programming across heterogeneous systems, supporting a wide range of devices including CPUs, GPUs, FPGAs, and DSPs from multiple vendors, offering broader platform compatibility. CUDA's architecture enables deep optimization for NVIDIA hardware, while OpenCL prioritizes cross-platform flexibility at the cost of some hardware-specific performance tuning.

Programming Model and Syntax Comparison

CUDA employs a C/C++-based programming model tailored for NVIDIA GPUs, leveraging a hierarchical thread structure with grids and blocks, enabling fine-grained parallelism management. OpenCL uses a more portable, platform-independent C-based kernel language with a less rigid thread hierarchy, supporting diverse hardware including CPUs, GPUs, and FPGAs through command queues and work-items. CUDA's syntax offers specialized memory spaces and built-in functions optimizing GPU-specific operations, while OpenCL requires explicit management of memory buffers and kernel execution, promoting flexibility across varying architectures.

Performance and Optimization

CUDA offers superior performance and optimization on NVIDIA GPUs due to its tailored architecture and extensive compiler optimizations, enabling direct access to hardware features like shared memory and warp-level primitives. OpenCL provides cross-platform compatibility across diverse hardware, but its performance often lags behind CUDA because of generalized drivers and less fine-tuned optimization. Developers targeting maximal computational efficiency on NVIDIA devices typically prefer CUDA for leveraging advanced parallel processing capabilities and mature optimization tools.

Hardware Compatibility

CUDA is designed specifically for NVIDIA GPUs, offering optimized performance and deep integration with NVIDIA hardware features, making it the preferred choice for applications targeting NVIDIA devices. OpenCL supports a wide range of hardware platforms including AMD GPUs, Intel CPUs, ARM processors, and FPGAs, providing greater flexibility across diverse computing environments. Hardware compatibility with OpenCL enables cross-vendor parallel programming, while CUDA remains limited to the NVIDIA ecosystem but delivers superior optimization for compatible hardware.

Development Tools and Ecosystem

CUDA offers a robust development environment with NVIDIA's comprehensive CUDA Toolkit, including the Nsight suite for debugging and profiling, extensive libraries like cuBLAS and cuDNN, and strong integration with popular IDEs such as Visual Studio and Eclipse. OpenCL presents a more platform-agnostic approach, supported by various vendors including AMD, Intel, and ARM, with tooling like CodeXL and Intel VTune, but its ecosystem tends to be less unified and mature compared to CUDA. Developers often find CUDA's specialized tools and optimized libraries more conducive to high-performance GPU programming, while OpenCL provides broader hardware compatibility at the expense of tooling consistency.

Adoption and Industry Use Cases

CUDA dominates the AI and deep learning sectors due to NVIDIA's GPU architecture and strong software ecosystem, with widespread adoption in autonomous vehicles, scientific simulations, and high-performance computing. OpenCL offers broader hardware compatibility, supporting CPUs, GPUs, and FPGAs from various vendors, making it popular in heterogeneous computing environments such as embedded systems and mobile devices. Industry leaders use CUDA for optimized AI workloads in data centers, while OpenCL serves cross-platform applications requiring flexibility across diverse hardware configurations.

Choosing Between CUDA and OpenCL

Choosing between CUDA and OpenCL depends on hardware compatibility and development goals; CUDA offers superior performance and tooling for NVIDIA GPUs, while OpenCL provides broader platform support across various devices including AMD and Intel GPUs. Developers targeting NVIDIA-exclusive environments benefit from CUDA's optimized libraries and extensive ecosystem, whereas OpenCL is ideal for cross-platform applications requiring flexibility. Performance benchmarks consistently show CUDA excels in NVIDIA hardware acceleration, but OpenCL remains preferable for heterogeneous computing environments.

GPGPU

CUDA offers higher performance and better developer support for GPGPU applications on NVIDIA GPUs, while OpenCL provides cross-platform compatibility across diverse hardware vendors.

Kernel Parallelism

CUDA offers superior kernel parallelism with optimized thread management on NVIDIA GPUs compared to OpenCL's broader, less specialized parallel execution model across diverse hardware platforms.

Compute Capability

CUDA offers higher compute capability and optimized hardware-level support on NVIDIA GPUs compared to the cross-platform but generally less performance-tuned OpenCL frameworks.

Memory Hierarchy

CUDA features a multi-level memory hierarchy including global, shared, and local memory optimized for NVIDIA GPUs, while OpenCL provides a more flexible but less specialized memory model supporting diverse hardware architectures.

Device Abstraction

CUDA provides a higher-level, NVIDIA-specific device abstraction optimized for NVIDIA GPUs, while OpenCL offers a more flexible, cross-vendor device abstraction supporting a wider range of heterogeneous devices including CPUs, GPUs, and FPGAs.

PTX (Parallel Thread Execution)

CUDA's PTX (Parallel Thread Execution) offers a low-level virtual machine and instruction set architecture specifically optimized for NVIDIA GPUs, providing finer control and better performance compared to OpenCL's more generic intermediate representations.

SYCL

SYCL enhances OpenCL by providing a higher-level, single-source C++ programming model that enables easier development of heterogeneous applications across CUDA and other platforms.

Vendor Lock-in

CUDA provides high-performance GPU computing primarily on NVIDIA hardware, resulting in vendor lock-in, whereas OpenCL supports cross-platform parallel programming across multiple vendors, reducing dependency on a single hardware provider.

Thread Block

CUDA achieves higher performance than OpenCL by efficiently managing thread blocks with fine-grained control over shared memory and synchronization on NVIDIA GPUs.

Heterogeneous Computing

CUDA delivers optimized performance on NVIDIA GPUs for heterogeneous computing, while OpenCL provides cross-platform support across diverse hardware including CPUs, GPUs, and FPGAs.

CUDA vs OpenCL Infographic

njnir.com

njnir.com