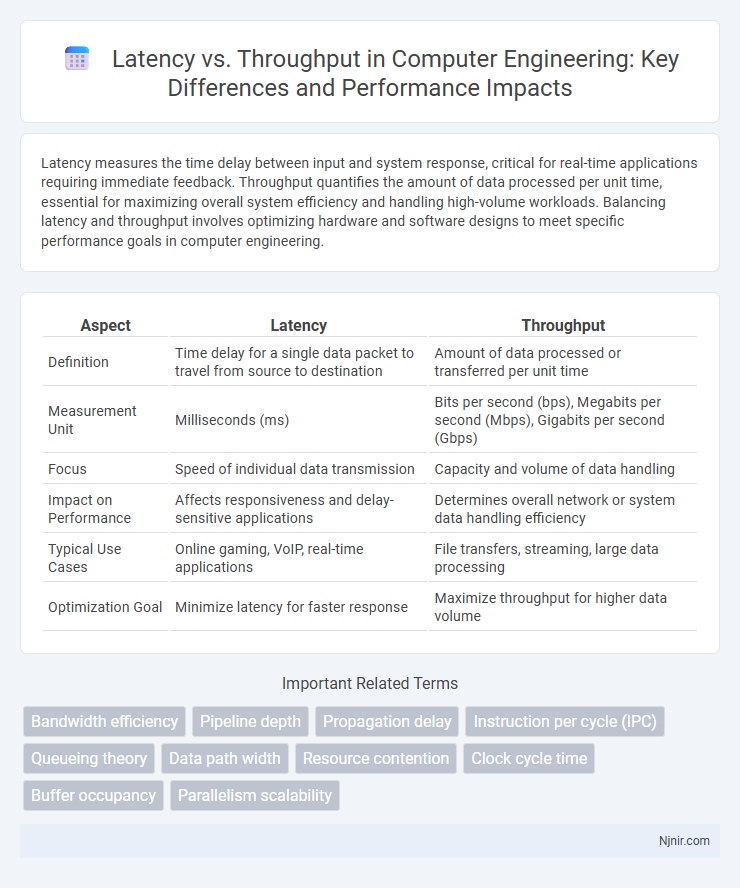

Latency measures the time delay between input and system response, critical for real-time applications requiring immediate feedback. Throughput quantifies the amount of data processed per unit time, essential for maximizing overall system efficiency and handling high-volume workloads. Balancing latency and throughput involves optimizing hardware and software designs to meet specific performance goals in computer engineering.

Table of Comparison

| Aspect | Latency | Throughput |
|---|---|---|
| Definition | Time for a single operation (such as a data packet or request) to complete, from start to finish | Amount of data or work processed or transferred per unit time |
| Typical Units | Milliseconds (ms), microseconds (µs), or nanoseconds (ns) | Bits per second (bps, Mbps, Gbps), or operations/requests per second |
| Focus | Speed of an individual transfer or operation | Capacity and volume of data handling |
| Impact on Performance | Affects responsiveness of delay-sensitive applications | Determines how much work a network or system can handle overall |
| Typical Use Cases | Online gaming, VoIP, other real-time applications | File transfers, streaming, large-scale data processing |
| Optimization Goal | Minimize latency for faster response | Maximize throughput for higher data volume |

Understanding Latency and Throughput in Computer Engineering

Latency measures the time delay between a request and its corresponding response in a computer system, critically impacting performance in real-time applications. Throughput quantifies the amount of data processed or transmitted over a network or system per unit time, reflecting overall system capacity. Balancing low latency and high throughput is essential for optimizing computer engineering designs, especially in network infrastructure and parallel processing environments.

Key Differences Between Latency and Throughput

Latency measures the time delay between a request and the corresponding response, often expressed in milliseconds. Throughput indicates the amount of data successfully transferred over a network or system per unit of time, typically measured in bits per second (bps) or packets per second. The key difference is that latency describes how long one operation takes, while throughput describes how much work completes per unit time; a system can have high throughput and high latency at once, as a high-bandwidth satellite link does.
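Both metrics can be measured from the same run. This is a minimal sketch that assumes a hypothetical `fake_request` function (a 10 ms sleep) standing in for real work; `measure` is likewise an illustrative helper, not a library API.

```python
import time

def measure(operation, n_requests):
    """Run `operation` n_requests times, one after another, and report the
    average per-request latency (s) and the overall throughput (requests/s)."""
    latencies = []
    start = time.perf_counter()
    for _ in range(n_requests):
        t0 = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - t0)  # duration of one request
    elapsed = time.perf_counter() - start
    avg_latency = sum(latencies) / len(latencies)
    throughput = n_requests / elapsed
    return avg_latency, throughput

def fake_request():
    # Hypothetical stand-in for real work (e.g. a network call): about 10 ms.
    time.sleep(0.01)

if __name__ == "__main__":
    lat, thr = measure(fake_request, 20)
    print(f"average latency: {lat * 1000:.1f} ms, throughput: {thr:.1f} requests/s")
```

Because the requests here run one at a time, throughput comes out close to 1 / latency; serving requests concurrently is what lets the two metrics diverge.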

The Role of Latency in System Performance

Latency directly impacts system performance by determining the time delay between a request and its corresponding response, which is critical in real-time applications such as online gaming and financial trading. Low latency ensures rapid data processing and minimal waiting, improving user experience and operational efficiency. High throughput alone cannot compensate for high latency: extra bandwidth does not shorten the round trip each interactive request must wait for, and protocols that pause for acknowledgments can keep only a limited amount of data in flight per round trip, so long delays cap the throughput they actually achieve.
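The acknowledgment limit can be made concrete with a small calculation. This sketch assumes the sender's window (for example, TCP's receive window) is the only constraint, ignoring congestion control, packet loss, and header overhead; both function names are hypothetical.

```python
def window_limited_throughput_bps(window_bytes, rtt_seconds):
    """Upper bound on throughput (bits/s) when at most `window_bytes`
    of unacknowledged data may be in flight per round trip."""
    return window_bytes * 8 / rtt_seconds

def bandwidth_delay_product_bytes(link_bps, rtt_seconds):
    """Bytes that must be in flight to keep a link of `link_bps` fully busy."""
    return link_bps * rtt_seconds / 8

# Assumed example: a 64 KiB window and a 100 ms round-trip time.
cap = window_limited_throughput_bps(65536, 0.100)
needed = bandwidth_delay_product_bytes(1_000_000_000, 0.100)
print(f"throughput cap: {cap / 1e6:.2f} Mbit/s, in-flight data for 1 Gbit/s: {needed / 1e6:.1f} MB")
```

A 64 KiB window over a 100 ms round trip caps throughput at about 5.2 Mbit/s even on a gigabit link; keeping a 1 Gbit/s link busy at that delay would require about 12.5 MB in flight.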

Throughput: Measuring System Capacity

Throughput measures the number of tasks or data units a system completes within a given time frame; the highest throughput it can sustain under full load reflects its overall capacity. High throughput indicates effective resource utilization and parallel processing, which are essential for handling large workloads in networking, databases, and computing systems. Monitoring throughput helps identify performance bottlenecks and tune system configurations so that operation stays balanced and scalable under varying load.

Latency vs Throughput: Real-World Examples

Latency and throughput measure different aspects of network performance, where latency is the delay before a transfer begins, and throughput is the amount of data transferred over time. In real-world examples, online gaming requires low latency to ensure immediate response, while video streaming prioritizes high throughput to maintain smooth playback. Data centers often optimize for both by reducing latency with faster hardware and increasing throughput through bandwidth enhancements.
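A simple model makes the gaming-versus-streaming contrast concrete: total transfer time is a fixed latency plus the data size divided by throughput. The 50 ms latency and 100 Mbit/s throughput below are assumed values, and `transfer_time_s` is a hypothetical helper.

```python
def transfer_time_s(size_bytes, latency_s, throughput_bps):
    """Simple model: fixed start-up latency plus time to push the data."""
    return latency_s + size_bytes * 8 / throughput_bps

LATENCY_S = 0.050        # assumed 50 ms delay before data starts arriving
THROUGHPUT_BPS = 100e6   # assumed 100 Mbit/s sustained throughput

small = transfer_time_s(100, LATENCY_S, THROUGHPUT_BPS)   # a 100-byte game update
large = transfer_time_s(1e9, LATENCY_S, THROUGHPUT_BPS)   # a 1 GB video file
print(f"game update: {small * 1000:.3f} ms, video file: {large:.2f} s")
```

The 100-byte update takes about 50.008 ms, almost all of it latency, while the 1 GB file takes about 80 s, almost all of it spent at the throughput limit.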

Factors Affecting Latency and Throughput

Latency and throughput are influenced by several key factors, including network bandwidth, data packet size, and routing efficiency. Higher bandwidth raises the ceiling on throughput by allowing more bits to be transmitted per second, whereas latency is primarily affected by propagation delay, transmission time, processing time, and queuing delay caused by congestion. Optimizing hardware performance and reducing protocol overhead also play critical roles in minimizing latency and maximizing throughput in data communication systems.
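A common textbook way to see these factors is to split one hop's delay into processing, queuing, transmission, and propagation components. This is a minimal sketch under assumed values: `hop_delay_s` is a hypothetical helper, and its default signal speed of 2×10^8 m/s approximates light in optical fiber.

```python
def hop_delay_s(packet_bytes, link_bps, distance_m, processing_s, queueing_s,
                signal_speed_mps=2e8):
    """One-hop delay as the sum of four components (all in seconds).
    signal_speed_mps defaults to ~2e8 m/s, an approximation for fiber."""
    transmission = packet_bytes * 8 / link_bps     # time to put the bits on the link
    propagation = distance_m / signal_speed_mps    # time for the signal to cross the distance
    return processing_s + queueing_s + transmission + propagation

# Assumed scenario: 1,500-byte packet, 1,000 km path, 50 µs processing, 200 µs queuing.
base = hop_delay_s(1500, 1e9, 1_000_000, processing_s=50e-6, queueing_s=200e-6)
faster = hop_delay_s(1500, 10e9, 1_000_000, processing_s=50e-6, queueing_s=200e-6)
print(f"1 Gbit/s: {base * 1000:.4f} ms, 10 Gbit/s: {faster * 1000:.4f} ms")
```

Raising the link from 1 Gbit/s to 10 Gbit/s only trims the transmission term from 12 µs to 1.2 µs; the 5 ms of propagation delay dominates, which is why more bandwidth does little for latency on long paths.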

Optimizing Systems for Low Latency

Optimizing systems for low latency involves minimizing the time delay between input and response, which is crucial for real-time applications such as gaming, financial trading, and autonomous vehicles. Techniques include reducing network hops, using faster data serialization, adopting event-driven architectures, and offloading work to hardware accelerators such as GPUs or FPGAs. Prioritizing low latency often requires balancing throughput demands to avoid bottlenecks while ensuring rapid, efficient data processing.

Strategies to Maximize Throughput

Maximizing throughput requires optimizing data flow to reduce bottlenecks and improve resource utilization, such as implementing efficient load balancing and parallel processing techniques. Leveraging high-capacity network infrastructure combined with effective caching strategies helps maintain sustained data transfer rates. Employing scalable hardware and software architectures that support concurrent processing ensures that systems can handle increased workloads without sacrificing performance.
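Parallel processing raises throughput without making any single task faster. The sketch below assumes I/O-bound tasks (simulated with `time.sleep`) so that Python threads can overlap them; `io_task` and the worker count of 8 are hypothetical choices for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def io_task(_):
    # Hypothetical I/O-bound task (e.g. a network call) simulated with a 50 ms sleep.
    time.sleep(0.05)

def run_sequential(n):
    start = time.perf_counter()
    for i in range(n):
        io_task(i)
    return time.perf_counter() - start

def run_parallel(n, workers):
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(io_task, range(n)))  # consume results so we wait for every task
    return time.perf_counter() - start

if __name__ == "__main__":
    n = 16
    seq, par = run_sequential(n), run_parallel(n, workers=8)
    print(f"sequential: {n / seq:.0f} tasks/s, 8 workers: {n / par:.0f} tasks/s")
```

Each task still takes about 50 ms in both runs, so per-task latency is unchanged; only the number of tasks completed per second goes up.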

Latency and Throughput Trade-offs in Architecture Design

Latency and throughput represent critical performance metrics in architecture design, where reducing latency often requires sacrificing throughput and vice versa. Low-latency systems prioritize rapid response times by minimizing delays, often through lightweight protocols or caching mechanisms, while high-throughput architectures focus on maximizing data processing volumes by employing parallelism and batch processing techniques. Balancing these trade-offs involves optimizing hardware resources, network configurations, and software algorithms to align system capabilities with specific application requirements.
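Batching is a common source of this trade-off. The toy model below is an assumption for illustration, not a standard formula: each batch pays a fixed overhead plus a per-item cost, items arrive at a steady rate, and extra queuing when batches back up is ignored. All names and parameter values are hypothetical.

```python
def batch_metrics(batch_size, per_batch_overhead_s, per_item_s, arrival_rate_per_s):
    """Toy batching model: returns (throughput in items/s while busy,
    worst-case latency in seconds for the first item of a batch)."""
    service = per_batch_overhead_s + batch_size * per_item_s
    throughput = batch_size / service
    # The first item waits for the rest of the batch to arrive, then for processing.
    fill_wait = (batch_size - 1) / arrival_rate_per_s
    worst_latency = fill_wait + service
    return throughput, worst_latency

for b in (1, 10, 100):
    thr, lat = batch_metrics(b, per_batch_overhead_s=0.010, per_item_s=0.0005,
                             arrival_rate_per_s=1000)
    print(f"batch={b:3d}: {thr:7.1f} items/s, worst-case latency {lat * 1000:6.1f} ms")
```

Larger batches spread the fixed overhead over more items, so throughput climbs, but each item waits longer for its batch to fill and finish.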

Balancing Latency and Throughput for Performance Optimization

Balancing latency and throughput is crucial for optimizing system performance, as low latency ensures quick response times while high throughput maximizes data processing capacity. Techniques such as load balancing, efficient queuing mechanisms, and parallel processing help achieve an optimal trade-off between these metrics. Monitoring tools and adaptive algorithms dynamically adjust resource allocation to maintain this balance under varying workloads.

Bandwidth efficiency

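Bandwidth efficiency describes how much of a link's raw capacity carries useful data. Protocol headers, retransmissions, and idle time spent waiting on round trips all reduce it, so maximizing bandwidth efficiency means keeping throughput high without adding latency.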

Pipeline depth

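Increasing pipeline depth raises throughput, because more instructions overlap and each stage can run at a shorter clock cycle, but it does not reduce the latency of an individual instruction, which stays the same or grows slightly with the added per-stage register overhead. Deeper pipelines also pay larger penalties for hazards, stalls, and branch mispredictions, which limits the throughput gain in practice.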
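The effect of pipeline depth can be sketched with an idealized model that ignores hazards: the logic delay is split evenly across stages, and each stage adds a fixed register (latch) overhead. The 10 ns logic delay and 0.1 ns overhead are assumed values, and `pipeline_metrics` is a hypothetical helper.

```python
def pipeline_metrics(logic_delay_ns, stages, latch_overhead_ns):
    """Ideal pipeline (no hazards or stalls). Returns
    (per-instruction latency in ns, throughput in instructions per microsecond)."""
    cycle_ns = logic_delay_ns / stages + latch_overhead_ns
    latency_ns = stages * cycle_ns              # one instruction passes through every stage
    throughput_per_us = 1000 / cycle_ns         # one instruction completes per cycle
    return latency_ns, throughput_per_us

for k in (1, 5, 10, 20):
    lat, thr = pipeline_metrics(logic_delay_ns=10.0, stages=k, latch_overhead_ns=0.1)
    print(f"{k:2d} stages: latency {lat:5.2f} ns, throughput {thr:7.1f} instr/µs")
```

In this model, going from 1 to 20 stages raises throughput from about 99 to about 1,667 instructions per microsecond, while per-instruction latency grows from 10.1 ns to 12 ns.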

Propagation delay

Propagation delay is the time a signal takes to travel from sender to receiver, set by the distance and the signal speed in the medium. It adds directly to latency and does not shrink when link bandwidth increases, so it affects network performance independently of throughput.
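As a worked example, assume a signal speed of roughly $2 \times 10^8$ m/s, a common approximation for light in optical fiber, and a hypothetical 4,000 km fiber path:

$$
t_{\text{prop}} = \frac{d}{v} = \frac{4 \times 10^{6}\ \text{m}}{2 \times 10^{8}\ \text{m/s}} = 0.02\ \text{s} = 20\ \text{ms}
$$

A round trip over the same path therefore costs at least 40 ms, whatever the link's bandwidth.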

Instructions per cycle (IPC)

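Higher instructions per cycle (IPC) improves throughput by completing more instructions each clock cycle, even though an individual instruction may still take several cycles from start to finish. Processors typically raise IPC by overlapping many instructions rather than by shortening each one, which is why throughput and per-instruction latency can move independently in processor performance.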
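The classic CPU performance equation (often called the iron law of processor performance) shows how IPC and clock rate combine: execution time equals instruction count divided by IPC times clock frequency. The instruction count and 3 GHz clock below are assumed values, and `cpu_time_s` is a hypothetical helper.

```python
def cpu_time_s(instruction_count, ipc, clock_hz):
    """Execution time = instructions / (IPC * clock frequency)."""
    return instruction_count / (ipc * clock_hz)

# Assumed workload: one billion instructions on a 3 GHz processor.
for ipc in (0.5, 1, 2):
    print(f"IPC {ipc}: {cpu_time_s(1e9, ipc, 3e9):.3f} s")
```

Doubling IPC halves the run time of the whole program, without saying anything about how many cycles any single instruction takes.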

Queueing theory

Queueing theory models latency as the average time a request spends in the system (waiting plus service) and throughput as the rate of completed tasks. It highlights a key trade-off: as throughput approaches the system's capacity, latency rises sharply because of queuing delay.
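The simplest queueing model, the M/M/1 queue (Poisson arrivals, exponentially distributed service times, a single server), gives a closed form for this trade-off: mean time in system is 1 / (mu - lambda), where lambda is the arrival rate and mu the service rate. The service rate of 100 requests/s below is an assumed value.

```python
def mm1_metrics(arrival_rate, service_rate):
    """M/M/1 queue. Returns (utilization, mean time in system), with time
    in the reciprocal of the rates' unit (e.g. seconds for requests/s)."""
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival rate must be below service rate")
    utilization = arrival_rate / service_rate
    mean_latency = 1 / (service_rate - arrival_rate)  # waiting time plus service time
    return utilization, mean_latency

for lam in (50, 80, 90, 99):
    rho, w = mm1_metrics(lam, service_rate=100)
    print(f"throughput {lam} req/s (utilization {rho:.0%}): mean latency {w * 1000:.0f} ms")
```

In a stable queue, throughput equals the arrival rate, so pushing throughput from 50 to 99 requests/s raises mean latency from 20 ms to 1,000 ms.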

Data path width

Increasing data path width lets more bits move per cycle, which raises throughput and also shortens the latency of transfers larger than one word, since fewer cycles are needed to move them.
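The cycle count for a transfer scales inversely with width. This sketch ignores setup and handshake cycles; the 512-bit payload (the size of a typical 64-byte cache line) and the widths are assumed for illustration, and `transfer_cycles` is a hypothetical helper.

```python
import math

def transfer_cycles(payload_bits, datapath_width_bits):
    """Cycles needed to move a payload when the datapath carries
    `datapath_width_bits` per cycle (setup/handshake cycles ignored)."""
    return math.ceil(payload_bits / datapath_width_bits)

# Assumed example: moving a 512-bit cache line over 32-, 64-, and 128-bit datapaths.
for width in (32, 64, 128):
    print(f"{width:3d}-bit datapath: {transfer_cycles(512, width)} cycles")
```

Quadrupling the width from 32 to 128 bits cuts the transfer from 16 cycles to 4, which lowers that transfer's latency and raises the bits moved per cycle.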

Resource contention

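Resource contention occurs when multiple tasks compete for the same shared resource, such as a bus, cache, lock, or network link. It increases latency because tasks must wait their turn, and it reduces throughput because work serializes on the contended resource.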

Clock cycle time

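A shorter clock cycle time lets an operation that takes a fixed number of cycles finish sooner (lower latency) and lets the processor start new operations more often (higher throughput). Beyond the clock rate, throughput also depends on how many operations execute in parallel within each cycle.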

Buffer occupancy

High buffer occupancy increases latency, since each new item waits behind those already queued, but it can sustain throughput by keeping downstream stages supplied with data and absorbing bursts.
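Little's law (L = lambda × W) connects the three quantities: average occupancy equals throughput times average latency. Rearranged, the delay a buffer adds is its occupancy divided by the rate at which it drains. The packet counts and rates below are assumed values, and `littles_law_latency_s` is a hypothetical helper.

```python
def littles_law_latency_s(items_in_system, throughput_per_s):
    """Little's law L = lambda * W, rearranged to W = L / lambda."""
    return items_in_system / throughput_per_s

# Assumed scenario: a buffer drained by a link at 10,000 packets/s.
for occupancy in (500, 1000):
    delay = littles_law_latency_s(occupancy, 10_000)
    print(f"{occupancy} packets buffered: {delay * 1000:.0f} ms of queuing delay")
```

Doubling the occupancy from 500 to 1,000 packets doubles the delay from 50 ms to 100 ms while the drain rate, and therefore the throughput, stays the same.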

Parallelism scalability

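Parallelism scalability describes how much throughput grows as more processors or workers are added. Executing tasks concurrently raises throughput, but the gain is limited by the portion of work that must run serially and by synchronization and resource-allocation overhead.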
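One standard way to quantify the serial-work limit is Amdahl's law: if a fraction p of a fixed workload can be parallelized across n workers, speedup is 1 / ((1 - p) + p / n). The 90% parallel fraction below is an assumed value, and the model ignores synchronization overhead.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Amdahl's law: speedup of a fixed workload when only
    `parallel_fraction` of it can be spread across `workers`."""
    serial_fraction = 1 - parallel_fraction
    return 1 / (serial_fraction + parallel_fraction / workers)

# Assumed workload: 90% parallelizable.
for n in (1, 4, 16, 64):
    print(f"{n:2d} workers: speedup {amdahl_speedup(0.9, n):.2f}")
```

With p = 0.9, speedup approaches but never exceeds 10, no matter how many workers are added.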

njnir.com