In-memory computing reduces latency by performing data processing directly within, or close to, memory, avoiding the data-transfer bottlenecks of traditional computing architectures. This approach can substantially improve processing speed and energy efficiency, particularly for data-intensive tasks such as artificial intelligence and real-time analytics. Traditional computing depends on constant communication between the CPU and memory, which limits performance because moving data is slow and consumes power.

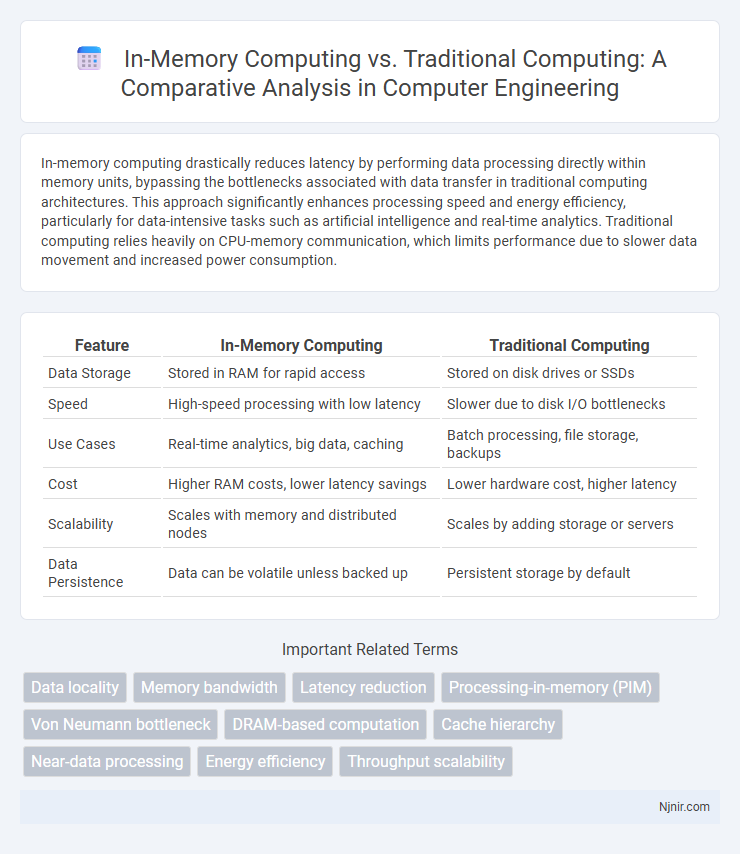

Comparison Table

| Feature | In-Memory Computing | Traditional Computing |
|---|---|---|
| Data storage | Held in RAM for rapid access | Held on hard drives or SSDs |
| Speed | High-speed processing with low latency | Slower due to disk I/O bottlenecks |
| Use cases | Real-time analytics, big data, caching | Batch processing, file storage, backups |
| Cost | Higher RAM cost, offset by lower latency | Lower hardware cost, but higher latency |
| Scalability | Scales with added memory and distributed nodes | Scales by adding storage or servers |
| Data persistence | Volatile unless snapshotted, logged, or replicated | Persistent by default |

Introduction to In-Memory Computing and Traditional Computing

In-memory computing changes how data is processed by keeping the working dataset in RAM, enabling much faster access and real-time analytics than traditional computing, which relies on slower disk-based storage. Traditional systems read data from hard drives or SSDs into memory as it is needed, so frequent read/write operations add latency and disk I/O becomes a bottleneck. By serving data from high-speed memory, in-memory computing sharply reduces response times and improves performance for big data and other demanding applications.

Architecture Overview: In-Memory vs. Traditional Computing

In an in-memory architecture, data resides primarily in RAM, so it can be accessed and processed with far lower latency than in disk-based storage systems. Traditional architectures rely on a storage hierarchy in which data must be retrieved from hard drives or SSDs, which creates bottlenecks for data-intensive applications. The shift to in-memory computing benefits real-time analytics and high-speed transaction processing because it takes the input/output constraints of traditional storage off the critical path.

Data Storage and Access Mechanisms

In-memory computing stores data in the system's RAM, enabling very fast data access and real-time processing by avoiding the disk I/O latency common in traditional computing. Traditional computing relies on persistent storage devices such as hard drives or SSDs, whose data retrieval is slower because of mechanical seek times (HDDs) or flash read/write and interface overheads (SSDs). Moving to RAM-based storage improves both throughput and latency, which makes in-memory computing well suited to big data analytics and high-frequency transaction processing.
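A minimal Python sketch can contrast the two access paths behind a common interface. The class names `InMemoryStore` and `DiskStore` are hypothetical; `DiskStore` uses the standard-library `shelve` module, which pickles each value into a dbm file, so every write pays serialization and file I/O costs while the in-memory version is a plain dictionary lookup. For small datasets the operating system's page cache may hide part of the disk latency.

```python
import os
import shelve
import tempfile


class InMemoryStore:
    """Data lives in a Python dict, i.e. in process RAM."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


class DiskStore:
    """Data lives in a dbm file on disk; values are pickled by shelve."""

    def __init__(self, path):
        self._db = shelve.open(path)

    def put(self, key, value):
        self._db[key] = value
        self._db.sync()  # push the write through to the underlying file

    def get(self, key):
        return self._db.get(key)

    def close(self):
        self._db.close()


if __name__ == "__main__":
    mem = InMemoryStore()
    disk = DiskStore(os.path.join(tempfile.mkdtemp(), "store"))
    for store in (mem, disk):
        store.put("user:42", {"name": "Ada", "balance": 100})
    print(mem.get("user:42") == disk.get("user:42"))  # True
    disk.close()
```

Both stores return the same data; the difference lies only in where it lives and what each access costs.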

Performance and Latency Comparison

In-memory computing reduces latency by keeping data in RAM, enabling faster access than traditional computing, which relies on slower disk-based storage. Because disk input/output bottlenecks are largely removed, in-memory systems can process transactions and queries much faster, by up to 100 times for some workloads, although actual gains depend on the workload and on how much data a traditional system already caches in memory. This optimized retrieval and processing makes in-memory computing well suited to real-time analytics and high-throughput environments where low latency is critical.
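Python's built-in `sqlite3` module offers a quick way to feel the difference, because it can open a database either in a file or entirely in RAM via the special name `":memory:"`. The sketch below times many small committed transactions; on a file database each commit typically forces journal writes to storage, while the in-memory database has no disk to wait on. The helper names and row count are illustrative assumptions, and measured ratios vary widely with hardware and file system, so this is not a reproduction of the figure above.

```python
import os
import sqlite3
import tempfile
import time


def open_db(path):
    """Open a SQLite database at `path`; ":memory:" keeps it entirely in RAM."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, amount REAL)"
    )
    return conn


def insert_with_commits(conn, n):
    """Insert n rows, committing after each one like a small OLTP transaction.

    Returns the elapsed wall-clock time in seconds.
    """
    start = time.perf_counter()
    for i in range(n):
        conn.execute("INSERT INTO events (amount) VALUES (?)", (i * 0.5,))
        conn.commit()  # on a file database this waits for the journal to reach disk
    return time.perf_counter() - start


def total_amount(conn):
    return conn.execute("SELECT SUM(amount) FROM events").fetchone()[0]


if __name__ == "__main__":
    disk_path = os.path.join(tempfile.mkdtemp(), "events.db")
    for label, path in (("in-memory", ":memory:"), ("on-disk", disk_path)):
        conn = open_db(path)
        secs = insert_with_commits(conn, 200)
        print(f"{label}: {secs:.4f}s, sum={total_amount(conn)}")
        conn.close()
```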

Scalability and Resource Utilization

In-memory computing offers strong scalability for real-time analytics on large datasets: data is processed directly in RAM, and distributed in-memory platforms grow by adding nodes, although total capacity is bounded by the RAM available across the cluster. Traditional computing relies on disk-based storage, so data retrieval is slower and I/O bottlenecks increasingly limit scalability as data volumes and request rates grow. In-memory systems also use resources more efficiently because they minimize data movement and keep processors supplied with data, whereas in traditional architectures processors often sit idle waiting on I/O, wasting processing capacity and energy.
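To illustrate scale-out, here is a toy partitioned store in which each "node" is just a dict in one process. The names `PartitionedStore` and `node_for` are hypothetical. Keys are placed with a stable MD5-based hash instead of Python's built-in `hash()`, because string hashing is randomized per process and every node in a real cluster must agree on where a key lives; MD5 is used only to spread keys, not for security.

```python
import hashlib


def node_for(key, num_nodes):
    """Map a key to a node index using a hash that is stable across processes."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


class PartitionedStore:
    """Toy distributed in-memory store: each node is a separate dict."""

    def __init__(self, num_nodes):
        self.nodes = [dict() for _ in range(num_nodes)]

    def put(self, key, value):
        self.nodes[node_for(key, len(self.nodes))][key] = value

    def get(self, key):
        return self.nodes[node_for(key, len(self.nodes))].get(key)

    def sizes(self):
        """Number of keys held by each node."""
        return [len(n) for n in self.nodes]


if __name__ == "__main__":
    store = PartitionedStore(num_nodes=4)
    for i in range(10_000):
        store.put(f"order:{i}", i)
    print(store.sizes())  # roughly 2,500 keys per node
```

Simple modulo placement remaps most keys whenever the node count changes; production data grids generally use consistent or rendezvous hashing to limit that reshuffling.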

Energy Efficiency and Power Consumption

In-memory computing significantly reduces energy consumption by processing data directly within memory arrays, minimizing data movement and its associated power cost compared with traditional architectures built on separate memory and processing units. Traditional systems consume more power because data must be transferred repeatedly between the CPU and memory, which also adds latency. Beyond lowering operational costs, this efficiency improves sustainability in large-scale data centers and edge devices.
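A back-of-the-envelope energy model makes the data-movement argument concrete. The per-byte and per-operation costs below are hypothetical placeholders, not measurements, and the model ignores static power and memory refresh; it only shows how the totals change once the input no longer has to cross the memory bus.

```python
from dataclasses import dataclass


@dataclass
class EnergyModel:
    # All costs are HYPOTHETICAL placeholders in picojoules; substitute measured values.
    transfer_pj_per_byte: float  # moving one byte between memory and the CPU
    cpu_op_pj: float             # one arithmetic operation on the CPU
    pim_op_pj: float             # one arithmetic operation inside the memory device

    def traditional(self, input_bytes, ops):
        # Every input byte crosses the memory bus before the CPU computes on it.
        return input_bytes * self.transfer_pj_per_byte + ops * self.cpu_op_pj

    def in_memory(self, ops, result_bytes):
        # Computation happens in memory; only the result crosses the bus.
        return ops * self.pim_op_pj + result_bytes * self.transfer_pj_per_byte


if __name__ == "__main__":
    model = EnergyModel(transfer_pj_per_byte=10.0, cpu_op_pj=1.0, pim_op_pj=2.0)
    # Summing one million 8-byte values, treated as about one million additions.
    print(model.traditional(input_bytes=8_000_000, ops=1_000_000))  # 81000000.0
    print(model.in_memory(ops=1_000_000, result_bytes=8))           # 2000080.0
```

The placeholder in-memory operation is deliberately priced higher than a CPU operation to show that, under these assumptions, the transfer term still dominates the total.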

Application Suitability and Use Cases

In-memory computing excels in real-time data processing applications such as financial trading, fraud detection, and personalized customer experiences where rapid analytics and low latency are critical. Traditional computing remains suitable for batch processing, large-scale data storage, and applications requiring extensive historical data analysis with lower time sensitivity. Enterprises choose in-memory solutions to support AI, IoT, and big data use cases demanding immediate insights, while traditional architectures support ERP, billing systems, and archival data management.
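A velocity check is one simple form of the real-time fraud detection mentioned above. The sketch below, using the hypothetical class name `VelocityCheck`, keeps a sliding window of recent timestamps per card in memory, so each decision costs a few dictionary and deque operations rather than a database round trip. It assumes timestamps for a given card arrive in non-decreasing order.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flag a card that makes more than `max_txns` transactions within `window_s` seconds."""

    def __init__(self, max_txns, window_s):
        self.max_txns = max_txns
        self.window_s = window_s
        self.recent = defaultdict(deque)  # card_id -> timestamps inside the window

    def observe(self, card_id, ts):
        """Record a transaction and return True if the card now looks suspicious."""
        q = self.recent[card_id]
        q.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while q and q[0] <= ts - self.window_s:
            q.popleft()
        return len(q) > self.max_txns


if __name__ == "__main__":
    check = VelocityCheck(max_txns=3, window_s=60)
    print([check.observe("card-1", t) for t in (0, 10, 20, 30)])
    # [False, False, False, True]
```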

Implementation Challenges and Considerations

In-memory computing requires substantial investment in RAM and sometimes specialized hardware, and because RAM costs far more per gigabyte than disk, scaling to large datasets is more expensive than with traditional disk-based storage. Implementation often requires redesigning the data architecture around real-time analytics and low latency, in contrast to conventional batch-processing workflows. Security measures must also be adapted to protect data held in volatile memory, and integrating in-memory platforms with legacy systems often raises compatibility issues.
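Capacity planning is where the RAM cost shows up. The hypothetical helper below estimates how many servers an in-memory cluster needs; the default replication factor, overhead multiplier (for indexes, object headers, and fragmentation), and usable-RAM fraction are assumptions to replace with figures from your own platform.

```python
import math


def nodes_needed(dataset_gb, node_ram_gb, replication=2, overhead=1.5, usable_fraction=0.7):
    """Estimate how many servers an in-memory cluster needs to hold a dataset.

    dataset_gb       raw data size
    node_ram_gb      RAM installed per server
    replication      copies kept for fault tolerance (assumed default)
    overhead         multiplier for indexes, headers, fragmentation (assumed default)
    usable_fraction  share of each node's RAM left for data after OS/runtime (assumed)
    """
    required_gb = dataset_gb * replication * overhead
    usable_per_node_gb = node_ram_gb * usable_fraction
    return math.ceil(required_gb / usable_per_node_gb)


if __name__ == "__main__":
    # 2 TB of raw data on servers with 512 GB of RAM each.
    print(nodes_needed(2000, 512))  # 17
```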

Security and Data Integrity in Both Paradigms

In-memory computing can improve security by minimizing data movement, which reduces opportunities to intercept data in transit, whereas traditional computing keeps more data at rest on disk-based storage that can be breached. In-memory systems can also support data integrity through real-time processing and continuous validation, while in traditional systems the delays of disk-based transfers can widen the windows in which data becomes inconsistent. Both paradigms require robust encryption and authentication protocols, but because in-memory data is volatile, in-memory systems also need recovery mechanisms, such as logging, snapshots, or replication, to prevent data loss from power failures or system crashes.
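One common recovery mechanism is an append-only log: each write is made durable on disk before it is applied in memory, and the in-memory state is rebuilt by replaying the log at startup. The sketch below uses hypothetical names (`LoggedStore`, one JSON record per line) and is only an illustration of the idea; production systems also take snapshots so the log does not grow without bound.

```python
import json
import os
import tempfile


class LoggedStore:
    """In-memory dict made recoverable by an append-only log on disk."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()  # rebuild in-memory state from earlier runs
        self._log = open(log_path, "a", encoding="utf-8")

    def _replay(self):
        if not os.path.exists(self.log_path):
            return
        good_end = 0
        with open(self.log_path, "rb") as f:
            for line in f:
                if not line.endswith(b"\n"):
                    break  # torn final record from a crash: ignore it
                rec = json.loads(line)
                good_end += len(line)
                if rec["op"] == "put":
                    self.data[rec["key"]] = rec["value"]
                else:  # "delete"
                    self.data.pop(rec["key"], None)
        os.truncate(self.log_path, good_end)  # drop any torn tail before appending

    def _append(self, rec):
        self._log.write(json.dumps(rec) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())  # durable on disk before the write is acknowledged

    def put(self, key, value):
        self._append({"op": "put", "key": key, "value": value})
        self.data[key] = value

    def delete(self, key):
        self._append({"op": "delete", "key": key})
        self.data.pop(key, None)

    def close(self):
        self._log.close()


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "store.log")
    s = LoggedStore(path)
    s.put("a", 1)
    s.put("b", 2)
    s.delete("a")
    s.close()  # simulate a restart: all in-memory state is discarded
    restored = LoggedStore(path)
    print(restored.data)  # {'b': 2}
    restored.close()
```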

Future Trends and Industry Adoption

In-memory computing uses RAM to process data at very high speed, driving innovation in real-time analytics, AI, and IoT applications, in contrast to traditional computing's disk-based data management. Future trends point toward hybrid architectures that combine in-memory and persistent storage for scalability and cost efficiency, an approach attracting sectors such as finance, healthcare, and telecommunications. Enterprises are investing increasingly in in-memory platforms such as SAP HANA and Apache Ignite, reflecting their impact on big data processing and the speed of decision-making.
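Hybrid designs often keep hot data in a bounded memory tier and all data in a persistent tier. This sketch, with the hypothetical name `TieredStore`, uses an `OrderedDict` as a least-recently-used memory tier with write-through to a cold tier. A plain dict stands in for the disk or SSD store, so it demonstrates the placement policy, not real I/O; for simplicity it cannot store `None` as a value.

```python
from collections import OrderedDict


class TieredStore:
    """Bounded in-memory LRU tier in front of a persistent cold tier."""

    def __init__(self, hot_capacity, cold):
        self.hot = OrderedDict()
        self.hot_capacity = hot_capacity
        self.cold = cold  # any dict-like store; stands in for disk/SSD here
        self.hits = 0
        self.misses = 0

    def put(self, key, value):
        self.cold[key] = value  # write-through keeps the persistent tier complete
        self._promote(key, value)

    def get(self, key):
        if key in self.hot:
            self.hits += 1
            self.hot.move_to_end(key)  # mark as most recently used
            return self.hot[key]
        self.misses += 1
        value = self.cold.get(key)
        if value is not None:
            self._promote(key, value)
        return value

    def _promote(self, key, value):
        self.hot[key] = value
        self.hot.move_to_end(key)
        if len(self.hot) > self.hot_capacity:
            self.hot.popitem(last=False)  # evict the least recently used key


if __name__ == "__main__":
    store = TieredStore(hot_capacity=2, cold={})
    for k, v in (("a", 1), ("b", 2), ("c", 3)):
        store.put(k, v)
    print(store.get("a"), list(store.hot))  # 1 ['c', 'a']
```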

Data locality

In-memory computing enhances data locality by storing and processing data directly in RAM, significantly reducing latency compared to traditional computing architectures that rely on slower, disk-based data access.

Memory bandwidth

In-memory computing makes better use of memory bandwidth by processing data within or close to RAM, which reduces latency and increases throughput compared with traditional architectures that must move data across the comparatively narrow channel between memory and the CPU.
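Bandwidth puts a floor under scan time: one full pass over a dataset cannot finish faster than its size divided by the bandwidth of the path it travels. The bandwidth figures below are hypothetical round numbers for illustration, not specifications of any device, and the function name is an assumption.

```python
GB = 10**9


def min_scan_time_s(dataset_bytes, bandwidth_bytes_per_s):
    """Lower bound on the time to stream a dataset once; ignores latency and compute."""
    return dataset_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    dataset = 100 * GB
    # Hypothetical bandwidths, chosen only to show the scale of the difference.
    for path, bw in [("SSD", 3 * GB), ("DRAM", 50 * GB)]:
        print(f"{path}: {min_scan_time_s(dataset, bw):.1f} s")
```

Under these assumed figures the scan takes 33.3 s from the SSD and 2.0 s from DRAM.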

Latency reduction

In-memory computing reduces latency by processing data directly within RAM, enabling real-time analytics and faster response times compared to traditional computing that relies on slower disk-based storage access.

Processing-in-memory (PIM)

Processing-in-memory (PIM) enhances in-memory computing by integrating computation directly within memory chips, significantly reducing data transfer latency and increasing processing speed compared to traditional computing architectures reliant on separate CPU and memory units.
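A toy model shows why PIM cuts data movement: summing values spread across several memory banks. The "banks" are Python lists and the number of words moved is the only metric; `host_sum` and `pim_sum` are hypothetical names, and real PIM hardware exposes very different programming interfaces.

```python
def host_sum(banks):
    """Traditional: every word is shipped over the memory bus to the CPU."""
    words_moved = sum(len(bank) for bank in banks)
    total = sum(sum(bank) for bank in banks)
    return total, words_moved


def pim_sum(banks):
    """PIM-style: each bank reduces its own data; only partial sums move."""
    partials = [sum(bank) for bank in banks]  # conceptually computed inside each bank
    words_moved = len(partials)
    return sum(partials), words_moved


if __name__ == "__main__":
    banks = [list(range(i * 1000, (i + 1) * 1000)) for i in range(8)]
    print(host_sum(banks))  # (31996000, 8000)
    print(pim_sum(banks))   # (31996000, 8)
```

Both approaches produce the same result, but the PIM version moves one word per bank instead of every element.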

Von Neumann bottleneck

In-memory computing addresses the von Neumann bottleneck by processing data directly within memory, reducing the latency and bandwidth limits imposed by constant data transfer between the CPU and memory in traditional computing architectures.
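The roofline model is a standard way to quantify this bottleneck: attainable performance is the lesser of peak compute throughput and memory bandwidth multiplied by arithmetic intensity (operations per byte moved). The hardware numbers in this sketch are hypothetical.

```python
def attainable_gflops(peak_gflops, bandwidth_gb_s, flops_per_byte):
    """Roofline: performance is capped either by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


def ridge_point(peak_gflops, bandwidth_gb_s):
    """Arithmetic intensity (flops per byte) needed to become compute-bound."""
    return peak_gflops / bandwidth_gb_s


if __name__ == "__main__":
    peak, bw = 1000.0, 100.0  # hypothetical processor: 1000 GFLOP/s, 100 GB/s
    # Vector add on doubles, c[i] = a[i] + b[i]: 24 bytes moved per 1 flop.
    print(attainable_gflops(peak, bw, 1 / 24))  # memory-bound, about 4.17
    print(ridge_point(peak, bw))                # 10.0
```

A kernel below the ridge point is limited by data transfer, not arithmetic, which is exactly the situation in-memory processing targets.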

DRAM-based computation

DRAM-based in-memory computing significantly reduces data transfer latency and energy consumption compared to traditional computing architectures by processing data directly within memory arrays.
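One published DRAM-based approach (Ambit) activates three DRAM rows at once; charge sharing leaves each bitline holding the bitwise majority of the three cells, and fixing the third row to all zeros or all ones turns that majority into AND or OR. The sketch below only models this logic on Python integers standing in for rows; the 64-bit row width and the function names are assumptions.

```python
ROW_BITS = 64  # hypothetical row width for the model
ALL_ONES = (1 << ROW_BITS) - 1


def triple_row_activate(a, b, c):
    """Bitwise majority of three rows, as produced by charge sharing on each bitline."""
    return ((a & b) | (b & c) | (a & c)) & ALL_ONES


def row_and(a, b):
    return triple_row_activate(a, b, 0)  # control row of zeros yields AND


def row_or(a, b):
    return triple_row_activate(a, b, ALL_ONES)  # control row of ones yields OR


if __name__ == "__main__":
    a, b = 0b1100, 0b1010
    print(bin(row_and(a, b)), bin(row_or(a, b)))  # 0b1000 0b1110
```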

Cache hierarchy

In-memory computing reduces latency and speeds up data processing by relying less on cache hierarchies, whereas traditional computing depends heavily on multi-level caches to bridge the speed gap between slow main memory and the fast CPU.
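The cost of a multi-level cache hierarchy can be estimated with the standard average memory access time (AMAT) recurrence, AMAT = hit time + miss rate * miss penalty, applied from the outermost cache inward. The latencies and miss rates below are hypothetical, expressed in CPU cycles.

```python
def amat(levels, memory_latency):
    """Average memory access time for a multi-level cache hierarchy.

    levels: list of (hit_time, miss_rate) pairs ordered from L1 outward.
    memory_latency: cost of reaching main memory after the last level misses.
    """
    penalty = memory_latency
    # Work inward: each level's AMAT becomes the miss penalty of the level above.
    for hit_time, miss_rate in reversed(levels):
        penalty = hit_time + miss_rate * penalty
    return penalty


if __name__ == "__main__":
    # Hypothetical: L1 (4 cycles, 10% misses), L2 (12 cycles, 20% misses), DRAM 200 cycles.
    print(f"{amat([(4, 0.10), (12, 0.20)], 200):.1f} cycles")  # 9.2 cycles
```

With these assumed figures the caches hide most of the 200-cycle memory latency, but only while miss rates stay low; workloads with poor locality push the average back toward the memory latency.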

Near-data processing

Near-data processing in in-memory computing significantly reduces latency and increases data throughput by performing computations directly within or adjacent to memory, outperforming traditional computing architectures that separate processing units from storage.
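Near-data processing is closely related to predicate pushdown in storage systems: filtering where the data lives so that only matching rows travel. This hypothetical sketch counts the rows moved by each approach; the "storage nodes" are Python lists and the function names are assumptions.

```python
def fetch_then_filter(storage_nodes, predicate):
    """Traditional: ship every row to the compute node, then filter there."""
    rows_moved, result = 0, []
    for rows in storage_nodes:
        rows_moved += len(rows)
        result.extend(r for r in rows if predicate(r))
    return result, rows_moved


def near_data_filter(storage_nodes, predicate):
    """Near-data: each storage node filters locally and ships only the matches."""
    rows_moved, result = 0, []
    for rows in storage_nodes:
        matches = [r for r in rows if predicate(r)]  # runs next to the data
        rows_moved += len(matches)
        result.extend(matches)
    return result, rows_moved


if __name__ == "__main__":
    nodes = [list(range(0, 100)), list(range(100, 200))]
    is_round = lambda r: r % 10 == 0
    print(fetch_then_filter(nodes, is_round)[1])  # 200
    print(near_data_filter(nodes, is_round)[1])   # 20
```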

Energy efficiency

In-memory computing significantly reduces energy consumption by minimizing data movement between memory and processors compared to traditional computing architectures.

Throughput scalability

In-memory computing achieves significantly higher throughput scalability than traditional computing by processing data directly in RAM, reducing latency and enabling faster real-time analytics at large scale.

In-memory computing vs Traditional computing Infographic

njnir.com
