TCP Offload Engine (TOE) reduces CPU overhead by handling TCP processing directly on the network interface card, improving throughput and lowering latency. Kernel networking relies on the operating system for TCP/IP stack processing, which can increase CPU usage but offers greater flexibility and easier debugging. Selecting between TCP Offload and kernel networking depends on balancing performance needs against system complexity and application requirements.

Table of Comparison

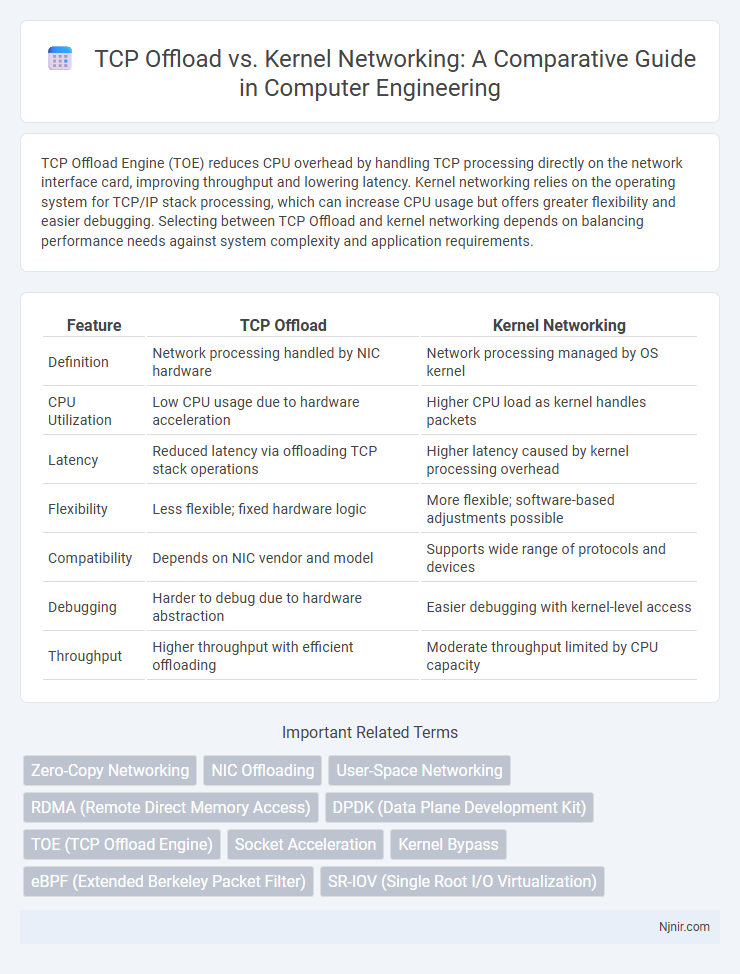

| Feature | TCP Offload | Kernel Networking |
|---|---|---|
| Definition | Network processing handled by NIC hardware | Network processing managed by OS kernel |
| CPU Utilization | Low CPU usage due to hardware acceleration | Higher CPU load as kernel handles packets |
| Latency | Reduced latency via offloading TCP stack operations | Higher latency caused by kernel processing overhead |
| Flexibility | Less flexible; fixed hardware logic | More flexible; software-based adjustments possible |
| Compatibility | Depends on NIC vendor and model | Supports wide range of protocols and devices |
| Debugging | Harder to debug due to hardware abstraction | Easier debugging with kernel-level access |
| Throughput | Higher throughput with efficient offloading | Moderate throughput limited by CPU capacity |

Introduction to TCP Offload and Kernel Networking

TCP Offload refers to the process where networking tasks typically handled by the CPU are transferred to specialized hardware, such as a Network Interface Card (NIC) with TCP Offload Engine (TOE) capabilities, reducing CPU load and improving throughput. Kernel Networking involves the operating system's kernel managing network protocols like TCP/IP directly, ensuring robust control and flexibility for processing network packets. Comparing both approaches highlights a trade-off between hardware acceleration efficiency with TCP Offload and the adaptability and extensive protocol support provided by Kernel Networking.

Architecture of TCP Offload Engines (TOE)

TCP Offload Engines (TOE) integrate dedicated hardware within network interface cards (NICs) to process TCP/IP stack operations, significantly reducing CPU load and improving network efficiency. These architectures implement protocol logic directly on the NIC's specialized processors, handling connection management, segmentation, and reassembly internally, which contrasts with kernel networking that relies on the host CPU for protocol processing. TOE design includes DMA engines and firmware that execute offload tasks, enabling faster data throughput and lower latency by minimizing kernel involvement in network packet processing.
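The segmentation and reassembly work mentioned above can be illustrated with a small software model. This is only a conceptual sketch: the names `segment` and `reassemble` are hypothetical, real TOE firmware also handles retransmission, windowing, and sequence-number wraparound, and none of this reflects any particular vendor's implementation.

```python
from typing import List, Tuple

# (sequence number of the first payload byte, payload)
Segment = Tuple[int, bytes]


def segment(data: bytes, mss: int, initial_seq: int = 0) -> List[Segment]:
    """Split a send buffer into MSS-sized segments tagged with sequence numbers."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [
        (initial_seq + offset, data[offset:offset + mss])
        for offset in range(0, len(data), mss)
    ]


def reassemble(segments: List[Segment], initial_seq: int = 0) -> bytes:
    """Rebuild the byte stream from segments that may arrive out of order."""
    stream = bytearray()
    expected = initial_seq
    for seq, payload in sorted(segments):
        if seq != expected:
            # A real stack would buffer and wait for retransmission here.
            raise ValueError(f"gap or overlap at sequence {seq}, expected {expected}")
        stream.extend(payload)
        expected += len(payload)
    return bytes(stream)
```

With kernel networking, the equivalent logic runs on the host CPU for every connection; with a TOE, it runs on the NIC and the host only sees the reassembled byte stream.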

Overview of Kernel-Based Networking Stack

The kernel-based networking stack in modern operating systems handles core TCP/IP processing tasks such as packet routing, error checking, and congestion control, using in-kernel resources to balance performance and system stability. Because the stack runs inside the kernel, it integrates tightly with other system components such as the scheduler, memory management, and packet filtering, at the cost of system-call, interrupt, and context-switch overhead on network operations. In contrast, TCP offload engines shift protocol processing from the CPU to specialized hardware but may introduce compatibility and management overhead compared to the kernel's centralized control and flexibility.

Performance Comparison: Throughput and Latency

TCP offload engines (TOE) enhance throughput by offloading TCP/IP stack processing from the CPU to specialized hardware, reducing CPU load and enabling higher packet processing rates compared to kernel networking. Kernel networking relies on general-purpose CPU processing, which can introduce higher latency and lower throughput under heavy network traffic due to context switching and interrupt handling overhead. Benchmark results vary with NIC, driver, and workload; cited figures reach up to 30% higher throughput and 20-40% lower latency in data center environments compared to traditional kernel-based network stacks, and should be validated against the target deployment.

CPU Utilization and Resource Efficiency

TCP Offload Engine (TOE) significantly reduces CPU utilization by handling TCP/IP processing directly on the network interface card, freeing the kernel from intensive protocol stack tasks. Kernel networking relies on the CPU to process all network protocol layers, leading to higher CPU load, especially under high traffic conditions. By offloading TCP processing, TOE enhances resource efficiency, enabling systems to allocate more CPU cycles to application workloads and improve overall network throughput.
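One rough way to see how much CPU time the kernel spends on this kind of work on Linux is to compare two snapshots of the aggregate `cpu` line in `/proc/stat`. The sketch below assumes the standard field order (user, nice, system, idle, iowait, irq, softirq, steal); the helper names are hypothetical. Softirq time also covers non-network work such as timers, so treat the result as an upper-bound indicator rather than a precise network cost.

```python
from typing import Dict

# Field order of the aggregate "cpu" line in Linux /proc/stat.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(stat_text: str) -> Dict[str, int]:
    """Extract the aggregate CPU counters from the text of /proc/stat."""
    for line in stat_text.splitlines():
        parts = line.split()
        if parts and parts[0] == "cpu":
            values = [int(v) for v in parts[1:1 + len(FIELDS)]]
            return dict(zip(FIELDS, values))
    raise ValueError("no aggregate 'cpu' line found")


def softirq_share(before: Dict[str, int], after: Dict[str, int]) -> float:
    """Fraction of CPU time spent in softirq context between two snapshots."""
    delta = {k: after.get(k, 0) - before.get(k, 0) for k in FIELDS}
    total = sum(delta.values())
    return delta["softirq"] / total if total else 0.0
```

In practice, the two snapshots would come from reading `/proc/stat` before and after a traffic test, once with offloads enabled and once without.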

Security Implications of TCP Offload

TCP Offload Engine (TOE) reduces CPU load by shifting TCP/IP processing to network hardware, improving throughput but introducing potential security risks. Offloading TCP processing to network interface cards can obscure packet inspection and complicate firewall rule enforcement, increasing vulnerability to malicious traffic undetected by host-based security tools. Kernel networking maintains full control over TCP stack processing, enabling comprehensive monitoring, logging, and security policy enforcement critical for detecting and mitigating network threats.
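As an illustration of the monitoring the kernel stack makes available, Linux exposes host-wide TCP counters in `/proc/net/snmp` as a header row and a value row, both prefixed with `Tcp:`. The sketch below parses that text; the function names are hypothetical, and the assumption is that connections processed entirely on a TOE may not be reflected in these host counters.

```python
from typing import Dict


def parse_tcp_counters(snmp_text: str) -> Dict[str, int]:
    """Parse the 'Tcp:' header and value rows from the text of /proc/net/snmp."""
    rows = [line.split() for line in snmp_text.splitlines() if line.startswith("Tcp:")]
    if len(rows) < 2:
        raise ValueError("expected a 'Tcp:' header row and a 'Tcp:' value row")
    names, values = rows[0][1:], rows[1][1:]
    return {name: int(value) for name, value in zip(names, values)}


def retransmit_ratio(counters: Dict[str, int]) -> float:
    """Share of transmitted segments that were retransmissions."""
    sent = counters.get("OutSegs", 0)
    return counters.get("RetransSegs", 0) / sent if sent else 0.0
```

A host-based monitoring agent could poll these counters and alert on sudden changes in resets or retransmissions.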

Scalability in High-Performance Environments

TCP Offload Engine (TOE) improves scalability in high-performance environments by offloading TCP/IP stack processing from the CPU to dedicated hardware, reducing CPU overhead and latency. Kernel networking relies on the host CPU for packet processing, which can become a bottleneck under heavy network loads, limiting scalability. TOE enables higher throughput and lower CPU utilization, making it suitable for data centers and cloud environments requiring efficient, scalable network performance.
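A short calculation shows why the host CPU can become the bottleneck. The sketch below computes the maximum Ethernet frame rate for a given link speed and the resulting CPU cycle budget per packet on a single core. The 10 Gb/s link and 3 GHz clock in the usage note are illustrative assumptions, not figures from a benchmark.

```python
# On the wire, every Ethernet frame is accompanied by an 8-byte preamble/SFD
# and a 12-byte inter-frame gap.
ETHERNET_OVERHEAD_BYTES = 20


def packets_per_second(link_bps: float, frame_bytes: int) -> float:
    """Maximum frame rate for a link, given the frame size including header and FCS."""
    return link_bps / ((frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8)


def cycles_per_packet(cpu_hz: float, pps: float) -> float:
    """CPU cycles one core can spend on each packet at the given packet rate."""
    return cpu_hz / pps


if __name__ == "__main__":
    for frame in (64, 1518):
        pps = packets_per_second(10e9, frame)
        budget = cycles_per_packet(3e9, pps)
        print(f"{frame}-byte frames: {pps:,.0f} pps, {budget:,.0f} cycles/packet")
```

At 10 Gb/s with minimum-size 64-byte frames, this works out to roughly 14.88 million packets per second, leaving about 200 cycles per packet on a 3 GHz core, which is why per-packet kernel overhead matters at high rates.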

Compatibility and Integration Challenges

TCP Offload Engine (TOE) enhances network performance by offloading TCP processing to dedicated hardware, yet it faces compatibility challenges with certain operating systems and legacy applications due to proprietary drivers and limited kernel support. Kernel networking, being integral to the OS, offers broader compatibility and seamless integration with existing networking stacks but may incur higher CPU utilization under heavy loads. Integrating TOE solutions requires careful alignment with the kernel's networking subsystems and thorough testing to avoid disruptions and ensure stable operation across diverse environments.

Use Cases and Industry Adoption Scenarios

TCP Offload Engine (TOE) accelerates network processing by shifting TCP/IP stack operations to specialized hardware, optimizing high-performance use cases such as data centers, cloud infrastructures, and financial services demanding low latency and high throughput. Kernel Networking relies on the OS CPU for TCP/IP processing, favored in general-purpose servers, legacy systems, and environments prioritizing flexibility and ease of software updates. Industry adoption leans towards TOE in scenarios requiring offloaded workloads for efficiency and reduced CPU overhead, while kernel-based networking remains prevalent for modularity and broader application compatibility.

Future Trends in Networking Acceleration

Networking acceleration increasingly pairs hardware offload with the kernel networking stack rather than replacing it, aiming to reduce CPU load and latency. Emerging trends emphasize programmable hardware, such as SmartNICs and DPUs, enabling customizable offloading and advanced packet processing at line rate. Future networking acceleration will rely on these adaptive solutions to support demanding workloads like AI, edge computing, and 5G infrastructure, ensuring scalable and efficient data transfer.

Zero-Copy Networking

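Zero-copy networking reduces CPU overhead by avoiding intermediate copies between application buffers and kernel socket buffers, so data moves between application memory (or the page cache) and the network hardware by DMA. A TOE can provide this in hardware, and the kernel stack offers it in software through interfaces such as sendfile.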
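On the kernel-networking side, a common software form of zero-copy is the `sendfile` system call, which Python exposes through `socket.sendfile()`. The sketch below sends a temporary file over a loopback TCP connection; the function names and the loopback setup are illustrative assumptions. `socket.sendfile()` uses `os.sendfile()` where the platform supports it and falls back to ordinary `send()` calls otherwise, so whether copies are actually avoided depends on the operating system.

```python
import os
import socket
import tempfile
from typing import Tuple


def send_file(sock: socket.socket, path: str) -> int:
    """Send a whole file over a connected TCP socket; returns bytes sent."""
    with open(path, "rb") as f:
        return sock.sendfile(f)


def loopback_demo(payload: bytes) -> Tuple[int, bytes]:
    """Write payload to a temp file, send it over loopback, return what arrived.

    Keep the payload small (tens of KiB): sender and receiver share one thread,
    so the data must fit in the socket buffers.
    """
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(payload)
        path = tmp.name
    try:
        with socket.create_server(("127.0.0.1", 0)) as listener:
            port = listener.getsockname()[1]
            with socket.create_connection(("127.0.0.1", port)) as client:
                conn, _ = listener.accept()
                with conn:
                    sent = send_file(client, path)
                    client.shutdown(socket.SHUT_WR)  # signal end of stream
                    received = bytearray()
                    while True:
                        chunk = conn.recv(65536)
                        if not chunk:
                            break
                        received.extend(chunk)
        return sent, bytes(received)
    finally:
        os.unlink(path)
```

The application never reads the file contents into its own buffer, which is the copy that zero-copy interfaces aim to remove.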

NIC Offloading

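NIC offloading shifts selected packet-processing tasks from the kernel to the network interface card, reducing CPU load and improving throughput. It ranges from stateless offloads such as checksum calculation and TCP segmentation, which leave the TCP state machine in the kernel, to a full TOE that moves connection state onto the NIC.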
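On Linux, the offloads a NIC currently has enabled are typically listed by `ethtool -k <interface>` as `name: on` or `name: off` lines, sometimes followed by `[fixed]`. The sketch below parses such output into a dictionary. The function name is hypothetical, and the parser takes the text as input so it can be run against captured output.

```python
from typing import Dict


def parse_offload_features(ethtool_output: str) -> Dict[str, bool]:
    """Map each feature name in `ethtool -k` output to True (on) or False (off)."""
    features: Dict[str, bool] = {}
    for line in ethtool_output.splitlines():
        name, sep, state = line.strip().partition(":")
        words = state.split()
        # Skip the "Features for <iface>:" header and anything without on/off.
        if sep and words and words[0] in ("on", "off"):
            features[name] = words[0] == "on"
    return features
```

For example, checking `features.get("tcp-segmentation-offload")` shows whether the kernel is handing segmentation to the NIC, which is a stateless offload and distinct from a full TOE.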

User-Space Networking

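User-space networking runs the network stack inside the application process rather than in the kernel, avoiding system-call and context-switch overhead and reducing latency for high-speed data transmission. It is an alternative to TOE rather than a form of it: protocol processing still runs on the host CPU, just outside the kernel.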

RDMA (Remote Direct Memory Access)

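RDMA lets the NIC read and write application memory on a remote host directly, bypassing the kernel networking stack on the data path and reducing CPU overhead and latency for high-throughput transfers. One RDMA transport, iWARP, runs over TCP and typically relies on a NIC-resident TCP offload engine.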

DPDK (Data Plane Development Kit)

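DPDK accelerates packet processing by moving the data plane into user space: poll-mode drivers access NIC queues directly, bypassing the kernel networking stack. DPDK does not itself provide a TCP stack or depend on a TCP Offload Engine, so TCP processing, where needed, is supplied by a separate user-space stack.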

TOE (TCP Offload Engine)

TCP Offload Engine (TOE) enhances network performance by transferring TCP/IP processing from the operating system kernel to dedicated hardware, reducing CPU utilization and improving throughput compared to traditional kernel networking.

Socket Acceleration

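Socket acceleration speeds up applications that use the standard sockets API without requiring code changes, by redirecting socket calls to a faster path such as a TOE. Offloading TCP/IP processing from the kernel to specialized hardware in this way reduces CPU load and improves network throughput.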

Kernel Bypass

Kernel bypass techniques let applications access NIC hardware queues directly, reducing latency and CPU overhead by removing system calls and kernel packet processing from the data path. Unlike TOE, which moves TCP processing into NIC hardware, kernel bypass usually keeps protocol processing on the host CPU in user space.

eBPF (Extended Berkeley Packet Filter)

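eBPF extends kernel networking with sandboxed programs attached to hooks such as XDP, traffic control, and sockets, enabling programmable, high-performance packet filtering, redirection, and monitoring inside the kernel. It is not a TCP offload mechanism: TCP processing stays in the kernel stack, although some NICs can offload XDP programs to hardware.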

SR-IOV (Single Root I/O Virtualization)

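SR-IOV lets a single PCIe NIC expose multiple virtual functions that can be assigned directly to virtual machines, bypassing the hypervisor's software switch and reducing host CPU utilization. Each guest still runs its own kernel networking stack on its virtual function, and the offloads available depend on what the NIC exposes per virtual function.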
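On Linux, an SR-IOV-capable NIC reports its virtual function limits through the sysfs files `sriov_totalvfs` and `sriov_numvfs` under the interface's `device` directory. The sketch below reads them. The function name and the `sysfs_root` parameter are hypothetical, with the parameter included so the code can be exercised against a test directory.

```python
from pathlib import Path
from typing import Optional, Tuple


def read_vf_counts(iface: str, sysfs_root: str = "/sys") -> Optional[Tuple[int, int]]:
    """Return (active VFs, maximum VFs) for an interface, or None without SR-IOV."""
    device = Path(sysfs_root) / "class" / "net" / iface / "device"
    try:
        total = int((device / "sriov_totalvfs").read_text())
        active = int((device / "sriov_numvfs").read_text())
    except (FileNotFoundError, NotADirectoryError):
        # The interface does not exist or its device is not SR-IOV capable.
        return None
    return active, total
```

A result such as `(4, 8)` would mean four virtual functions are currently enabled out of a hardware maximum of eight; `None` means the interface offers no SR-IOV support.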

TCP Offload vs Kernel Networking Infographic

njnir.com
