VLIW (Very Long Instruction Word) architecture relies on the compiler to schedule instructions statically, enabling multiple operations to execute in parallel within a single long instruction word. Superscalar processors dynamically issue multiple instructions per cycle using hardware-based scheduling and out-of-order execution, improving instruction-level parallelism adaptively. VLIW simplifies hardware complexity but demands sophisticated compiler design, while superscalar designs increase hardware complexity to achieve higher performance with flexible instruction dispatch.

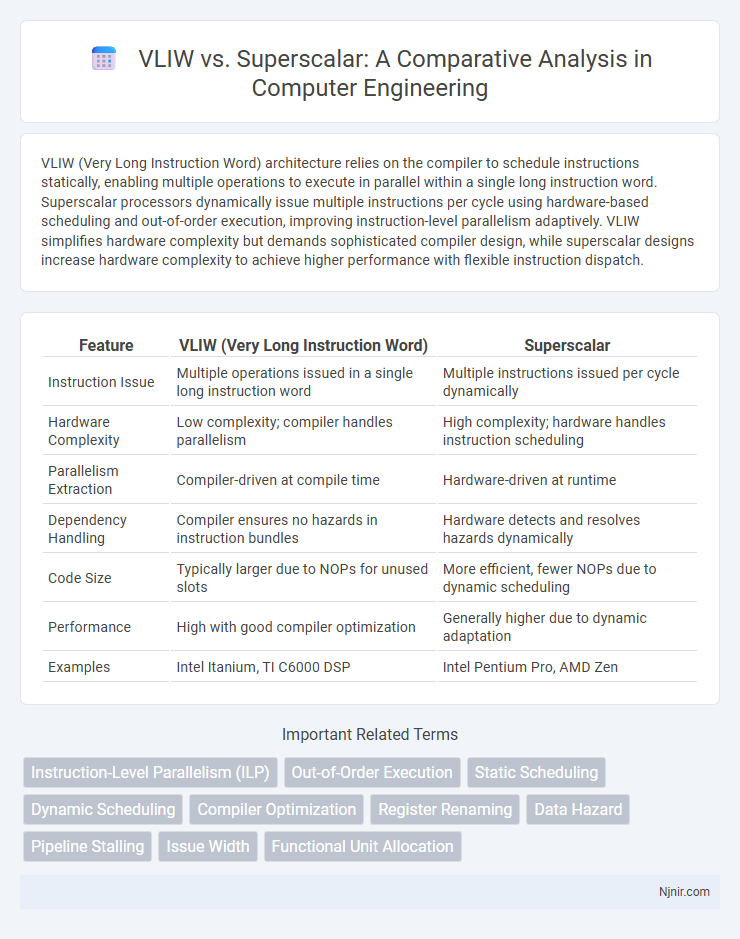

Table of Comparison

| Feature | VLIW (Very Long Instruction Word) | Superscalar |

|---|---|---|

| Instruction Issue | Multiple operations issued in a single long instruction word | Multiple instructions issued per cycle dynamically |

| Hardware Complexity | Low complexity; compiler handles parallelism | High complexity; hardware handles instruction scheduling |

| Parallelism Extraction | Compiler-driven at compile time | Hardware-driven at runtime |

| Dependency Handling | Compiler ensures no hazards in instruction bundles | Hardware detects and resolves hazards dynamically |

| Code Size | Typically larger due to NOPs for unused slots | More efficient, fewer NOPs due to dynamic scheduling |

| Performance | High with good compiler optimization | Generally higher due to dynamic adaptation |

| Examples | Intel Itanium, TI C6000 DSP | Intel Pentium Pro, AMD Zen |

Introduction to VLIW and Superscalar Architectures

VLIW (Very Long Instruction Word) architecture relies on the compiler to identify instruction-level parallelism, packing multiple operations into a single long instruction word executed simultaneously by multiple functional units. Superscalar architecture dynamically detects and issues multiple instructions per clock cycle using hardware-based scheduling and out-of-order execution to maximize parallelism. Both architectures aim to enhance CPU performance by exploiting instruction-level parallelism but differ fundamentally in complexity, with VLIW simplifying hardware design at the cost of compiler responsibility, while superscalar designs require sophisticated hardware logic for dynamic scheduling.

Core Concepts: VLIW vs Superscalar

VLIW (Very Long Instruction Word) architectures rely on the compiler to explicitly schedule multiple operations within a single long instruction word, enabling parallel execution without complex hardware-based dynamic scheduling. Superscalar processors dynamically issue multiple instructions per cycle using hardware mechanisms like out-of-order execution and register renaming to exploit instruction-level parallelism. The core distinction lies in VLIW's static scheduling by the compiler versus Superscalar's dynamic instruction dispatch and hazard resolution performed at runtime.

Instruction-Level Parallelism: Mechanisms and Techniques

VLIW (Very Long Instruction Word) architecture leverages static instruction-level parallelism by packing multiple operations into a single long instruction word, relying on the compiler to schedule parallel execution and eliminate hazards at compile time. In contrast, Superscalar processors exploit dynamic instruction-level parallelism by issuing multiple instructions per cycle through hardware-based scheduling, dependency checking, and out-of-order execution to optimize runtime throughput. The key distinction lies in VLIW's compiler-driven parallelism extraction versus Superscalar's hardware-driven dynamic scheduling, impacting design complexity and adaptability to varying instruction streams.

Compiler Role in VLIW vs Superscalar

In VLIW architectures, the compiler plays a crucial role by performing extensive static instruction scheduling, explicitly determining parallelism and packing multiple operations into a single long instruction word. Superscalar processors rely more on dynamic hardware mechanisms like instruction dispatch, out-of-order execution, and dependency checking to extract parallelism at runtime, reducing the compiler's burden. Consequently, VLIW compilers require sophisticated analysis and optimization to maximize instruction-level parallelism, whereas superscalar designs shift complexity towards hardware for dynamic instruction-level parallelism management.

Hardware Complexity and Scalability

VLIW (Very Long Instruction Word) architectures simplify hardware complexity by relying on the compiler to schedule instructions, which reduces the need for complex dynamic instruction scheduling and out-of-order execution hardware found in Superscalar designs. Superscalar processors require sophisticated hardware mechanisms such as multiple issue units, branch predictors, and register renaming to dynamically extract instruction-level parallelism, significantly increasing hardware complexity and power consumption. Scalability in VLIW improves with wider issue widths since complexity grows linearly with the number of operations per cycle, whereas Superscalar scalability is limited by the exponential increase in hardware complexity needed to maintain dynamic scheduling and hazard resolution.

Performance Analysis and Benchmarks

VLIW (Very Long Instruction Word) architectures achieve high instruction-level parallelism by relying on the compiler to schedule multiple operations in a single long instruction, resulting in predictable performance but limited dynamic optimization. Superscalar processors dynamically issue multiple instructions per cycle using hardware mechanisms like out-of-order execution and branch prediction, often yielding superior performance on diverse workloads. Benchmark results such as SPEC CPU and Dhrystone commonly show superscalar designs outperforming VLIW in real-world applications due to their adaptive execution capabilities and better handling of instruction dependencies.

Power Efficiency and Resource Utilization

VLIW architectures achieve power efficiency by simplifying hardware control logic through static instruction scheduling, minimizing dynamic power consumption but increasing code size. Superscalar processors improve resource utilization with dynamic instruction scheduling and out-of-order execution, allowing better parallelism but at the cost of higher power due to complex control hardware. Power efficiency in VLIW is favored in embedded systems, while superscalar designs excel in performance-critical applications with flexible resource management despite increased power usage.

Suitability for Different Workloads

VLIW architectures excel in predictable workloads with high instruction-level parallelism, such as multimedia and scientific computations, due to their static scheduling and simplified hardware. Superscalar processors are more versatile for dynamic and irregular workloads like general-purpose computing and gaming, leveraging dynamic scheduling and out-of-order execution to optimize performance. The choice depends on workload characteristics: VLIW favors deterministic code with compiler-optimized parallelism, while superscalar suits diverse and unpredictable instruction streams requiring runtime flexibility.

Industry Adoption and Real-World Examples

VLIW architectures, notably used in Intel's Itanium processors, offer simpler hardware by delegating instruction scheduling to compilers, but have seen limited industry adoption due to challenges in compiler design and less flexibility in dynamic execution. Superscalar processors, adopted widely by companies like AMD and Intel in mainstream CPUs such as the AMD Ryzen and Intel Core series, dynamically issue multiple instructions per cycle using complex hardware to optimize performance across diverse workloads. The dominance of superscalar designs in consumer and enterprise markets reflects their adaptability and robust support in software ecosystems, making them the prevailing choice for high-performance general-purpose computing.

Future Trends in Processor Design

Future trends in processor design emphasize enhanced parallelism with VLIW (Very Long Instruction Word) architectures leveraging static scheduling for simpler hardware and energy efficiency, while superscalar processors continue adopting dynamic scheduling for improved instruction-level parallelism and adaptability. Emerging hybrid models combining VLIW's predictable execution with superscalar's flexibility aim to optimize performance and power consumption. Advances in machine learning-driven compiler optimization and speculation techniques further shape the evolution of VLIW and superscalar designs, targeting next-generation high-performance and low-power computing environments.

Instruction-Level Parallelism (ILP)

VLIW architectures achieve higher Instruction-Level Parallelism by relying on the compiler to statically schedule multiple operations per instruction, whereas Superscalar processors dynamically extract ILP at runtime through hardware-based instruction dispatch and out-of-order execution.

Out-of-Order Execution

VLIW architecture relies on compiler-scheduled instruction-level parallelism without hardware out-of-order execution, while superscalar processors dynamically execute instructions out-of-order using complex hardware mechanisms to optimize performance.

Static Scheduling

VLIW architectures use static scheduling by the compiler to explicitly define instruction-level parallelism, whereas superscalar processors rely on dynamic scheduling hardware to detect and execute instructions in parallel at runtime.

Dynamic Scheduling

Dynamic scheduling in superscalar architectures enables out-of-order instruction execution for improved performance, whereas VLIW relies on static scheduling determined at compile-time, limiting runtime flexibility.

Compiler Optimization

VLIW architectures rely heavily on advanced compiler optimization to statically schedule parallel instruction execution, whereas superscalar processors depend on dynamic hardware-level scheduling to optimize instruction-level parallelism.

Register Renaming

VLIW architectures rely on the compiler for instruction scheduling and do not typically implement hardware register renaming, while superscalar processors use dynamic hardware register renaming to eliminate false data dependencies and improve parallel instruction execution.

Data Hazard

VLIW architectures mitigate data hazards through static scheduling by the compiler, whereas Superscalar processors handle data hazards dynamically using hardware-based techniques like scoreboarding and register renaming.

Pipeline Stalling

VLIW architectures reduce pipeline stalling by relying on compiler-scheduled parallelism to minimize hazards, while superscalar processors dynamically schedule instructions but face increased pipeline stalls due to runtime dependency and resource conflicts.

Issue Width

VLIW architecture issues multiple fixed-width instructions per cycle maximizing parallelism, while superscalar dynamically issues variable-width multiple instructions per cycle based on runtime hardware availability.

Functional Unit Allocation

VLIW architectures allocate multiple functional units statically at compile-time for parallel instruction execution, whereas superscalar processors dynamically schedule and allocate functional units at runtime to maximize instruction-level parallelism.

VLIW vs Superscalar Infographic

njnir.com

njnir.com