Parallelism involves executing multiple processes simultaneously to speed up computation, often leveraging multi-core processors or distributed systems. Concurrency, on the other hand, refers to managing multiple tasks in overlapping time periods, improving resource utilization and responsiveness without necessarily running tasks simultaneously. Understanding the distinction between parallelism and concurrency is essential for designing efficient algorithms and optimizing system performance in computer engineering.

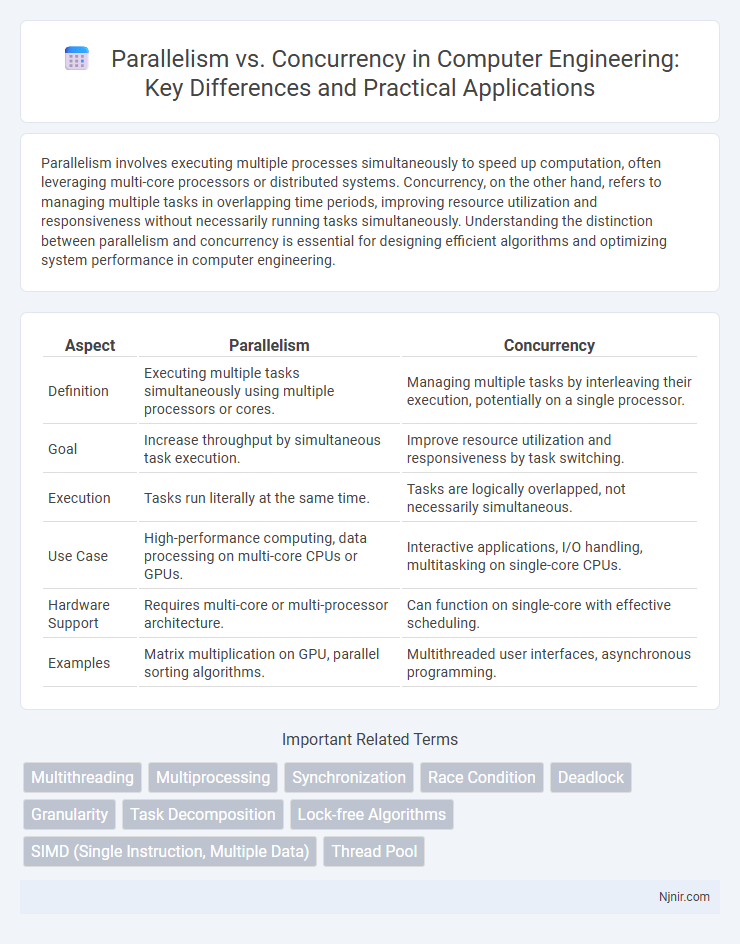

Table of Comparison

| Aspect | Parallelism | Concurrency |
|---|---|---|
| Definition | Executing multiple tasks simultaneously using multiple processors or cores. | Managing multiple tasks by interleaving their execution, potentially on a single processor. |
| Goal | Increase throughput or cut run time through simultaneous task execution. | Improve resource utilization and responsiveness through task switching. |
| Execution | Tasks run literally at the same time. | Tasks overlap logically, not necessarily simultaneously. |
| Use Case | High-performance computing, data processing on multi-core CPUs or GPUs. | Interactive applications, I/O handling, multitasking on single-core CPUs. |
| Hardware Support | Requires multiple execution units (multi-core or multi-processor CPUs, GPUs, SIMD units). | Works on a single core through scheduling (time-slicing). |
| Examples | Matrix multiplication on a GPU, parallel sorting algorithms. | Multithreaded user interfaces, asynchronous programming. |

Introduction to Parallelism and Concurrency

Parallelism involves executing multiple tasks simultaneously by utilizing multiple processors or cores to improve computational speed, while concurrency refers to managing multiple tasks by interleaving their execution, enabling efficient resource use without necessarily running tasks at the same time. Parallelism is often described as a special case of concurrency that targets performance through simultaneous execution, whereas concurrency emphasizes structuring, scheduling, and coordinating tasks so that multiple activities can make progress. Understanding the distinction helps in designing systems that make the most of both processing power and responsiveness in various computing environments.

Defining Parallelism in Computer Engineering

Parallelism in computer engineering refers to the simultaneous execution of multiple computations or processes to increase computational speed and efficiency. It involves dividing tasks into smaller sub-tasks that can be processed at the same time on multiple processors or cores, whether within a single machine or across a distributed system, leveraging hardware such as multi-core CPUs and GPUs. This contrasts with concurrency, which focuses on managing multiple tasks in overlapping time periods rather than strictly simultaneously.

Understanding Concurrency in Computing Systems

Concurrency in computing systems refers to the execution of multiple tasks or processes overlapping in time, allowing efficient resource utilization and improved system responsiveness. It involves managing shared resources, synchronization, and communication between processes to prevent conflicts and ensure correct program behavior. Concurrency enables better handling of I/O-bound and high-latency operations, enhancing overall system throughput and scalability.
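To make the I/O-bound case concrete, here is a minimal sketch using Python's standard `asyncio` module (an illustrative choice; the text names no language). The function `fake_io` is hypothetical and uses `asyncio.sleep` to stand in for a network or disk wait. All three waits overlap on a single thread, so the total time is close to the longest wait rather than the sum.

```python
import asyncio
import time

async def fake_io(name: str, delay: float) -> str:
    # Hypothetical I/O call: asyncio.sleep yields to the event loop,
    # so other coroutines run while this one is waiting.
    await asyncio.sleep(delay)
    return name

async def run_all() -> list[str]:
    # Three waits overlap on one thread; gather returns results in call order.
    return await asyncio.gather(
        fake_io("a", 0.2), fake_io("b", 0.2), fake_io("c", 0.2)
    )

start = time.perf_counter()
results = asyncio.run(run_all())
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")  # roughly 0.2 s, not 0.6 s
```

No extra cores are involved here: the speedup comes entirely from not sitting idle during waits.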

Key Differences Between Parallelism and Concurrency

Parallelism involves executing multiple tasks simultaneously by utilizing multiple processors or cores, whereas concurrency refers to managing multiple tasks whose execution overlaps in time, which can be achieved even by interleaving them on a single processor to improve responsiveness. Parallelism requires hardware support for true simultaneous execution, while concurrency relies on scheduling and task management to handle multiple operations. Performance gains in parallelism come from dividing workloads across execution units, whereas concurrency improves efficiency through time-slicing and resource sharing.
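Interleaving without simultaneity can be shown with a tiny cooperative scheduler, sketched here in Python with generators. The names `task` and `round_robin` are hypothetical illustrations, not a standard API. Each `yield` is a point where a task hands control back; the tasks make progress together, yet only one step ever runs at a time on a single thread.

```python
from collections import deque
from typing import Generator, Iterable

def task(name: str, steps: int) -> Generator[str, None, None]:
    # Each yield is a point where the task voluntarily gives up the CPU.
    for i in range(steps):
        yield f"{name}{i}"

def round_robin(tasks: Iterable[Generator[str, None, None]]) -> list[str]:
    # Single-threaded scheduler: run one step of the task at the front of the
    # queue, then move it to the back. Tasks interleave but never overlap.
    queue = deque(tasks)
    trace: list[str] = []
    while queue:
        current = queue.popleft()
        try:
            trace.append(next(current))
        except StopIteration:
            continue  # task finished; drop it from the queue
        queue.append(current)
    return trace

print(round_robin([task("A", 3), task("B", 2)]))
# ['A0', 'B0', 'A1', 'B1', 'A2']
```

This is concurrency in its purest form: the steps of A and B alternate, but adding cores would not change the trace because nothing here runs in parallel.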

Advantages of Parallelism in Processing

Parallelism enhances processing speed by dividing tasks into smaller sub-tasks that run simultaneously across multiple processors or cores, significantly reducing execution time. It improves resource utilization by distributing workloads across the available hardware, leading to better throughput in complex computations and data-intensive applications. Parallelism can also improve scalability, letting a system take on larger workloads by adding processing units, although the achievable speedup is limited by the portion of the work that must run serially and by the cost of coordinating the workers.
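The limit on adding processing units is commonly quantified with Amdahl's law (not named in the original text): if a fraction $p$ of the work can be parallelized across $N$ workers, the ideal speedup is $1 / ((1 - p) + p/N)$. The small Python helper below, `amdahl_speedup` (a hypothetical name), evaluates that formula. It ignores communication and synchronization overhead, so real speedups are usually lower.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Ideal speedup from Amdahl's law: 1 / ((1 - p) + p / N)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if workers < 1:
        raise ValueError("workers must be >= 1")
    serial = 1.0 - parallel_fraction  # share of the work that cannot be split
    return 1.0 / (serial + parallel_fraction / workers)

for n in (1, 2, 8, 64, 1024):
    print(n, round(amdahl_speedup(0.9, n), 2))
# With p = 0.9 the speedup approaches, but never exceeds, 1 / (1 - 0.9) = 10.
```

Even with 90% of the work parallelizable, going from 64 to 1024 workers buys comparatively little, which is why "just add more cores" has diminishing returns.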

Benefits of Concurrency for System Design

Concurrency enhances system design by enabling multiple tasks to make progress independently, improving resource utilization and responsiveness. It allows for better handling of I/O-bound operations by overlapping waiting times with productive computation. This approach reduces latency and increases throughput, making systems more efficient and scalable under varying workloads.
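Responsiveness can be illustrated with a Python `asyncio` sketch in which a periodic "heartbeat" task (standing in for, say, a UI refresh) keeps running while a slow request is pending. Both `slow_io` and `heartbeat` are hypothetical names, and `asyncio.sleep` stands in for real I/O.

```python
import asyncio

async def slow_io(delay: float) -> str:
    # Hypothetical slow network or disk request.
    await asyncio.sleep(delay)
    return "data"

async def heartbeat(interval: float, ticks: list[float]) -> None:
    # Periodic work that should not freeze while slow_io is waiting.
    loop = asyncio.get_running_loop()
    while True:
        ticks.append(loop.time())
        await asyncio.sleep(interval)

async def main() -> tuple[str, int]:
    ticks: list[float] = []
    hb = asyncio.create_task(heartbeat(0.05, ticks))
    result = await slow_io(0.3)  # the event loop keeps running heartbeat meanwhile
    hb.cancel()
    try:
        await hb
    except asyncio.CancelledError:
        pass  # expected: we cancelled the heartbeat ourselves
    return result, len(ticks)

result, n_ticks = asyncio.run(main())
print(result, n_ticks)
```

The heartbeat records several ticks during the 0.3 s wait, showing that waiting on one task does not block the others.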

Challenges in Implementing Parallelism and Concurrency

Challenges in implementing parallelism and concurrency include managing data synchronization and avoiding race conditions, which can lead to inconsistent program states. Ensuring efficient task scheduling and load balancing is critical to maximize CPU utilization while minimizing overhead caused by context switching. Debugging and testing concurrent programs remain complex due to non-deterministic behavior and potential deadlocks.

Real-World Applications: Parallelism vs. Concurrency

Parallelism enables simultaneous execution of multiple tasks, significantly improving performance in data-intensive applications such as scientific simulations, image processing, and real-time analytics. Concurrency enhances system responsiveness by managing multiple tasks at overlapping time intervals, crucial for web servers, interactive applications, and database management systems. High-performance computing relies on parallelism to accelerate complex computations, while operating systems use concurrency to efficiently handle multiple processes and user inputs.

Hardware and Software Support for Parallel and Concurrent Systems

Hardware support for parallel systems includes multicore processors, which run separate instruction streams at the same time, and SIMD (Single Instruction, Multiple Data) units, which apply one instruction to many data elements at once, while concurrency relies on hardware features like interrupts and multithreading to manage overlapping task execution. Software support for parallelism includes programming models such as OpenMP and CUDA that facilitate task decomposition and synchronization, whereas concurrency is typically managed through threading libraries and event-driven frameworks that handle task scheduling and coordination. Effective design of parallel and concurrent systems requires integrating hardware capabilities with software constructs to optimize resource utilization and minimize latency.

Choosing the Right Approach: Factors and Best Practices

Choosing between parallelism and concurrency depends on the nature of the problem and the available hardware: parallelism suits CPU-bound tasks that benefit from simultaneous execution, while concurrency excels at keeping many tasks, especially I/O-bound ones, progressing by interleaving them for responsiveness. Factors such as task dependencies, resource availability, and latency tolerance guide the decision. Best practices include analyzing workload characteristics and choosing an appropriate programming model: multithreading or asynchronous programming for concurrency, and parallel frameworks such as OpenMP or CUDA for parallelism.

Multithreading

Multithreading enhances concurrency by enabling multiple threads to execute overlapping tasks within a single program, while parallelism involves simultaneously running multiple threads on multiple processors to improve performance.
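A minimal Python sketch with the standard `threading` module shows threads overlapping blocking waits; `download` is a hypothetical function and `time.sleep` stands in for blocking I/O. Note that in CPython the global interpreter lock lets only one thread run Python bytecode at a time (outside the optional free-threaded build), so threads like these give concurrency for I/O-bound work but not parallel speedup for CPU-bound Python code.

```python
import threading
import time

def download(name: str, delay: float, results: dict[str, float]) -> None:
    # time.sleep stands in for blocking I/O; CPython releases the GIL while
    # sleeping, so the other threads keep running in the meantime.
    time.sleep(delay)
    results[name] = delay  # each thread writes its own distinct key

results: dict[str, float] = {}
threads = [
    threading.Thread(target=download, args=(f"file{i}", 0.2, results))
    for i in range(4)
]
start = time.perf_counter()
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every thread to finish
elapsed = time.perf_counter() - start
print(sorted(results), f"{elapsed:.2f}s")  # about 0.2 s, not 0.8 s
```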

Multiprocessing

Multiprocessing enhances parallelism by utilizing multiple CPU cores to execute processes simultaneously, improving performance compared to concurrency, which manages multiple tasks by interleaving execution on a single core.
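For CPU-bound work, a sketch using Python's `concurrent.futures.ProcessPoolExecutor` splits a prime count into ranges and runs each range in a separate worker process. Each process has its own interpreter, so the ranges can run on different cores at the same time. The helpers `count_primes`, `split_range`, and `parallel_prime_count` are hypothetical names, and trial division is chosen only because it is simple, not because it is fast.

```python
import math
import os
from concurrent.futures import ProcessPoolExecutor

def count_primes(bounds: tuple[int, int]) -> int:
    # CPU-bound work: count primes in [lo, hi) by trial division.
    lo, hi = bounds
    count = 0
    for n in range(max(lo, 2), hi):
        if all(n % d for d in range(2, math.isqrt(n) + 1)):
            count += 1
    return count

def split_range(limit: int, parts: int) -> list[tuple[int, int]]:
    # Divide [0, limit) into at most `parts` contiguous, independent ranges.
    step = max(1, math.ceil(limit / parts))
    return [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]

def parallel_prime_count(limit: int, workers: int) -> int:
    # Each range runs in its own worker process (with its own GIL),
    # so ranges can execute on different cores simultaneously.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, split_range(limit, workers)))

if __name__ == "__main__":
    # The guard is required where workers are started by spawning a fresh
    # interpreter (the default on Windows and macOS).
    workers = os.cpu_count() or 1
    print(parallel_prime_count(100_000, workers))  # 9592
```

Processes avoid the GIL limitation shown for threads, at the cost of pickling arguments and results between processes, so each chunk should carry enough work to outweigh that overhead.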

Synchronization

Synchronization ensures coordinated access to shared resources, preventing race conditions in both parallel and concurrent programs.
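A lock is the most common synchronization primitive. In this Python sketch (the `Counter` class and `hammer` function are hypothetical), `threading.Lock` serializes the read-modify-write inside `increment`, so no update is lost no matter how the eight threads interleave.

```python
import threading

class Counter:
    """A shared counter whose increments are serialized by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Only one thread at a time may execute this read-modify-write.
        with self._lock:
            self.value += 1

def hammer(counter: Counter, times: int) -> None:
    for _ in range(times):
        counter.increment()

counter = Counter()
workers = [threading.Thread(target=hammer, args=(counter, 10_000)) for _ in range(8)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(counter.value)  # 80000
```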

Race Condition

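Race conditions occur when multiple threads or processes access shared data without adequate synchronization, at least one of them writes, and the outcome depends on the timing of their execution. They can arise in any concurrent program, including parallel ones, where simultaneous execution makes unlucky interleavings more likely rather than less.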
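Real races depend on timing and are hard to reproduce, so this Python sketch forces the problematic interleaving deterministically with generators: each `yield` marks where a preemptive scheduler could switch threads. The names `deposit` and `run_round_robin` are hypothetical. Both deposits read the balance before either writes, so one update is lost (the classic "lost update").

```python
from typing import Generator

def deposit(account: dict[str, int], amount: int) -> Generator[None, None, None]:
    current = account["balance"]           # step 1: read the shared value
    yield                                  # a context switch may happen here
    account["balance"] = current + amount  # step 2: write back a stale result

def run_round_robin(tasks: list[Generator[None, None, None]]) -> None:
    # Advance every unfinished task by one step per pass, so both reads
    # happen before either write.
    pending = list(tasks)
    while pending:
        for t in list(pending):
            try:
                next(t)
            except StopIteration:
                pending.remove(t)

account = {"balance": 0}
run_round_robin([deposit(account, 100), deposit(account, 50)])
print(account["balance"])  # 50, not 150: the first deposit was lost
```

Wrapping the read and write in a lock, as in a synchronized counter, makes the two steps indivisible and removes the race.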

Deadlock

Deadlock occurs in both parallelism and concurrency when processes or threads wait indefinitely for resources, preventing system progress and requiring careful management of resource allocation and synchronization to resolve.
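One standard prevention technique is to make every thread acquire locks in the same global order, which rules out the circular wait that deadlock needs. The Python sketch below (the `Account` class, `transfer`, and `shuffle` are hypothetical) runs transfers in opposite directions at the same time; ordering the two locks by `id()` keeps both threads from each holding one lock while waiting for the other.

```python
import threading

class Account:
    """Hypothetical account guarded by its own lock (illustrative only)."""

    def __init__(self, balance: int) -> None:
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # threading.Lock is not reentrant; locking it twice would hang
    # Locking src then dst in argument order could deadlock when another thread
    # transfers dst -> src at the same time. Sorting by id() gives every thread
    # the same acquisition order instead.
    first, second = sorted((src, dst), key=id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount

def shuffle(src: Account, dst: Account, times: int) -> None:
    for _ in range(times):
        transfer(src, dst, 1)

a, b = Account(1_000), Account(1_000)
t1 = threading.Thread(target=shuffle, args=(a, b, 10_000))
t2 = threading.Thread(target=shuffle, args=(b, a, 10_000))
t1.start()
t2.start()
t1.join(timeout=10)
t2.join(timeout=10)
print(t1.is_alive() or t2.is_alive(), a.balance + b.balance)  # False 2000
```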

Granularity

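Granularity is the amount of computation in each task relative to the cost of creating, scheduling, and synchronizing it. Fine-grained decomposition exposes more opportunities for parallelism but adds overhead, while coarse-grained tasks amortize that overhead at the risk of load imbalance; concurrent schedulers face the same trade-off when deciding how finely to split work into interleaved units.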

Task Decomposition

Task decomposition in parallelism splits a problem into independent subtasks executed simultaneously, while in concurrency, it breaks tasks into interleaved units managed to optimize resource sharing and responsiveness.
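The decompose, execute, combine pattern can be sketched in Python with `concurrent.futures`. The helpers `chunked`, `sum_of_squares`, and `decomposed_sum_of_squares` are hypothetical. The example passes a `ThreadPoolExecutor`, so in CPython the chunks run concurrently; passing a `ProcessPoolExecutor` instead (inside an `if __name__ == "__main__":` guard) would let CPU-bound chunks run in parallel without changing the decomposition.

```python
from concurrent.futures import Executor, ThreadPoolExecutor
from typing import Sequence

def chunked(data: Sequence[int], n_chunks: int) -> list[Sequence[int]]:
    # Split data into at most n_chunks contiguous, independent slices.
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

def sum_of_squares(chunk: Sequence[int]) -> int:
    # Each chunk depends only on its own elements, so chunks can run
    # in any order, or at the same time.
    return sum(x * x for x in chunk)

def decomposed_sum_of_squares(executor: Executor, data: Sequence[int], n_chunks: int) -> int:
    # Decompose -> run independent subtasks -> combine the partial results.
    partials = executor.map(sum_of_squares, chunked(data, n_chunks))
    return sum(partials)

data = list(range(1, 101))
with ThreadPoolExecutor(max_workers=4) as pool:
    total = decomposed_sum_of_squares(pool, data, 4)
print(total)  # 338350
```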

Lock-free Algorithms

Lock-free algorithms let multiple threads operate on shared data without blocking on locks, typically by using atomic operations such as compare-and-swap, so that a stalled thread cannot prevent the others from making progress. They benefit both concurrent and parallel programs, where lock-based synchronization can otherwise introduce contention and delays.

SIMD (Single Instruction, Multiple Data)

SIMD (Single Instruction, Multiple Data) enhances parallelism by executing the same instruction simultaneously across multiple data points, optimizing performance in data-parallel tasks compared to general concurrency models.

Thread Pool

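Thread pools improve concurrency by reusing a fixed set of worker threads for many tasks, which avoids the cost of creating a thread per task and bounds resource use. They do not by themselves guarantee parallelism, which requires multiple cores executing those threads simultaneously.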
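A minimal Python sketch with `concurrent.futures.ThreadPoolExecutor` shows the reuse: ten simulated requests are handled by at most three worker threads. The `handle` function is hypothetical and `time.sleep` stands in for blocking I/O; `thread_name_prefix` is used only to make the reused workers visible.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

def handle(request_id: int) -> tuple[int, str]:
    time.sleep(0.05)  # simulated blocking I/O
    # Report which pooled worker thread served this request.
    return request_id, threading.current_thread().name

with ThreadPoolExecutor(max_workers=3, thread_name_prefix="worker") as pool:
    futures = [pool.submit(handle, i) for i in range(10)]
    results = [f.result() for f in futures]  # results in submission order

workers_used = {name for _, name in results}
print(len(results), sorted(workers_used))
```

Whether those three threads actually run at the same moment depends on the hardware and, in CPython, on the GIL, which is the distinction the paragraph above draws.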

njnir.com