A qubit can be prepared in a superposition of the 0 and 1 states and can be entangled with other qubits, whereas a classical bit always holds exactly one of the two values. Quantum algorithms use superposition, entanglement, and interference to solve certain problems, such as factoring large integers or simulating quantum systems, in far fewer steps than the best known classical methods. The advantage is problem-specific: measuring a qubit returns a single 0 or 1, so a quantum computer does not simply evaluate every possibility at once, and many everyday tasks see no speedup.

Table of Comparison

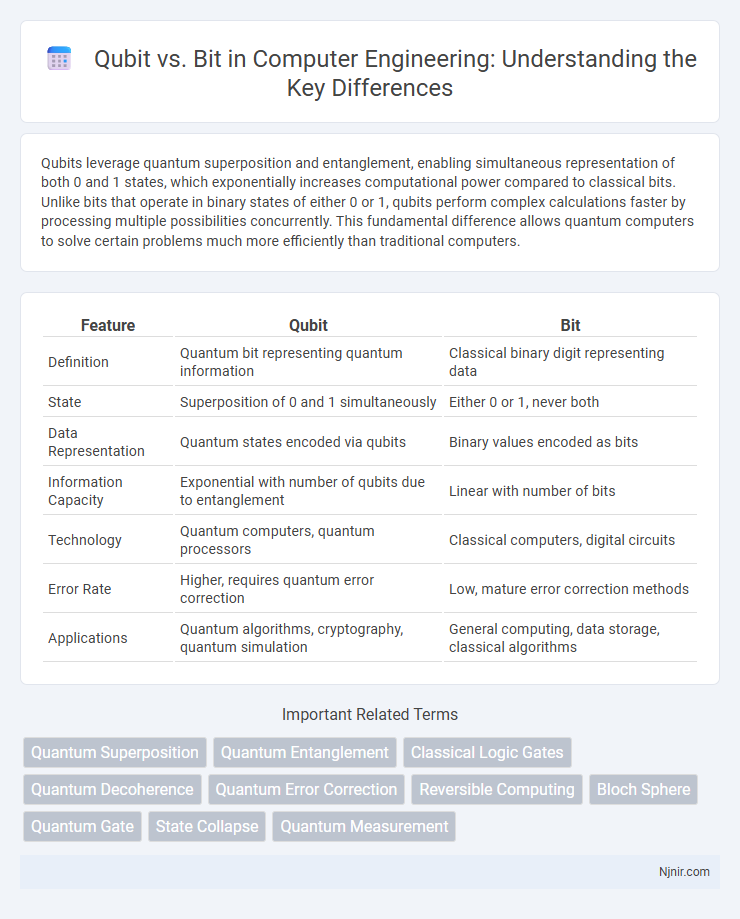

| Feature | Qubit | Bit |
|---|---|---|
| Definition | Quantum bit, the basic unit of quantum information | Classical binary digit, the basic unit of classical data |
| State | Superposition of 0 and 1 described by two complex amplitudes; measurement yields 0 or 1 | Either 0 or 1, never both |
| Data Representation | Quantum states encoded in qubits | Binary values encoded as bits |
| Information Capacity | Describing n qubits takes 2^n amplitudes, but measurement yields at most n classical bits | n bits store n bits of information |
| Technology | Quantum computers, quantum processors | Classical computers, digital circuits |
| Error Rate | Higher; requires quantum error correction | Low; mature error correction methods |
| Applications | Quantum algorithms, cryptography, quantum simulation | General computing, data storage, classical algorithms |

Introduction to Qubits and Classical Bits

Classical bits represent information as either 0 or 1 and form the foundation of traditional computing systems. Qubits can be placed in a superposition of 0 and 1, and quantum algorithms combine superposition with entanglement and interference to gain an advantage on specific problems. This difference is why quantum computers are expected to tackle some problems in cryptography, optimization, and simulation that are impractical for classical computers, although the size of the advantage depends heavily on the problem.

Fundamental Differences: Qubit vs Bit

A qubit, or quantum bit, differs from a classical bit in that its state is a superposition: a pair of complex amplitudes, one for 0 and one for 1, whose squared magnitudes sum to 1. A classical bit holds a single binary value of either 0 or 1. Qubits also exhibit entanglement and coherence, which certain quantum algorithms use to outperform the deterministic operations of classical bits. Measuring a qubit collapses its state to a definite 0 or 1, with probabilities given by the squared magnitudes of the amplitudes, while a classical bit keeps a stable and definite state throughout a computation and can be read without being disturbed.
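
The amplitude picture and the effect of measurement can be sketched in a few lines of plain Python. This is a minimal illustration, not a simulator: the function names `normalize`, `probabilities`, and `measure` are made up for this example, and the random number generator stands in for the intrinsic randomness of a real measurement.

```python
import math
import random

def normalize(alpha, beta):
    """Scale two complex amplitudes so that |alpha|^2 + |beta|^2 = 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm

def probabilities(alpha, beta):
    """Born rule: probability of reading 0 and probability of reading 1."""
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(alpha, beta, rng=random):
    """Simulate one measurement; return the outcome and the collapsed state."""
    p0, _ = probabilities(alpha, beta)
    if rng.random() < p0:
        return 0, (1 + 0j, 0j)   # state is now exactly |0>
    return 1, (0j, 1 + 0j)       # state is now exactly |1>

# An equal superposition of |0> and |1> (illustrative choice of amplitudes).
alpha, beta = normalize(1 + 0j, 1 + 0j)
p0, p1 = probabilities(alpha, beta)
outcome, collapsed = measure(alpha, beta, random.Random(0))
print(p0, p1, outcome, collapsed)
```

After `measure` returns, the superposition is gone: measuring the collapsed state again gives the same outcome every time, which is the behavior a classical bit has from the start.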

Representation and Storage of Information

Qubits represent information in quantum states, which can be superpositions and can be entangled across several qubits, unlike classical bits that each store a single binary value of 0 or 1. Describing the joint state of n qubits takes 2^n complex amplitudes, and quantum algorithms exploit this large state space. The amplitudes cannot be read out directly, however: measuring n qubits yields at most n classical bits of information. Physical qubits are built from fragile quantum systems such as trapped ions or superconducting circuits, which need specialized environments to maintain coherence and prevent information loss.
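
One way to make the scaling concrete is to count what a classical simulation has to store: the joint state of n qubits is described by 2^n complex amplitudes, even though a measurement returns only n classical bits. The sketch below assumes 16 bytes per amplitude (two 64-bit floats), which is a common simulator choice but an assumption here; the function names are illustrative.

```python
def amplitude_count(n_qubits):
    """Number of complex amplitudes in the state vector of n qubits."""
    return 2 ** n_qubits

def simulation_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a dense state vector; 16 bytes per amplitude is assumed."""
    return amplitude_count(n_qubits) * bytes_per_amplitude

for n in (1, 10, 30, 50):
    print(n, "qubits:", amplitude_count(n), "amplitudes,",
          simulation_bytes(n), "bytes; readout yields", n, "bits")
```

Under these assumptions, 30 qubits already need 16 GiB for a dense state vector, which is why classical simulation of larger registers becomes impractical.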

Quantum Superposition vs Binary States

Quantum bits, or qubits, can occupy a superposition of 0 and 1, unlike classical bits, which are restricted to one of the two binary states. Superposition alone does not make a computation faster, because a measurement returns only one outcome. Quantum algorithms gain their advantage by arranging for the amplitudes of wrong answers to cancel and those of right answers to reinforce, an effect called interference. Superposition combined with interference is the basis of what is often called quantum parallelism.
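
A small worked example may help here. The Hadamard gate is the standard single-qubit gate for creating an equal superposition, and applying it twice shows interference: the two paths leading to 1 cancel and the qubit returns to 0. The helper `apply` is a hypothetical name for a plain 2x2 matrix-vector product.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S],
     [S, -S]]  # Hadamard gate

def apply(gate, state):
    """Multiply a 2x2 gate by a 2-element state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

zero = [1.0, 0.0]
plus = apply(H, zero)   # equal superposition of 0 and 1
back = apply(H, plus)   # amplitudes for 1 cancel; state returns to 0

probs_plus = [abs(a) ** 2 for a in plus]
probs_back = [abs(a) ** 2 for a in back]
print(probs_plus, probs_back)
```

A classical coin flipped twice stays random; the qubit does not, because amplitudes can be negative and cancel while probabilities cannot.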

Quantum Entanglement and Its Implications

Quantum entanglement has no counterpart in classical bits. Entangled qubits share a joint state that cannot be described qubit by qubit, so their measurement outcomes are correlated regardless of the distance between them. These correlations cannot be used to send information faster than light, because each individual outcome is still random. Entanglement is distinct from superposition, but the two work together: most quantum algorithms rely on entangled superpositions across many qubits, which classical bits cannot reproduce. Entanglement also underpins secure quantum communication protocols, such as entanglement-based quantum key distribution, and other applications in cryptography and information processing.
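
The correlation can be illustrated with the simplest entangled state, a Bell state, prepared by a Hadamard gate followed by a CNOT. The sketch below tracks the four amplitudes of a two-qubit state in the order 00, 01, 10, 11; the function names and the seeded random generator are choices made for this example only.

```python
import math
import random

def apply_h_on_first(state):
    """Hadamard on the first qubit of a 2-qubit state [a00, a01, a10, a11]."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]

def apply_cnot(state):
    """CNOT with the first qubit as control: swaps the 10 and 11 amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def sample(state, rng):
    """Measure both qubits once and return the pair of bits."""
    r = rng.random()
    cumulative = 0.0
    for index, amplitude in enumerate(state):
        cumulative += abs(amplitude) ** 2
        if r < cumulative:
            return index >> 1, index & 1
    return 1, 1  # guard against floating-point rounding

bell = apply_cnot(apply_h_on_first([1.0, 0.0, 0.0, 0.0]))
rng = random.Random(42)
shots = [sample(bell, rng) for _ in range(1000)]
print(bell, sorted(set(shots)))
```

Each qubit on its own looks like a fair coin, yet the two bits always agree. Neither party can choose the outcome, which is why the correlation carries no message by itself.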

Processing Power: Quantum vs Classical Computation

Quantum computation acts on qubits with gates that build up superposition and entanglement and then uses interference to raise the probability of measuring a correct answer. For specific problems this yields large speedups: Shor's algorithm factors large integers in polynomial time, whereas the best known classical algorithms take super-polynomial time, and quantum simulation of molecular interactions avoids the exponentially large memory that an exact classical simulation needs. Other algorithms, such as Grover's search, offer a quadratic rather than exponential speedup, and for many tasks no quantum advantage is known. Classical computation is not limited to sequential processing, since classical hardware can be massively parallel, but no efficient classical method is known for tracking the interference of exponentially many amplitudes.
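
Grover's search algorithm gives a compact picture of how interference, rather than brute-force parallelism, produces a quantum speedup. The sketch below simulates one Grover iteration over four items using real amplitudes; the marked index `2` is a hypothetical choice, and the code is a classical simulation of the amplitudes, not a program for quantum hardware.

```python
def grover_iteration(amplitudes, marked):
    """One Grover step: oracle phase flip, then inversion about the mean."""
    flipped = [-a if i == marked else a for i, a in enumerate(amplitudes)]
    mean = sum(flipped) / len(flipped)
    return [2 * mean - a for a in flipped]

n_items = 4
marked = 2                    # hypothetical index of the item being searched for
state = [0.5] * n_items       # uniform superposition: each amplitude is 1/sqrt(4)
state = grover_iteration(state, marked)
probs = [a * a for a in state]
print(probs)
```

For four items, a single oracle call concentrates all of the probability on the marked item, whereas a classical search needs more than one lookup on average. For N items Grover's algorithm needs on the order of sqrt(N) oracle calls, which is a quadratic speedup.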

Error Rates and Stability

Qubits have significantly higher error rates than classical bits because they are susceptible to decoherence and quantum noise, which limits the reliability of quantum computation. Quantum error correction and fault-tolerant protocols are critical for mitigating these instabilities. Stability remains a major challenge: coherence times are often measured in microseconds to milliseconds for superconducting circuits and can reach seconds or longer for trapped ions, whereas classical bits hold their values almost perfectly over extended periods.
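
To see why coherence time matters, a rough estimate is sketched below. It assumes the simplest possible model, in which coherence decays as exp(-t / T2), and uses hypothetical numbers (a T2 of 100 microseconds and a gate time of 0.05 microseconds); real devices have more complicated noise, so the result is an order-of-magnitude illustration only.

```python
import math

def coherence_remaining(t_us, t2_us):
    """Fraction of coherence left after t_us microseconds (assumed exponential decay)."""
    return math.exp(-t_us / t2_us)

def max_gate_count(t2_us, gate_time_us, min_coherence=0.99):
    """Sequential gates that fit before coherence drops below min_coherence."""
    budget_us = -t2_us * math.log(min_coherence)
    return int(budget_us / gate_time_us)

T2_US = 100.0         # hypothetical coherence time
GATE_TIME_US = 0.05   # hypothetical gate duration

print(coherence_remaining(10.0, T2_US))
print(max_gate_count(T2_US, GATE_TIME_US))
```

Under these assumptions only a few dozen sequential gates fit before one percent of the coherence is lost, which is why long computations depend on error correction rather than on raw qubit stability.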

Applications in Modern Computing

Qubits allow quantum computers to run simulations and cryptographic algorithms that are impractical with classical bits, with potential applications in drug discovery, financial modeling, and optimization. Classical bits remain fundamental for everyday computing tasks, data storage, and conventional software because of their stability and widespread infrastructure. The quantum advantage is confined to specific problems, and its size ranges from quadratic to super-polynomial depending on the algorithm.

Future of Quantum and Classical Information Units

Quantum bits (qubits) use superposition and entanglement to run certain computations far faster than is possible with classical bits, which represent data as binary 0s or 1s. The future of computing is likely to involve hybrid systems that integrate quantum and classical information units, with possible gains in cryptography, optimization, and machine learning. As qubit coherence times improve and error correction advances, quantum units are expected to increasingly complement traditional bits on problems beyond practical classical reach.

Qubit vs Bit: Challenges and Opportunities

Qubits, unlike classical bits, use quantum superposition and entanglement to perform computations that are impractical classically, presenting opportunities for breakthroughs in cryptography and optimization problems. However, qubits face significant challenges such as decoherence, high error rates, and, on platforms like superconducting circuits, the need for extremely low temperatures to maintain quantum states, all of which limit scalability and practical implementation. Advances in quantum error correction and hardware development are critical to overcoming these obstacles and unlocking the full potential of quantum computing compared to traditional binary systems.

Quantum Superposition

Quantum superposition lets a qubit hold a weighted combination of 0 and 1, described by two complex amplitudes, whereas a classical bit exists only as 0 or 1. Combined with interference, it gives certain quantum algorithms their speedup.

Quantum Entanglement

Quantum entanglement links qubits into correlated joint states that cannot be described independently, so the state of n entangled qubits takes up to 2^n amplitudes to describe, although measurement still yields only n classical bits.

Classical Logic Gates

Classical logic gates such as AND, OR, and NOT process bits in the binary states 0 and 1, while quantum gates act on qubits and can create superposition and entanglement, which classical binary logic cannot represent directly.

Quantum Decoherence

Quantum decoherence limits qubit stability: unwanted interaction with the environment destroys superposition and entanglement and so causes loss of quantum information, whereas classical bits have no such quantum coherence to lose.

Quantum Error Correction

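Quantum error correction protocols encode one logical qubit across many physical qubits so that errors can be detected and corrected without measuring the encoded information directly. Qubit error rates are far higher than those of classical bits, which makes error correction essential for reliable large-scale quantum computing.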
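
The idea of spreading one logical value over several physical carriers can be sketched with the three-copy repetition code, the classical ancestor of the quantum bit-flip code. Note the simplification: this sketch reads the copies directly and takes a majority vote, which a quantum code cannot do; a real quantum code measures parity checks instead. For independent bit flips with probability `p`, the logical failure rate is the same in both cases. All names here are illustrative.

```python
import random

def encode(bit):
    """Store one logical bit as three physical copies."""
    return [bit, bit, bit]

def apply_noise(codeword, p, rng):
    """Flip each copy independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in codeword]

def decode(codeword):
    """Majority vote over the three copies."""
    return 1 if sum(codeword) >= 2 else 0

def logical_error_rate(p):
    """Exact failure probability: two or three of the three copies flip."""
    return 3 * p ** 2 * (1 - p) + p ** 3

def simulate(p, trials, rng):
    """Monte Carlo estimate of the logical failure rate for logical 0."""
    failures = sum(decode(apply_noise(encode(0), p, rng)) != 0
                   for _ in range(trials))
    return failures / trials

p = 0.05  # hypothetical physical error probability
print(logical_error_rate(p), simulate(p, 20000, random.Random(1)))
```

Encoding helps only while the physical error probability is below a threshold (here one half); above it, the redundancy makes things worse. Quantum codes have an analogous, much lower, threshold.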

Reversible Computing

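Quantum gates are unitary and therefore reversible: every gate has an inverse that recovers its input, so no information is erased until measurement. Irreversible classical logic operations such as AND discard information, which by Landauer's principle has a minimum energy cost. Reversible computing is also possible with classical bits.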
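
Reversibility can be checked directly on truth tables. In this sketch, a gate counts as reversible if distinct inputs always give distinct outputs; CNOT (restricted here to classical 0/1 inputs) passes the check and undoes itself, while AND does not. The function names are illustrative.

```python
from itertools import product

def cnot(a, b):
    """Controlled-NOT on classical inputs: flips b when a is 1."""
    return a, a ^ b

def and_gate(a, b):
    """Classical AND: two input bits, one output bit."""
    return a & b

inputs = list(product((0, 1), repeat=2))
cnot_outputs = [cnot(a, b) for a, b in inputs]
and_outputs = [and_gate(a, b) for a, b in inputs]

# Reversible means every input maps to a distinct output.
cnot_is_reversible = len(set(cnot_outputs)) == len(inputs)
and_is_reversible = len(set(and_outputs)) == len(inputs)
cnot_self_inverse = all(cnot(*cnot(a, b)) == (a, b) for a, b in inputs)
print(cnot_is_reversible, and_is_reversible, cnot_self_inverse)
```

AND maps three different inputs to the same output 0, so the input cannot be recovered from the output; that lost information is what makes the operation irreversible.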

Bloch Sphere

The Bloch sphere is a geometric representation of a single qubit's state: the north and south poles correspond to 0 and 1, and every other point on the surface is a superposition of the two. A classical bit can occupy only the two poles.
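
The mapping between a point on the sphere and a qubit state follows the standard parametrization |psi> = cos(theta/2)|0> + e^(i phi) sin(theta/2)|1>, where theta is the polar angle and phi the azimuthal angle. The sketch below converts angles to amplitudes and back to Cartesian coordinates; the function names are made up for this example.

```python
import cmath
import math

def state_from_angles(theta, phi):
    """Amplitudes (alpha, beta) for polar angle theta and azimuth phi."""
    return math.cos(theta / 2), cmath.exp(1j * phi) * math.sin(theta / 2)

def bloch_vector(alpha, beta):
    """Cartesian coordinates (x, y, z) of a normalized pure state."""
    overlap = alpha.conjugate() * beta
    x = 2 * overlap.real
    y = 2 * overlap.imag
    z = abs(alpha) ** 2 - abs(beta) ** 2
    return x, y, z

north = bloch_vector(*state_from_angles(0.0, 0.0))            # the state 0
south = bloch_vector(*state_from_angles(math.pi, 0.0))        # the state 1
equator = bloch_vector(*state_from_angles(math.pi / 2, 0.0))  # equal superposition
print(north, south, equator)
```

States on the equator all give 0 or 1 with equal probability when measured; they differ only in the phase phi, which is invisible to a single measurement in this basis but matters for interference.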

Quantum Gate

Quantum gates are reversible (unitary) operations that manipulate qubits and can create superposition and entanglement, which conventional logic gates acting on classical bits cannot do.

State Collapse

A qubit in superposition has no definite value until it is measured; measurement collapses the state to a definite 0 or 1, with probabilities set by the amplitudes. A classical bit is always in a single definite state.

Quantum Measurement

Quantum measurement collapses a qubit's superposition state into a definite bit value, distinguishing qubits from classical bits that inherently hold a fixed state.

Qubit vs Bit Infographic

njnir.com
