AI accelerators are specialized hardware designed to run machine learning workloads with higher efficiency and lower power consumption than general-purpose GPUs. GPUs offer flexibility and strong performance across a wide range of parallel processing tasks, while AI accelerators use tailored architectures that speed up specific neural network operations, reducing latency and increasing throughput. This specialization makes AI accelerators well suited to embedded systems and edge devices, where energy efficiency and real-time inference are critical.

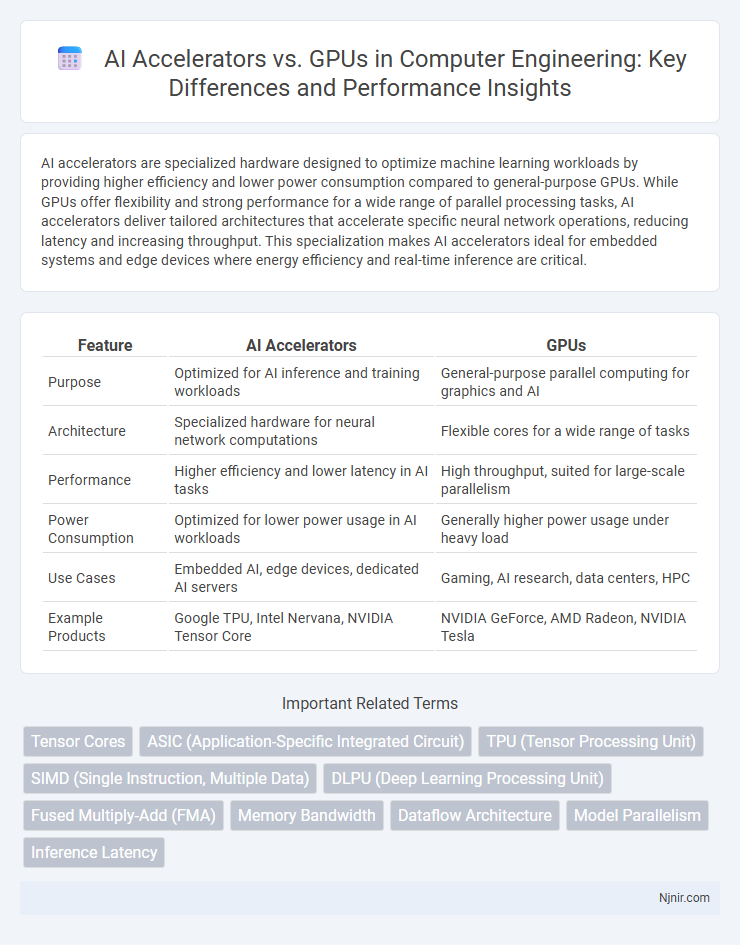

Table of Comparison

| Feature | AI Accelerators | GPUs |
|---|---|---|
| Purpose | Optimized for AI inference and/or training workloads | General-purpose parallel computing for graphics and AI |
| Architecture | Specialized hardware for neural network computations | Flexible cores for a wide range of tasks |
| Performance | Often higher efficiency and lower latency on the AI tasks they target | High throughput, suited for large-scale parallelism |
| Power Consumption | Typically lower power usage on AI workloads | Generally higher power usage under heavy load |
| Use Cases | Embedded AI, edge devices, dedicated AI servers | Gaming, AI research, data centers, HPC |
| Example Products | Google TPU, Intel Nervana NNP (discontinued) | NVIDIA GeForce, AMD Radeon, NVIDIA Tesla (recent NVIDIA GPUs include Tensor Cores for matrix math) |

Introduction to AI Accelerators and GPUs

AI accelerators are specialized hardware that speeds up machine learning tasks through massive parallelism and reduced latency, often beating general-purpose GPUs in efficiency and speed on specific AI workloads. GPUs, originally developed for rendering graphics, have become versatile processors widely adopted for AI because of their high throughput on the matrix operations at the heart of neural networks. Both architectures target deep learning performance, but accelerators place more emphasis on energy efficiency and low-level optimization tailored to AI models.

Architectural Differences: AI Accelerators vs GPUs

AI accelerators are specialized hardware designed for efficient matrix multiplication and deep learning operations, often built around dedicated structures such as systolic arrays. GPUs instead use a versatile parallel processing architecture originally optimized for graphics rendering and general-purpose computation, although modern NVIDIA GPUs narrow the gap with Tensor Cores, dedicated matrix units inside the GPU. Many AI accelerators employ dataflow architectures that minimize memory movement and maximize throughput for neural network workloads, in contrast to the SIMD-style execution of GPUs (which NVIDIA calls SIMT), designed to handle diverse computational tasks. The memory hierarchy in AI accelerators is tightly integrated with the compute units to reduce latency and power consumption, while GPUs rely on more flexible but less specialized memory systems that support a broad range of applications.
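
The systolic-array idea can be illustrated with a small cycle-by-cycle simulation. The sketch below assumes a textbook output-stationary design, chosen for clarity: each processing element (PE) keeps one output value in place while rows of A flow right and columns of B flow down. Production chips such as Google's TPU use other dataflows (for example keeping weights stationary). The function name `systolic_matmul` is hypothetical, and its loops run sequentially where the hardware updates every PE in parallel.

```python
def systolic_matmul(A, B):
    """Simulate an output-stationary systolic array computing C = A @ B.

    PE (i, j) keeps C[i][j] in place; rows of A flow left-to-right and
    columns of B flow top-to-bottom, skewed by one cycle per row/column
    so that matching operands meet at the right PE on the right cycle.
    """
    n, m, p = len(A), len(B), len(B[0])  # A is n x m, B is m x p
    acc = [[0] * p for _ in range(n)]    # stationary partial sums
    a_reg = [[0] * p for _ in range(n)]  # A operand held by each PE this cycle
    b_reg = [[0] * p for _ in range(n)]  # B operand held by each PE this cycle
    for t in range(n + m + p - 2):       # cycles until the last operands meet
        # Pass A values one PE to the right (iterate right-to-left so nothing is overwritten early).
        for i in range(n):
            for j in range(p - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        # Pass B values one PE down (iterate bottom-to-top for the same reason).
        for j in range(p):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
        # Feed skewed inputs at the edges: row i of A starts i cycles late, column j of B j cycles late.
        for i in range(n):
            k = t - i
            a_reg[i][0] = A[i][k] if 0 <= k < m else 0
        for j in range(p):
            k = t - j
            b_reg[0][j] = B[k][j] if 0 <= k < m else 0
        # Every PE performs one multiply-accumulate per cycle.
        for i in range(n):
            for j in range(p):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The skew is what makes the array work without any PE fetching from memory: at cycle `t`, PE (i, j) sees `A[i][k]` and `B[k][j]` for `k = t - i - j`, so each operand is read from memory once and then reused as it moves through the grid.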

Performance Comparison in AI Workloads

AI accelerators, such as TPUs and dedicated neural processing units, can outperform GPUs on AI workloads because their architecture is specialized for matrix multiplication and tensor operations. GPUs provide versatility and strong parallel processing, excelling in tasks that require high computational throughput and flexibility across many kinds of AI models. Published benchmarks often show accelerators achieving lower latency and higher energy efficiency on the workloads they were designed for, but results depend heavily on the model, numeric precision, batch size, and software stack, and high-end GPUs remain competitive for large-scale training.

Power Efficiency and Thermal Considerations

AI accelerators typically deliver higher computational throughput per watt than GPUs, enabling sustained operation under the constrained energy budgets typical of edge and mobile AI applications. These chips use architectures and low-precision arithmetic (for example 8-bit integer math) tailored to AI workloads, which reduces heat generation compared with GPUs, which are traditionally designed for diverse, high-power computing tasks. Thermal management for low-power accelerators is often simpler, allowing more compact cooling solutions and quieter system designs, whereas GPUs often require robust cooling to handle their higher thermal output.
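
To illustrate low-precision arithmetic, here is a minimal sketch of symmetric 8-bit quantization applied to a dot product. It assumes a single scale per vector (real toolchains often use per-channel scales, zero points, and calibration data), and the function names are hypothetical. Floats are mapped to integers in [-127, 127], multiplied and summed as integers, and rescaled once at the end.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers sharing one scale factor (symmetric quantization)."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for int8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # round() rounds to nearest (ties to even); clamp to the representable range
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantized_dot(x, w, num_bits=8):
    """Approximate dot(x, w) with integer multiplies and a single rescale at the end."""
    qx, sx = quantize_symmetric(x, num_bits)
    qw, sw = quantize_symmetric(w, num_bits)
    acc = sum(a * b for a, b in zip(qx, qw))  # hardware would accumulate in int32
    return acc * sx * sw


x = [0.5, -1.2, 3.3, 0.0]
w = [1.0, 0.25, -0.75, 2.0]
exact = sum(a * b for a, b in zip(x, w))  # about -2.275
print(exact, quantized_dot(x, w))
```

For these inputs the quantized result lands within a few hundredths of the exact value. That small error is the price of replacing floating-point multiplies with integer ones, which need far less silicon area and energy per operation.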

Programming Models and Software Ecosystems

AI accelerators are often programmed through specialized toolchains such as TensorFlow Lite, compilers such as Apache TVM, or vendor-specific APIs tailored to the hardware, which enable highly optimized neural network inference with low latency and power consumption. GPUs rely on mature, versatile ecosystems such as CUDA and ROCm that support a wide range of AI frameworks, including TensorFlow, PyTorch, and MXNet, and offer extensive developer tools and libraries for deep learning training and inference. Accelerator software ecosystems tend to be more specialized and less flexible but can deliver better performance on targeted AI workloads, while GPUs offer broader programming-model support and a more mature ecosystem for diverse AI applications.

Scalability and Integration in Data Centers

AI accelerators can scale efficiently in data centers because their architecture is designed specifically for machine learning workloads, enabling efficient parallel processing at lower power than general-purpose GPUs. Integrating them into existing data center infrastructure, however, often requires tailored software frameworks and attention to hardware compatibility, whereas GPUs benefit from broader ecosystem support and established integration tools. Once those hurdles are addressed, accelerators let data centers deploy large-scale AI models with good performance per watt, helping expand AI capacity without a proportional rise in operational costs.

Cost Analysis: Total Cost of Ownership

AI accelerators can offer a lower total cost of ownership (TCO) than GPUs because their architecture, specialized for machine learning workloads, reduces energy consumption and improves efficiency. GPUs provide versatility across many applications, but their higher power usage and cooling requirements raise operating expenses over time. Once hardware acquisition, maintenance, and power costs are factored in, AI accelerators can deliver more cost-effective performance for large-scale, intensive AI deployments, provided the workloads match what the accelerator was designed for.
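
A TCO comparison ultimately comes down to arithmetic. The sketch below adds purchase price, lifetime energy cost, and maintenance. The function name and every number in the example call are hypothetical placeholders. It assumes a constant average power draw and uses PUE (power usage effectiveness, the ratio of total facility power to IT power) to account for cooling overhead.

```python
def total_cost_of_ownership(purchase_price, avg_power_watts, years,
                            price_per_kwh, pue=1.5, annual_maintenance=0.0):
    """Hypothetical TCO model: purchase + energy (scaled by PUE) + maintenance."""
    hours = years * 365 * 24
    # PUE scales the device's own power up to include cooling and other facility load
    energy_kwh = avg_power_watts / 1000 * hours * pue
    energy_cost = energy_kwh * price_per_kwh
    return purchase_price + energy_cost + annual_maintenance * years


# Hypothetical inputs: a $10,000 device drawing 300 W on average for 3 years at $0.10/kWh
print(total_cost_of_ownership(10_000, 300, 3, 0.10, pue=1.5, annual_maintenance=500))  # ≈ 12682.6
```

With these made-up inputs the energy term is about $1,183 on top of the $10,000 purchase and $1,500 of maintenance. Running the same function with each candidate device's actual power draw and price, then dividing by the work each completes, gives a like-for-like cost per unit of work.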

Application Suitability: Edge vs Cloud Deployments

Many AI accelerators, such as the neural processing units (NPUs) in mobile chips, target edge deployments, offering efficient, low-power inference for real-time applications in smartphones, IoT devices, and autonomous systems; others, such as Google's TPUs, are built for the data center. GPUs excel in cloud environments, where high-throughput training and large-scale model processing demand extensive parallelism and memory bandwidth. The choice between AI accelerators and GPUs therefore depends heavily on deployment context: edge inference often favors accelerators, while cloud-based training and large-scale inference often favor GPUs.

Future Trends in AI Hardware Development

AI accelerators may surpass traditional GPUs in efficiency and specialization by using custom architectures tailored to machine learning workloads. Current trends point to growth in domain-specific accelerators optimized for neural networks, such as tensor processing units (TPUs) and neuromorphic chips. Advances in semiconductor manufacturing, including 3 nm and smaller process nodes, are expected to improve power efficiency and computational density, shaping the next generation of AI hardware.

Choosing the Right Hardware for AI Applications

AI accelerators optimize machine learning tasks with specialized architectures such as systolic arrays, often delivering better efficiency and lower latency than general-purpose GPUs on the workloads they target. GPUs excel in parallel processing and versatility, supporting AI workloads from large-model training to inference (recent NVIDIA GPUs add Tensor Cores for matrix math), but they often consume more power and generate more heat. The right choice depends on the application's requirements, including model complexity, latency constraints, power budget, and cost: accelerators are often a good fit for inference-focused tasks, and GPUs for extensive training.

Tensor Cores

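Tensor Cores are dedicated matrix multiply-accumulate units that NVIDIA has built into its GPUs since the Volta architecture. Rather than distinguishing accelerators from GPUs, they bring accelerator-style matrix hardware into the GPU, substantially speeding up deep learning training and inference compared with running the same matrix math on the GPU's general-purpose cores.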
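
The numerics of a Tensor Core operation, D = A × B + C on a small tile, can be emulated in plain Python. The sketch below assumes the mixed-precision mode in which inputs are rounded to FP16 and products are accumulated in FP32 (Volta-generation Tensor Cores, for instance, multiply 4x4 FP16 tiles and can accumulate in FP32). It models rounding behavior only, not speed, and all function names are hypothetical. Rounding uses the standard `struct` module's `'e'` (half) and `'f'` (single) formats.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mma_fp16_fp32(A, B, C):
    """Emulate D = A @ B + C on one square tile: FP16 inputs, FP32 accumulation."""
    n = len(A)
    D = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(C[i][j])
            for k in range(n):
                # The product of two FP16 values is exact in FP32 (11-bit x 11-bit significands),
                # so only the running sum needs rounding back to FP32.
                acc = to_fp32(acc + to_fp16(A[i][k]) * to_fp16(B[k][j]))
            D[i][j] = acc
    return D


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
B = [[float(4 * i + j) for j in range(4)] for i in range(4)]
C = [[1.0] * 4 for _ in range(4)]
print(mma_fp16_fp32(identity, B, C)[0])  # [1.0, 2.0, 3.0, 4.0]
print(to_fp16(0.1))                      # 0.0999755859375
```

The last line shows why the accumulator is kept wider than the inputs: FP16 holds only about three significant decimal digits, so summing many FP16 products in FP16 would compound rounding error quickly.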

ASIC (Application-Specific Integrated Circuit)

ASIC-based AI accelerators can deliver higher efficiency and performance than GPUs by hardwiring their datapaths for machine learning workloads, which reduces power consumption and latency; the trade-off is less flexibility when models or operators change.

TPU (Tensor Processing Unit)

TPUs (Tensor Processing Units) are AI accelerators designed by Google to run deep learning workloads, delivering higher performance and energy efficiency than general-purpose GPUs on the tensor operations they target.

SIMD (Single Instruction, Multiple Data)

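SIMD execution applies one instruction to many data elements at once. GPUs run threads in lockstep groups (NVIDIA calls this SIMT), so when threads in a group take different branches, some lanes sit idle. Neural network kernels are mostly dense, branch-free arithmetic, so AI accelerators can replace programmable SIMD lanes with wide, fixed datapaths that spend less energy on instruction fetch and control, which is one source of their efficiency on these workloads.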
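
The lockstep constraint can be made concrete with a simulation of how a SIMD/SIMT group executes an if/else. Each branch body is issued once for the whole group, with a mask disabling the lanes that did not take it. The function is a hypothetical teaching model that counts issued branch bodies rather than real instructions.

```python
def simd_if_else(lanes, predicate, then_op, else_op):
    """Execute an if/else across SIMD lanes the way a lockstep machine must.

    All lanes share one instruction stream, so each branch body is issued once
    for the whole group, with a mask disabling lanes that did not take it.
    Returns the per-lane results and how many branch bodies were issued.
    """
    mask = [bool(predicate(x)) for x in lanes]
    result = list(lanes)
    passes = 0
    if any(mask):        # at least one lane takes the "then" path
        passes += 1
        result = [then_op(x) if m else r for x, m, r in zip(lanes, mask, result)]
    if not all(mask):    # at least one lane takes the "else" path
        passes += 1
        result = [else_op(x) if not m else r for x, m, r in zip(lanes, mask, result)]
    return result, passes


double_or_negate = (lambda x: x > 0, lambda x: 2 * x, lambda x: -x)
print(simd_if_else([1, 2, 3, 4], *double_or_negate))    # ([2, 4, 6, 8], 1)
print(simd_if_else([1, -2, 3, -4], *double_or_negate))  # ([2, 2, 6, 4], 2)
```

When all lanes agree, only one body is issued. When they diverge, both bodies run and half the lanes idle during each, halving useful throughput for that branch.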

DLPU (Deep Learning Processing Unit)

A DLPU (Deep Learning Processing Unit) has an architecture tailored to neural network computations, which can give it higher efficiency and lower latency than a general-purpose GPU on AI accelerator tasks.

Fused Multiply-Add (FMA)

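A fused multiply-add (FMA) computes a × b + c as a single operation with one rounding step instead of two, improving both speed and accuracy. FMA is the core operation of matrix multiplication, and both GPUs and AI accelerators execute it massively in parallel; accelerators aim to pack more FMA units per watt and feed them with less data movement, which can lower latency for deep learning workloads.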
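
The accuracy benefit of a single rounding shows up even with ordinary doubles. This sketch emulates FMA by computing a × b + c exactly with `fractions.Fraction` and rounding only once, when converting back to `float` (Python 3.13 and later also provide `math.fma`). The helper names are hypothetical, and the inputs are chosen so that the separately rounded product loses a tiny term.

```python
from fractions import Fraction


def fma_emulated(a, b, c):
    """a * b + c with a single rounding: exact rational arithmetic, then one float conversion."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))


def mul_then_add(a, b, c):
    """a * b + c with two roundings: the product is rounded before the add."""
    return a * b + c


a, b, c = 1 + 2**-30, 1 - 2**-30, -1.0
print(mul_then_add(a, b, c))  # 0.0: a*b = 1 - 2**-60 rounds to 1.0 before the add
print(fma_emulated(a, b, c))  # -2**-60, a tiny but nonzero result
```

Matrix multiplication is a long chain of such multiply-add steps, so keeping the intermediate product unrounded reduces the error that accumulates across each dot product.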

Memory Bandwidth

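Many AI accelerators pair their compute units with high-bandwidth memory (HBM) or large on-chip SRAM to keep them supplied with data during deep learning training and inference. High-end data center GPUs also use HBM, so whether an accelerator offers more memory bandwidth than a GPU depends on the specific products being compared.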
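
Whether bandwidth or compute limits a kernel can be estimated with the roofline model: attainable throughput is the smaller of peak compute and memory bandwidth times arithmetic intensity (FLOPs performed per byte moved). The sketch assumes idealized traffic (each matrix crosses the memory bus exactly once). The function names are hypothetical, and the device figures are round numbers chosen for illustration, not any real product's specifications.

```python
def attainable_flops(peak_flops, mem_bandwidth, intensity):
    """Roofline model: performance is capped by compute or by memory traffic, whichever binds first.

    peak_flops: peak arithmetic rate (FLOP/s)
    mem_bandwidth: memory bandwidth (bytes/s)
    intensity: arithmetic intensity of the kernel (FLOPs per byte moved)
    """
    return min(peak_flops, mem_bandwidth * intensity)


def matmul_intensity(n, bytes_per_elem=2):
    """n x n by n x n matmul, assuming each matrix crosses the memory bus exactly once."""
    flops = 2 * n ** 3                        # one multiply + one add per inner-product term
    bytes_moved = 3 * n * n * bytes_per_elem  # read A and B, write C
    return flops / bytes_moved


def matvec_intensity(n, bytes_per_elem=2):
    """n x n matrix times a length-n vector: every weight is used only once."""
    flops = 2 * n * n
    bytes_moved = (n * n + 2 * n) * bytes_per_elem  # read matrix and x, write y
    return flops / bytes_moved


# Hypothetical device: 100 TFLOP/s peak, 1 TB/s memory bandwidth (illustrative numbers only)
PEAK, BW = 100e12, 1e12
print(attainable_flops(PEAK, BW, matmul_intensity(4096)))  # compute-bound: equals PEAK
print(attainable_flops(PEAK, BW, matvec_intensity(4096)))  # memory-bound: just under 1e12
```

The large matrix multiply performs about 1,365 FLOPs per byte and reaches the compute ceiling. The matrix-vector product, typical of small-batch inference, performs about one FLOP per byte and is limited by bandwidth, which is why memory systems matter so much for inference.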

Dataflow Architecture

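A dataflow architecture maps a neural network's computations onto the hardware and streams data between processing elements, reusing values already on chip and reducing data movement. This can give AI accelerators better energy efficiency and lower latency than traditional GPUs on deep learning workloads.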
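
The savings from on-chip reuse can be counted directly. The sketch below computes a matrix product in square tiles and tallies how many elements are fetched from off-chip memory. It uses a simplified, hypothetical model in which each tile is copied into an on-chip buffer for every use and nothing else is cached, and it assumes the matrix size is a multiple of the tile size.

```python
def tiled_matmul_with_traffic(A, B, tile):
    """Tiled n x n matmul that also counts elements loaded from off-chip memory."""
    n = len(A)
    assert n % tile == 0, "this sketch assumes n is a multiple of the tile size"
    C = [[0] * n for _ in range(n)]
    loads = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # Copy one tile of A and one tile of B into local buffers (models on-chip SRAM).
                a_tile = [row[k0:k0 + tile] for row in A[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in B[k0:k0 + tile]]
                loads += 2 * tile * tile
                # Every loaded value is reused `tile` times from the local buffers.
                for i in range(tile):
                    for j in range(tile):
                        s = 0
                        for k in range(tile):
                            s += a_tile[i][k] * b_tile[k][j]
                        C[i0 + i][j0 + j] += s
    return C, loads


A = [[i + j for j in range(8)] for i in range(8)]
B = [[(i * j) % 5 for j in range(8)] for i in range(8)]
for tile in (1, 2, 4, 8):
    _, loads = tiled_matmul_with_traffic(A, B, tile)
    print(tile, loads)  # loads = 2 * 8**3 / tile
```

For 8x8 matrices, fetching element by element (`tile=1`) costs 1,024 loads, while 4x4 tiles cost 256. Traffic falls in proportion to the tile size because each loaded value is reused `tile` times. Dataflow accelerators are designed to exploit exactly this kind of reuse.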

Model Parallelism

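Model parallelism splits a network that is too large for one device across several devices, either by dividing individual layers or by assigning different layers to different devices. AI accelerators can support this with specialized hardware tailored to distributing and executing large neural network layers efficiently, which may give them better scalability and energy efficiency than general-purpose GPUs.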
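
One common form, intra-layer (tensor) model parallelism, fits in a few lines. The rows of a layer's weight matrix, one per output neuron, are split across devices, each device computes its slice of the output, and the slices are concatenated. Here the devices are simulated by a loop, the function names are hypothetical, and the concatenation stands in for the all-gather that real systems perform over an interconnect.

```python
def matvec(W, x):
    """Dense layer without bias: y[r] = sum over c of W[r][c] * x[c]."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def shard_rows(W, num_devices):
    """Split the layer's output neurons (rows of W) into contiguous shards, one per device."""
    size = -(-len(W) // num_devices)  # ceiling division
    return [W[i:i + size] for i in range(0, len(W), size)]


def model_parallel_matvec(W, x, num_devices):
    """Each simulated device computes its slice of the output; slices are then concatenated."""
    shards = shard_rows(W, num_devices)
    partials = [matvec(shard, x) for shard in shards]  # would run concurrently on real hardware
    out = []
    for part in partials:  # stands in for an all-gather across devices
        out.extend(part)
    return out


W = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 0, 1], [2, 2, 2]]
x = [1, -1, 2]
print(model_parallel_matvec(W, x, num_devices=2) == matvec(W, x))  # True
```

Each device stores only its shard of `W`, which is the point: a layer too large for one device's memory can still be evaluated, at the cost of communicating the partial outputs.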

Inference Latency

Inference latency is the time from receiving an input to producing a prediction. AI accelerators can reduce it with hardware specialized for neural network computations, often responding faster and more efficiently than general-purpose GPUs.
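
Latency claims are best checked by measurement. The sketch below times repeated single-request calls to any inference function and reports the median and 99th-percentile latency. `toy_model` is a hypothetical stand-in for a real model, and the warm-up and run counts are arbitrary defaults.

```python
import statistics
import time


def measure_latency(infer, example_input, warmup=5, runs=100):
    """Time repeated single-request calls to `infer` and summarize latency in milliseconds."""
    for _ in range(warmup):  # let caches, JIT compilers, and clocks settle
        infer(example_input)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(example_input)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p99_index = min(runs - 1, round(0.99 * (runs - 1)))  # nearest-rank style index
    return {
        "p50_ms": statistics.median(samples),
        "p99_ms": samples[p99_index],
        "mean_ms": statistics.mean(samples),
    }


def toy_model(x):  # hypothetical stand-in for a real inference call
    return sum(v * v for v in x)


stats = measure_latency(toy_model, list(range(1000)))
print(stats)
```

On GPUs and many accelerators, work is queued asynchronously, so `infer` must wait for results before returning (in PyTorch, for example, by calling `torch.cuda.synchronize()`). Otherwise the timings measure only how long it takes to launch the work.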

AI accelerators vs GPUs Infographic

njnir.com
