DPUs (Data Processing Units) specialize in managing data-centric tasks such as networking, storage, and security, offloading these workloads from the CPU and improving system efficiency. GPUs (Graphics Processing Units) excel at parallel processing and are optimized for rendering graphics and accelerating compute-intensive applications like AI and machine learning. While GPUs focus on computation-heavy tasks, DPUs enhance data movement and infrastructure management, making them complementary components in modern data centers.

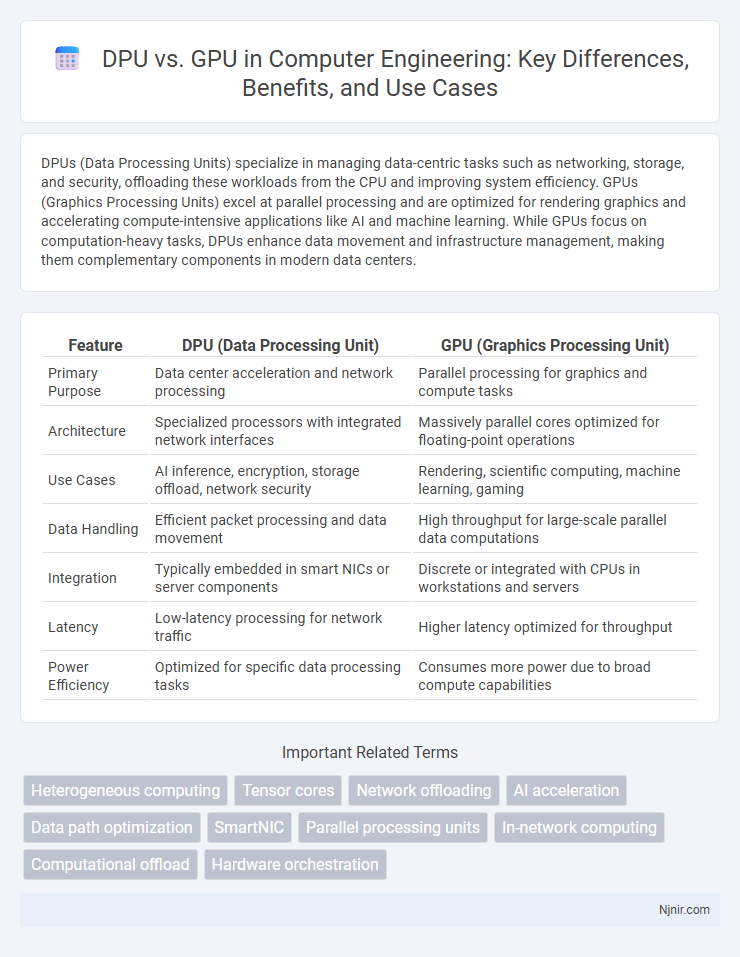

Table of Comparison

| Feature | DPU (Data Processing Unit) | GPU (Graphics Processing Unit) |
|---|---|---|
| Primary Purpose | Data center acceleration and network processing | Parallel processing for graphics and compute tasks |
| Architecture | Specialized processors with integrated network interfaces | Massively parallel cores optimized for floating-point operations |
| Use Cases | AI inference, encryption, storage offload, network security | Rendering, scientific computing, machine learning, gaming |
| Data Handling | Efficient packet processing and data movement | High throughput for large-scale parallel data computations |
| Integration | Typically embedded in smart NICs or server components | Discrete or integrated with CPUs in workstations and servers |
| Latency | Low-latency processing for network traffic | Higher latency optimized for throughput |
| Power Efficiency | Optimized for specific data processing tasks | Consumes more power due to broad compute capabilities |

Introduction to DPU and GPU

Data Processing Units (DPUs) are specialized processors designed to manage data-centric tasks such as networking, storage, and security offloading, optimizing system efficiency by handling data movement and processing independently of the CPU. Graphics Processing Units (GPUs) are parallel processors originally developed for rendering images and videos, now widely used for accelerating compute-intensive workloads in artificial intelligence, machine learning, and scientific simulations. While GPUs focus on parallel computation for large-scale data processing, DPUs enhance infrastructure performance through intelligent data handling and workload offloading.

Core Architectural Differences

A DPU (Data Processing Unit) is built around cores and accelerators optimized for data-centric tasks such as networking, storage, and security, whereas a GPU (Graphics Processing Unit) consists of massively parallel cores tailored for high-throughput graphics and compute workloads. DPU designs emphasize programmable hardware accelerators and offloading capabilities that improve data plane efficiency, while GPUs rely on a SIMD (Single Instruction, Multiple Data) style of execution, in which one instruction operates on many data elements at once, to process vectorized operations in parallel. DPUs integrate elements such as network interface controllers and programmable logic; GPUs instead emphasize floating-point performance and, for machine learning workloads, tensor cores.
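The SIMD idea can be illustrated with a minimal Python sketch. It only models lockstep execution in software: the function name `saxpy_lanes` and the lane width of 4 are assumptions chosen for illustration, not properties of any particular GPU, and real GPU code would be written in a framework such as CUDA or OpenCL.

```python
from typing import List


def saxpy_lanes(a: float, x: List[float], y: List[float], lanes: int = 4) -> List[float]:
    """Compute a * x[i] + y[i] for every i, one lane-wide group at a time.

    Each group models a set of hardware lanes that all execute the same
    multiply-add instruction, each on its own data element. The lane width
    of 4 is an illustrative assumption.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    result: List[float] = []
    for start in range(0, len(x), lanes):
        xs = x[start:start + lanes]
        ys = y[start:start + lanes]
        # Same instruction, multiple data: every lane does a * xi + yi.
        result.extend(a * xi + yi for xi, yi in zip(xs, ys))
    return result


if __name__ == "__main__":
    print(saxpy_lanes(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0] * 5))
```

On real hardware the lanes in a group run simultaneously rather than in a Python loop, which is where the throughput advantage comes from.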

Key Functions and Workloads

DPUs (Data Processing Units) specialize in offloading and accelerating data center networking, storage, and security tasks, enabling efficient data movement and infrastructure management. GPUs (Graphics Processing Units) excel in parallel processing workloads, such as AI model training, scientific simulations, and high-performance computing, by leveraging massive cores for intensive computation. While DPUs handle data-centric workloads like packet processing and encryption, GPUs focus on compute-heavy tasks, making each essential for specific aspects of modern cloud and enterprise environments.

Performance Comparison

DPUs (Data Processing Units) excel in offloading and accelerating data-centric tasks such as network processing, security, and storage management, resulting in reduced CPU load and enhanced overall system efficiency. GPUs (Graphics Processing Units) outperform DPUs in parallel processing of complex computations and graphics rendering, particularly in AI training, scientific simulations, and high-performance computing workloads. Performance comparison shows GPUs delivering superior floating-point computation throughput, while DPUs optimize data path performance and latency-sensitive networking tasks, making each ideal for specialized roles in modern data centers.

Energy Efficiency and Power Consumption

DPUs (Data Processing Units) and GPUs (Graphics Processing Units) affect power consumption in different ways. DPUs improve system-level energy efficiency by moving specialized networking and storage tasks off the CPU and onto hardware built for them, which reduces overall system power consumption. GPUs consume significantly more power because their parallel processing architecture is designed for high-performance computing and graphics rendering, which also leads to higher thermal output. In data center environments, DPU hardware acceleration for data movement helps lower energy costs and improve sustainability.

Integration in Modern Data Centers

DPUs (Data Processing Units) offer specialized hardware acceleration for data-centric tasks, enabling efficient offloading of networking, storage, and security functions from CPUs and GPUs. Unlike GPUs primarily designed for parallel compute workloads like AI and graphics rendering, DPUs integrate with modern data centers to enhance overall system performance by managing data movement and infrastructure tasks. This integration results in optimized resource utilization, reduced latency, and improved scalability in cloud and enterprise environments.

Programming Models and Ecosystems

DPUs (Data Processing Units) utilize specialized programming models such as P4, DPDK, and custom SDKs designed for network and data-centric tasks, whereas GPUs rely on CUDA, OpenCL, and ROCm ecosystems optimized for parallel computing and graphics workloads. The DPU ecosystem emphasizes offloading networking, storage, and security functions to dedicated hardware accelerators, enabling programmable data plane operations, while GPU ecosystems support extensive machine learning frameworks, scientific computing libraries, and graphics APIs. Developers targeting DPUs must adapt to domain-specific languages and real-time packet processing paradigms, contrasting with GPU programming models that focus on massive parallelism and compute-intensive algorithms.
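To give a flavor of the match-action style that data plane languages such as P4 are built around, here is a rough Python model of an exact-match table. It is a sketch for intuition only, not P4 syntax or any vendor SDK: the `Packet` and `MatchActionTable` names, the choice of match fields, and the action strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Packet:
    """Hypothetical packet header fields used as match keys."""
    src_ip: str
    dst_ip: str
    dst_port: int


class MatchActionTable:
    """Exact-match table: (dst_ip, dst_port) -> action name."""

    def __init__(self, default_action: str = "drop") -> None:
        self._rules: Dict[Tuple[str, int], str] = {}
        self._default = default_action

    def add_rule(self, dst_ip: str, dst_port: int, action: str) -> None:
        # In a real device the control plane would install this entry.
        self._rules[(dst_ip, dst_port)] = action

    def apply(self, pkt: Packet) -> str:
        # Data plane step: look up the key, fall back to the default action.
        return self._rules.get((pkt.dst_ip, pkt.dst_port), self._default)


if __name__ == "__main__":
    table = MatchActionTable()
    table.add_rule("10.0.0.5", 443, "forward:port1")
    print(table.apply(Packet("192.168.1.2", "10.0.0.5", 443)))
    print(table.apply(Packet("192.168.1.2", "10.0.0.5", 22)))
```

The point of the model is the split in responsibilities: rules are installed ahead of time, and per-packet work reduces to a lookup followed by a fixed action, which is the kind of operation dedicated hardware can perform at line rate.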

Use Cases: When to Choose DPU or GPU

DPUs excel in data center environments requiring optimized networking, security, and storage offloading, making them ideal for cloud infrastructure and AI inference workloads with high data throughput. GPUs are preferred for parallel computing tasks demanding massive floating-point arithmetic, such as deep learning training, scientific simulations, and high-performance graphics rendering. Selecting between DPU and GPU depends on whether the use case prioritizes data-centric acceleration (DPU) or compute-intensive parallel processing (GPU).

Market Trends and Industry Adoption

The growing demand for data-intensive workloads accelerates the adoption of Data Processing Units (DPUs) in cloud data centers and enterprise networks, as they offload networking, storage, and security tasks from CPUs. GPUs maintain dominance in AI training and high-performance computing markets, but DPUs gain traction by optimizing infrastructure efficiency and reducing latency. Industry leaders like NVIDIA and Intel are investing heavily in DPU technology, signalling a significant shift towards heterogeneous computing architectures.

Future Prospects and Innovations

Data Processing Units (DPUs) are expected to reshape cloud infrastructure by offloading networking, storage, and security tasks from CPUs, improving data center efficiency and scalability. GPUs continue to drive advances in AI and machine learning through their parallel processing capabilities, while DPU development centers on SmartNICs and programmable acceleration for real-time data management. Future systems are likely to combine DPUs and GPUs, distributing workloads between them to accelerate next-generation applications in edge computing, AI inference, and high-performance networking.

Heterogeneous computing

Heterogeneous computing leverages DPUs for data-centric tasks and GPUs for parallel processing to optimize overall system performance and efficiency.

Tensor cores

Tensor cores in GPUs are specialized hardware units designed to accelerate mixed-precision matrix operations, whereas DPUs primarily focus on data-centric processing tasks without dedicated tensor cores for AI workloads.
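A mixed-precision matrix operation of the kind mentioned here is commonly described as D = A x B + C, with low-precision inputs and a higher-precision accumulator. The following Python sketch emulates that idea using only the standard library, assuming FP16 inputs and FP32 accumulation. The function names are hypothetical, and rounding after every addition is a simplification: actual tensor core rounding behavior varies by hardware generation.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def to_fp32(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def mixed_precision_matmul(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A x B + C with FP16 inputs and an FP32 accumulator."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d: Matrix = []
    for i in range(rows):
        row: List[float] = []
        for j in range(cols):
            acc = to_fp32(c[i][j])  # accumulator starts from C in FP32
            for k in range(inner):
                # Inputs are rounded to FP16; the running sum stays in FP32.
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            row.append(acc)
        d.append(row)
    return d


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    c = [[0.5, 0.5], [0.5, 0.5]]
    print(mixed_precision_matmul(a, b, c))
```

Keeping the accumulator in a wider format limits the error that builds up when many low-precision products are summed, which is the main reason for mixing precisions at all.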

Network offloading

DPUs specialize in network offloading: they handle packet processing and security tasks on dedicated hardware, reducing the load on host CPUs and leaving GPUs free for computation.
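As one concrete instance of per-packet work, checksum calculation is a task that network hardware commonly takes over from the host. The sketch below computes the standard Internet checksum (RFC 1071) in plain Python to show what that work looks like in software. Choosing the checksum as the example is an assumption made for illustration, and the function name is hypothetical.

```python
def internet_checksum(data: bytes) -> int:
    """Return the 16-bit one's-complement Internet checksum of data (RFC 1071)."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        # Add each big-endian 16-bit word to the running sum.
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:
        # Fold any carries back into the low 16 bits.
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


if __name__ == "__main__":
    # Sample IPv4 header with the checksum field (bytes 10-11) set to zero.
    header = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
    print(hex(internet_checksum(header)))
```

Running this loop for every packet on a busy link consumes host cycles, which is why moving it to dedicated hardware is attractive.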

AI acceleration

DPU architectures optimize AI acceleration by offloading data-centric tasks from GPUs, enhancing efficiency and throughput in machine learning workloads.

Data path optimization

DPUs optimize data paths by offloading and accelerating networking, storage, and security tasks directly within the data center infrastructure, whereas GPUs focus on parallel processing for compute-intensive workloads, making DPUs more efficient for data path acceleration and management.

SmartNIC

SmartNICs built around DPUs accelerate data processing and offload network functions, work that GPUs are not designed for, improving cloud and edge computing performance.

Parallel processing units

DPUs optimize parallel processing for data-centric tasks with specialized hardware, while GPUs excel at massive parallelism for graphics rendering and general-purpose computing.

In-network computing

DPUs accelerate in-network computing by offloading data processing tasks from GPUs, enabling low-latency, high-throughput networking and enhanced performance in distributed AI workloads.

Computational offload

DPUs specialize in computational offload by accelerating data-centric tasks such as networking and security, freeing GPUs to focus solely on parallel processing and graphics-intensive workloads.

Hardware orchestration

DPUs excel in hardware orchestration by offloading and managing data-centric tasks such as networking, storage, and security, whereas GPUs primarily focus on parallel processing for compute-intensive workloads.

DPU vs GPU Infographic

njnir.com
