On-chip memory offers faster access and lower latency than off-chip memory, making it well suited to holding performance-critical data and instructions. Off-chip memory provides far larger capacity but is slower to access and costs more energy per access, because signals must leave the processor die and cross external interfaces. Designing an efficient system means balancing the speed of on-chip memory against the capacity of off-chip memory to optimize performance and energy efficiency.

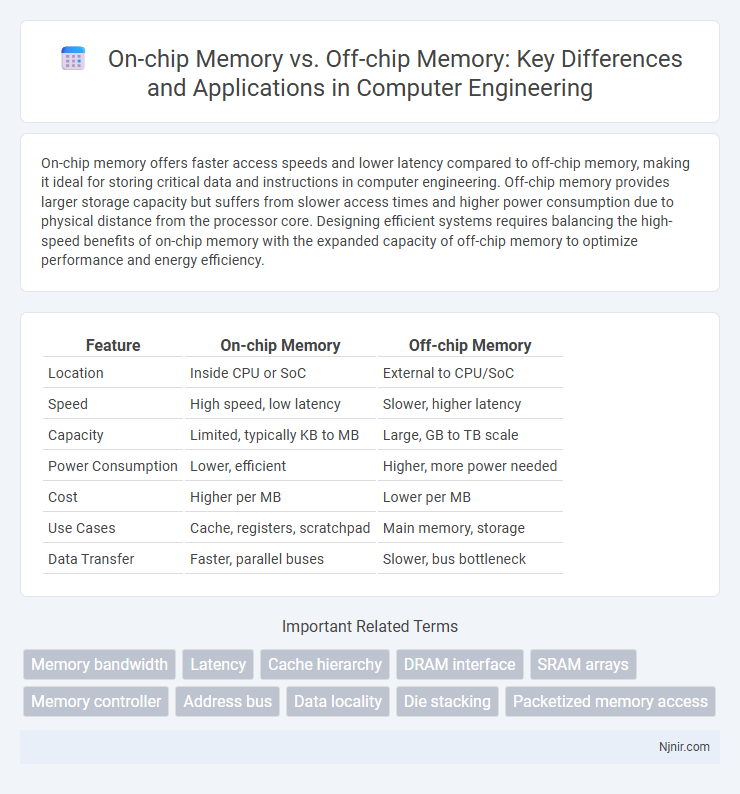

Table of Comparison

| Feature | On-chip Memory | Off-chip Memory |
|---|---|---|
| Location | Inside CPU or SoC | External to CPU/SoC |
| Speed | High speed, low latency | Slower, higher latency |
| Capacity | Limited, typically KB to MB | Large, GB to TB scale |
| Power Consumption | Lower, efficient | Higher, more power needed |
| Cost | Higher per MB | Lower per MB |
| Use Cases | Cache, registers, scratchpad | Main memory, storage |
| Data Transfer | Faster, parallel buses | Slower, bus bottleneck |

Introduction to On-chip and Off-chip Memory

On-chip memory refers to the integrated storage located within the same semiconductor chip as the processor, offering faster access speeds and lower latency due to its proximity to the CPU cores. Off-chip memory, typically DRAM or external flash, resides outside the processor chip and provides larger storage capacity but with higher latency and increased power consumption. The trade-off between on-chip and off-chip memory lies in balancing speed, capacity, and energy efficiency for optimal system performance.

Architectural Differences: On-chip vs Off-chip Memory

On-chip memory is integrated directly within the processor chip, offering low latency and high bandwidth access due to its close proximity to the CPU cores, whereas off-chip memory resides externally, connected through buses or memory controllers, resulting in higher access latency and lower bandwidth. Architecturally, on-chip memory typically includes cache levels such as L1, L2, and sometimes L3, designed for speed and minimal energy consumption, while off-chip memory encompasses DRAM modules that provide larger storage capacity but slower response times. The physical integration of on-chip memory reduces signal travel distance, enhancing performance, whereas off-chip memory requires complex interfacing and memory management to mitigate latency effects.

Access Speed and Latency Comparison

On-chip memory, such as SRAM integrated within the processor, offers significantly faster access and lower latency than off-chip memory like DRAM, because it sits close to the CPU core and avoids external bus and memory-controller delays. First-level caches typically run at or near the processor's clock speed and return data within a few cycles, while an off-chip access involves longer signal travel, queuing in the memory controller, and the DRAM's own access time. This speed differential makes on-chip memory ideal for cache storage and critical data, whereas off-chip memory serves as a larger but slower main memory resource.
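
The effect of this speed gap is often summarized with the textbook average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The sketch below is a minimal illustration of that formula; the hit time, miss penalty, and miss rates are assumed figures chosen for the example, not measurements of any particular processor.

```python
def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time: hit time plus the expected cost of a miss."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical figures, chosen only for illustration.
ON_CHIP_HIT_NS = 1.0        # assumed on-chip SRAM cache hit time
OFF_CHIP_PENALTY_NS = 80.0  # assumed extra time to fetch from off-chip DRAM

for miss_rate in (0.01, 0.05, 0.20):
    avg = amat(ON_CHIP_HIT_NS, miss_rate, OFF_CHIP_PENALTY_NS)
    print(f"miss rate {miss_rate:.0%}: {avg:.1f} ns")
```

Under these assumptions, a 5% miss rate already raises the average access time from 1 ns to 5 ns, which is why keeping frequently used data on-chip matters so much.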

Power Consumption and Efficiency

On-chip memory offers significantly lower power consumption compared to off-chip memory due to reduced signal driving requirements and shorter interconnects, leading to enhanced energy efficiency in integrated circuits. Off-chip memory, such as DRAM modules, consumes more power primarily because each transfer must drive I/O pins and longer board-level traces with higher capacitance, which increases dynamic power dissipation; DRAM also spends energy on periodic refresh. Efficient system design often leverages on-chip cache memories to minimize costly accesses to off-chip memory, optimizing both power usage and operational performance.

Data Bandwidth and Throughput

On-chip memory offers significantly higher data bandwidth and throughput compared to off-chip memory due to its proximity to the processor and integration within the same silicon die, reducing latency and enabling faster data access. Off-chip memory, such as DRAM, suffers from slower data transfer rates and higher latency due to physical distance and bus constraints, limiting overall throughput. Maximizing performance in high-speed computing applications relies heavily on optimizing on-chip memory utilization to reduce dependence on slower off-chip memory transfers.
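
As a rough illustration of the bandwidth gap, peak bandwidth can be estimated as bytes per transfer times transfers per second. In the sketch below, the DDR4-3200 figure (64-bit channel, 3200 mega-transfers per second) follows from the standard's naming; the on-chip port width and clock are assumptions made up for the comparison, and real sustained bandwidth is lower than either peak.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Peak bandwidth in GB/s (1 GB = 10**9 bytes): bytes per transfer x transfers per second."""
    return (bus_width_bits / 8) * transfers_per_second / 1e9


# One 64-bit DDR4-3200 channel: 3200 mega-transfers per second.
ddr4_channel = peak_bandwidth_gb_s(64, 3200e6)

# Hypothetical on-chip cache port: 512 bits (64 bytes) per cycle at an assumed 3 GHz clock.
on_chip_port = peak_bandwidth_gb_s(512, 3e9)

print(f"DDR4-3200 channel: {ddr4_channel:.1f} GB/s")
print(f"on-chip port (assumed): {on_chip_port:.1f} GB/s")
```

With these numbers the single DRAM channel peaks at 25.6 GB/s, while the assumed on-chip port peaks at 192 GB/s per core-facing port.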

Integration and Design Complexity

On-chip memory offers seamless integration within the processor chip, significantly reducing latency and power consumption due to proximity, whereas off-chip memory requires complex bus interfaces and longer signal paths that increase design complexity. Incorporating on-chip memory demands careful layout and thermal management to optimize space, while off-chip memory adds challenges in timing closure and signal integrity across the PCB. Choosing between the two involves balancing faster access speeds and reduced power for on-chip solutions against the scalability and cost benefits of larger off-chip memory modules.

Cost Implications in System Design

On-chip memory offers lower latency and higher bandwidth at a premium cost due to limited silicon area and complex fabrication, making it ideal for performance-critical tasks in system design. Off-chip memory provides greater capacity and cost efficiency per bit but incurs higher access latency and increased power consumption, influencing overall system cost and performance trade-offs. Designers must balance these cost implications to optimize system architecture, prioritizing on-chip memory for speed-sensitive applications and off-chip memory for bulk storage needs.

Impact on System Scalability

On-chip memory, integrated within the processor, offers low latency and high bandwidth, significantly enhancing system scalability by reducing data access bottlenecks. Off-chip memory, typically DRAM, provides larger storage capacity but incurs higher latency and contention, limiting scalability in large-scale systems. Efficient system design balances the fast access of on-chip memory with the extensive capacity of off-chip memory to optimize overall scalability and performance.

Application Use Cases and Suitability

On-chip memory offers ultra-fast access speeds and low latency, making it ideal for cache storage in CPUs, real-time data processing in embedded systems, and high-performance GPU operations where immediate data retrieval is critical. Off-chip memory provides larger capacity and flexibility, suitable for applications requiring extensive data storage such as database servers, gaming consoles, and mobile devices running complex operating systems with multitasking demands. Choosing between on-chip and off-chip memory depends on balancing speed requirements with storage capacity, where latency-sensitive tasks benefit from on-chip memory while large-scale data handling relies on off-chip solutions.

Future Trends in Memory Integration

Future trends in memory integration emphasize the increasing adoption of on-chip memory technologies such as embedded DRAM (eDRAM) and non-volatile memories like MRAM and ReRAM to enhance speed and energy efficiency. Advances in 3D integration and heterogeneous packaging enable closer coupling between processors and off-chip memory, reducing latency and increasing bandwidth compared to traditional board-level solutions like DDR modules; high-bandwidth memory (HBM), which places stacked DRAM dies in the same package as the processor, is a current example. Emerging architectures prioritize the fusion of compute and memory elements, supporting AI accelerators and edge devices with higher performance-per-watt through innovative memory hierarchies and integration strategies.

Memory bandwidth

On-chip memory offers significantly higher memory bandwidth and lower latency compared to off-chip memory, enabling faster data access and improved overall system performance.

Latency

On-chip memory offers significantly lower latency, typically a few nanoseconds, whereas off-chip memory latency ranges from tens to hundreds of nanoseconds due to physical distance and bus speed limitations.

Cache hierarchy

On-chip memory, integrated within the processor, offers faster access speeds and lower latency essential for efficient cache hierarchy performance, whereas off-chip memory provides larger storage capacity but suffers from higher latency due to physical separation.
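
To make the hit and miss behavior of a cache concrete, here is a minimal sketch of a direct-mapped cache that tracks only tags. The class name `DirectMappedCache`, the line count, and the line size are hypothetical choices for illustration; real caches are usually set-associative and far larger.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that tracks tags only, not data."""

    def __init__(self, num_lines: int, line_bytes: int) -> None:
        self.num_lines = num_lines
        self.line_bytes = line_bytes
        self.tags = [None] * num_lines  # one stored tag per cache line
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, fill the line as if fetched from off-chip memory."""
        block = address // self.line_bytes  # which memory block holds this byte
        index = block % self.num_lines      # which cache line the block maps to
        tag = block // self.num_lines       # distinguishes blocks sharing that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag
        self.misses += 1
        return False


cache = DirectMappedCache(num_lines=4, line_bytes=16)
for addr in range(0, 64, 4):  # sequential 4-byte reads over 64 bytes
    cache.access(addr)
print(cache.hits, cache.misses)
```

In this run each 16-byte line misses once and then serves the next three reads, so only 4 of the 16 accesses would have to go off-chip.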

DRAM interface

On-chip memory offers faster access and lower latency because it is integrated directly with the processor, while off-chip memory reached through a DRAM interface provides larger capacity but incurs higher latency and power consumption due to longer signal paths and external bus communication.

SRAM arrays

On-chip SRAM arrays offer faster access times and lower latency compared to off-chip memory, enabling higher performance and energy efficiency in integrated circuits.

Memory controller

The memory controller manages data transfer and timing between on-chip memory, which offers faster access and lower latency, and off-chip memory, which provides greater capacity but needs more complex control, such as command scheduling and DRAM refresh, to cope with its higher latency.

Address bus

On-chip memory is small, so it needs only a narrow address bus, carried on short wires inside the processor for fast, low-latency access. Off-chip memory spans a much larger address space and must send addresses over an external bus; DRAM typically multiplexes row and column addresses over shared pins, which adds latency and power consumption.
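
The link between capacity and address width is simple arithmetic: a byte-addressable memory of N bytes needs about log2(N) address bits. The sketch below assumes byte addressing, and the two capacities are illustrative sizes rather than figures from any specific device.

```python
def address_bits(capacity_bytes: int) -> int:
    """Bits needed to give every byte in the memory a unique address."""
    if capacity_bytes < 1:
        raise ValueError("capacity must be positive")
    return (capacity_bytes - 1).bit_length()


KIB, GIB = 2**10, 2**30
print(address_bits(32 * KIB))  # assumed 32 KiB on-chip SRAM
print(address_bits(16 * GIB))  # assumed 16 GiB off-chip DRAM
```

For these sizes the on-chip SRAM needs 15 address bits and the off-chip DRAM needs 34.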

Data locality

On-chip memory offers faster access and improved data locality by storing critical data closer to the processor, while off-chip memory provides larger capacity but incurs higher latency due to physical distance.
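
One way to see why locality matters is to count how many cache lines an access pattern touches, since each new line means a fetch from slower memory. The sketch below assumes a cold cache, 64-byte lines, and 4-byte elements; the function name `lines_fetched` and all parameters are hypothetical.

```python
def lines_fetched(num_elements: int, element_bytes: int, stride: int, line_bytes: int = 64) -> int:
    """Count distinct cache lines touched when reading num_elements elements at a given element stride."""
    touched = {(i * stride * element_bytes) // line_bytes for i in range(num_elements)}
    return len(touched)


# 1024 four-byte elements read sequentially versus with a 16-element stride.
print(lines_fetched(1024, 4, stride=1))
print(lines_fetched(1024, 4, stride=16))
```

Sequential reads touch 64 lines, while the strided pattern touches 1024, one per read, so the same number of reads causes sixteen times as many line fetches.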

Die stacking

Die stacking increases the memory capacity and bandwidth available close to the processor by vertically stacking memory dies within the same package, significantly reducing latency compared to traditional off-chip memory solutions.

Packetized memory access

On-chip memory offers low-latency, high-bandwidth packetized memory access enabling faster data transfer and reduced bottlenecks compared to off-chip memory, which suffers from higher latency and limited bandwidth due to longer physical distances and external interface constraints.

njnir.com