Cache memory offers faster data access speeds compared to main memory by storing frequently used instructions and data closer to the processor, reducing latency. Main memory, or RAM, provides larger storage capacity but with slower access times, handling bulk data and program storage for system operations. Optimizing the balance between cache and main memory size directly influences overall system performance and efficiency in computer engineering.

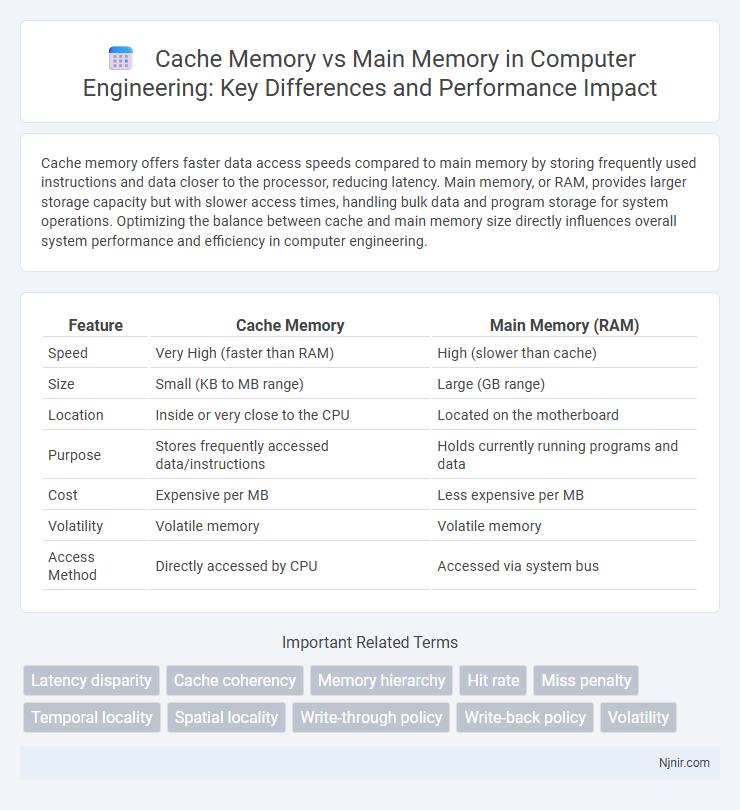

Table of Comparison

| Feature | Cache Memory | Main Memory (RAM) |
|---|---|---|
| Speed | Very High (faster than RAM) | High (slower than cache) |
| Size | Small (KB to MB range) | Large (GB range) |
| Location | Inside or very close to the CPU | Located on the motherboard |
| Purpose | Stores frequently accessed data/instructions | Holds currently running programs and data |
| Cost | Expensive per MB | Less expensive per MB |
| Volatility | Volatile memory | Volatile memory |
| Access Method | Directly accessed by CPU | Accessed via system bus |

Introduction to Cache Memory and Main Memory

Cache memory is a small, high-speed storage located close to the CPU, designed to temporarily hold frequently accessed data and instructions to accelerate processing speed. Main memory, or RAM, serves as the primary storage for active programs and data, offering larger capacity but slower access times compared to cache memory. The interplay between cache and main memory optimizes overall system performance by reducing latency and improving data retrieval efficiency.

Definitions and Roles in Computer Architecture

In computer architecture, cache memory acts as a buffer between the CPU and main memory: it keeps copies of recently and frequently used data and instructions so that most requests are served without waiting on RAM. Main memory, or RAM, is the larger, slower volatile storage that holds the programs and data currently in use by the CPU. Together they reduce the average latency the CPU sees and raise effective data throughput.

Structural Differences Between Cache and Main Memory

Cache memory is smaller in size, located closer to the CPU, and uses faster SRAM technology, enhancing data access speed. Main memory, or RAM, is larger, slower, and built with DRAM technology, serving as the primary storage for active programs and data. The structural hierarchy positions cache memory as a high-speed buffer that reduces latency compared to the bulkier, lower-speed main memory.

Speed and Performance Comparison

Cache memory operates at significantly higher speeds than main memory due to its proximity to the CPU and its use of faster semiconductor technology like SRAM. This speed advantage reduces latency and accelerates data access, resulting in improved overall system performance. Main memory, or RAM, while larger in capacity, is slower because it uses DRAM technology and is located farther from the CPU, making cache essential for high-speed processing tasks.

Hierarchical Levels and Access Times

Cache memory sits near the top of the memory hierarchy, just below the CPU registers, with access times of a few nanoseconds. Main memory occupies the next level down, with access times in the tens of nanoseconds. The hierarchy keeps frequently accessed data in cache to minimize latency, while main memory serves as the larger, slower store for running processes. Because most accesses hit in the cache, the average access time is much closer to the cache's latency than to main memory's.
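The effect on average access time can be estimated with the standard average memory access time (AMAT) relation: hit time plus miss rate times the cost of going one level further out. The sketch below is a minimal illustration; the function name `average_access_time` and all latencies and miss rates are assumptions chosen for the example, not measurements of any real processor.

```python
def average_access_time(levels, memory_latency_ns):
    """Average memory access time (AMAT) for a cache hierarchy.

    levels: list of (hit_time_ns, miss_rate) tuples, ordered from the
            level closest to the CPU outward. miss_rate is the local
            miss rate of that level (fraction of the accesses reaching
            it that miss).
    memory_latency_ns: time to fetch from main memory once the last
            cache level has missed.
    """
    # Work from main memory back toward the CPU:
    # AMAT_i = hit_time_i + miss_rate_i * AMAT_(i+1)
    penalty = memory_latency_ns
    for hit_time_ns, miss_rate in reversed(levels):
        penalty = hit_time_ns + miss_rate * penalty
    return penalty


# Illustrative numbers only (assumptions, not measurements).
single_level = average_access_time([(1.0, 0.05)], 100.0)
two_level = average_access_time([(1.0, 0.05), (10.0, 0.20)], 100.0)
print(f"one cache level : {single_level:.2f} ns")
print(f"two cache levels: {two_level:.2f} ns")
```

With these assumed figures, even a 5% miss rate leaves the average several times higher than the cache's hit time, which is why a second cache level between the first cache and main memory can pay off.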

Cost and Storage Capacity Analysis

Cache memory offers faster data access speeds due to its proximity to the CPU but comes at a significantly higher cost per megabyte compared to main memory, making it more expensive to implement at larger sizes. Main memory, typically composed of DRAM, provides substantially greater storage capacity at a lower cost, supporting extensive data storage but with slower access times. Balancing cost and storage capacity often requires using small, high-speed cache memory alongside larger, cost-efficient main memory to optimize overall system performance.

Data Management and Retrieval Techniques

Cache memory uses small, fast storage cells near the CPU to hold frequently accessed data, and places each memory block using one of three mapping schemes: direct-mapped, fully associative, or set-associative. Main memory, larger but slower, is managed by the operating system through virtual memory and page tables, which support multitasking and larger datasets by moving pages between RAM and secondary storage as needed. Effective data management pairs cache replacement policies, such as Least Recently Used (LRU), with demand paging in main memory to reduce access latency and improve overall system performance.
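To make the placement schemes and LRU replacement concrete, here is a small software model. It is a sketch under stated assumptions, not how any particular CPU implements its cache: the class name `SetAssociativeCache`, its parameters, and the sample trace are all hypothetical, and only tags are tracked (no data).

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy set-associative cache with LRU replacement (tags only, no data)."""

    def __init__(self, num_sets, ways, line_size):
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        # One OrderedDict per set; key order tracks recency (oldest first).
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0

    def split(self, address):
        """Return (tag, set_index, offset) for a byte address."""
        offset = address % self.line_size
        block = address // self.line_size
        return block // self.num_sets, block % self.num_sets, offset

    def access(self, address):
        """Look up an address; return True on a hit, False on a miss."""
        tag, index, _ = self.split(address)
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)  # mark as most recently used
            self.hits += 1
            return True
        if len(lines) >= self.ways:
            lines.popitem(last=False)  # evict the least recently used tag
        lines[tag] = True
        self.misses += 1
        return False


# Addresses 0, 64 and 128 all map to set 0 in this configuration.
cache = SetAssociativeCache(num_sets=4, ways=2, line_size=16)
trace = [0, 64, 0, 128, 64, 0]
results = [cache.access(a) for a in trace]
print(results, cache.hits, cache.misses)
```

In this model, setting `ways=1` gives a direct-mapped cache and setting `num_sets=1` gives a fully associative one, so the three schemes differ only in how many candidate slots each block has.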

Impact on System Performance

Cache memory significantly enhances system performance by providing faster data access compared to main memory, reducing CPU wait times and improving execution speed. The proximity of cache to the CPU and its higher access speeds enable frequent data retrieval without the latency associated with main memory, leading to efficient processing and reduced bottlenecks. Main memory, while larger in capacity, has slower access times, making cache memory crucial for optimizing overall system responsiveness and throughput.

Use Cases and Practical Applications

Cache memory accelerates data access for the CPU by storing frequently used instructions and data, enhancing performance in tasks like gaming, video editing, and real-time processing where low latency is critical. Main memory (RAM) handles larger volumes of data and running applications, supporting multitasking and resource-intensive software such as databases, operating systems, and virtual machines. Efficient use of cache memory optimizes CPU cycles, while ample main memory enables smooth execution of complex applications and large datasets.

Future Trends in Memory Technologies

Future trends in memory technologies emphasize the integration of advanced cache memory with main memory to enhance processing speed and energy efficiency. Emerging non-volatile memory types like MRAM and ReRAM are poised to blur the traditional boundary between cache and main memory by offering faster access times and persistent data storage. Innovations in 3D stacking and heterogeneous memory architectures aim to optimize the hierarchy, reducing latency and increasing bandwidth for next-generation computing systems.

Latency disparity

Cache memory offers significantly lower latency, typically 1-10 nanoseconds, compared to main memory latency of 50-100 nanoseconds, enabling faster data access and improved CPU performance.

Cache coherency

Cache coherency keeps data consistent in a multiprocessor system: when one processor updates a memory location, copies of that location held in other processors' caches are updated or invalidated, so that no processor reads a stale value.
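The idea can be illustrated with a deliberately simplified write-invalidate model. Real hardware uses per-line state machines such as MESI and does this in the cache controllers; the class `CoherentSystem` below is a hypothetical sketch that assumes write-through to memory and one private cache per core.

```python
class CoherentSystem:
    """Toy write-invalidate coherence across private per-core caches."""

    def __init__(self, num_cores):
        self.memory = {}                              # address -> value
        self.caches = [{} for _ in range(num_cores)]  # one private cache per core

    def read(self, core, address):
        cache = self.caches[core]
        if address not in cache:                      # miss: fetch from memory
            cache[address] = self.memory.get(address, 0)
        return cache[address]

    def write(self, core, address, value):
        # Invalidate every other core's copy so no stale value can be read.
        for other, cache in enumerate(self.caches):
            if other != core:
                cache.pop(address, None)
        self.caches[core][address] = value
        self.memory[address] = value                  # write-through for simplicity


system = CoherentSystem(num_cores=2)
system.write(0, 0x40, 1)
first = system.read(1, 0x40)    # core 1 caches the value written by core 0
system.write(0, 0x40, 2)        # invalidates core 1's cached copy
second = system.read(1, 0x40)   # core 1 misses and refetches the new value
print(first, second)
```

Without the invalidation loop in `write`, the second read by core 1 would return its old cached copy, which is exactly the inconsistency coherency protocols exist to prevent.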

Memory hierarchy

Cache memory, positioned higher in the memory hierarchy than main memory, offers faster access speeds and lower latency by storing frequently accessed data closer to the CPU, thereby optimizing system performance.

Hit rate

Hit rate is the fraction of memory accesses served directly from the cache. A higher hit rate improves system performance because fewer accesses have to wait on slower main memory.

Miss penalty

Miss penalty is the extra time incurred when requested data is not in the cache and must be fetched from main memory, whose latency is much higher. Caches keep the miss penalty from dominating performance by making misses rare.

Temporal locality

Cache memory exploits temporal locality by storing recently accessed data to accelerate CPU access compared to slower main memory.
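A quick way to see temporal locality at work is to replay two access patterns through a small model cache and compare hit rates. This is an illustrative sketch: the helper `lru_hit_rate`, the capacity of 16 entries, and both traces are assumptions made for the example.

```python
from collections import OrderedDict


def lru_hit_rate(trace, capacity):
    """Hit rate of a fully associative LRU cache holding `capacity` items."""
    cache = OrderedDict()
    hits = 0
    for address in trace:
        if address in cache:
            cache.move_to_end(address)     # refresh recency on reuse
            hits += 1
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict least recently used
            cache[address] = True
    return hits / len(trace) if trace else 0.0


# A loop that keeps revisiting 8 addresses (strong temporal locality)...
reuse_trace = list(range(8)) * 100
# ...versus a stream that touches every address exactly once (none).
stream_trace = list(range(800))

reuse_rate = lru_hit_rate(reuse_trace, capacity=16)
stream_rate = lru_hit_rate(stream_trace, capacity=16)
print(f"reuse: {reuse_rate:.2%}  stream: {stream_rate:.2%}")
```

Both traces have the same length; only the amount of reuse differs, and that alone decides whether the cache helps.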

Spatial locality

Cache memory exploits spatial locality by fetching an entire block (cache line) of adjacent addresses on a miss, so that subsequent accesses to neighboring data are served from the cache rather than from slower main memory.
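The benefit of block-sized fetches shows up when comparing sequential and strided access in a simple model. The sketch below assumes a direct-mapped cache with 64-byte lines and 32 lines; these sizes and the helper name `line_hit_rate` are illustrative assumptions only.

```python
def line_hit_rate(addresses, line_size, num_lines):
    """Hit rate of a direct-mapped cache that fetches whole lines on a miss."""
    resident = [None] * num_lines    # line number held in each slot
    hits = 0
    for address in addresses:
        line = address // line_size  # every byte in a line shares this number
        slot = line % num_lines
        if resident[slot] == line:
            hits += 1
        else:
            resident[slot] = line    # a miss brings in the whole line
    return hits / len(addresses) if addresses else 0.0


LINE_SIZE = 64   # bytes per line (assumed)
NUM_LINES = 32   # lines in the cache (assumed)

# Sequential 4-byte reads: 16 consecutive reads fall in each 64-byte line.
sequential = [i * 4 for i in range(1024)]
# Strided reads that jump a full line each time: no neighbour is ever reused.
strided = [i * LINE_SIZE for i in range(1024)]

seq_rate = line_hit_rate(sequential, LINE_SIZE, NUM_LINES)
stride_rate = line_hit_rate(strided, LINE_SIZE, NUM_LINES)
print(f"sequential: {seq_rate:.4f}  strided: {stride_rate:.4f}")
```

In the sequential case, one miss per line is followed by fifteen hits on its neighbors; in the strided case every access lands on a new line, so the fetched neighbors are never used.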

Write-through policy

A write-through cache updates both the cache and main memory on every write, which keeps the two consistent but generates more memory traffic and higher write latency than a write-back policy.

Write-back policy

Cache memory using the write-back policy improves system performance by temporarily storing modified data locally and updating main memory only when the cache block is replaced, reducing write operations and memory bus traffic.
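The difference in memory traffic between the two write policies can be counted with a very small model. This sketch assumes a cache that holds a single line and allocates on write; the function `memory_writes` and the trace are hypothetical and chosen only to make the contrast visible.

```python
def memory_writes(write_trace, policy):
    """Count main-memory writes for a single-line cache under a write policy.

    write_trace: sequence of line numbers being written by the CPU.
    policy: "write-through" or "write-back".
    """
    if policy not in ("write-through", "write-back"):
        raise ValueError(f"unknown policy: {policy}")
    resident = None   # line currently held in the cache
    dirty = False     # True if the resident line differs from memory
    writes = 0
    for line in write_trace:
        if line != resident:
            if policy == "write-back" and dirty:
                writes += 1          # flush the modified line on eviction
            resident, dirty = line, False
        if policy == "write-through":
            writes += 1              # every store also goes to memory
        else:
            dirty = True             # defer the memory update
    if policy == "write-back" and dirty:
        writes += 1                  # final flush of the last dirty line
    return writes


# Ten stores to line 0, then ten stores to line 1 (illustrative trace).
trace = [0] * 10 + [1] * 10
through = memory_writes(trace, "write-through")
back = memory_writes(trace, "write-back")
print(through, back)
```

Under these assumptions, write-through sends every store to memory, while write-back sends one write per modified line, at the cost of memory being temporarily out of date.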

Volatility

Cache memory is volatile and faster, providing temporary storage for frequently accessed data, while main memory is also volatile but larger and slower, serving as the primary workspace for active processes.

njnir.com
