TensorFlow Lite and ONNX Runtime are both widely used to deploy machine learning models on edge devices, but they differ in framework compatibility and performance characteristics. TensorFlow Lite integrates tightly with TensorFlow models and excels in mobile and embedded environments through efficient quantization and hardware acceleration. ONNX Runtime runs models exported from multiple frameworks, such as PyTorch and TensorFlow, in the standardized ONNX format. This gives it cross-platform flexibility and optimized execution across a variety of hardware backends.

Table of Comparison

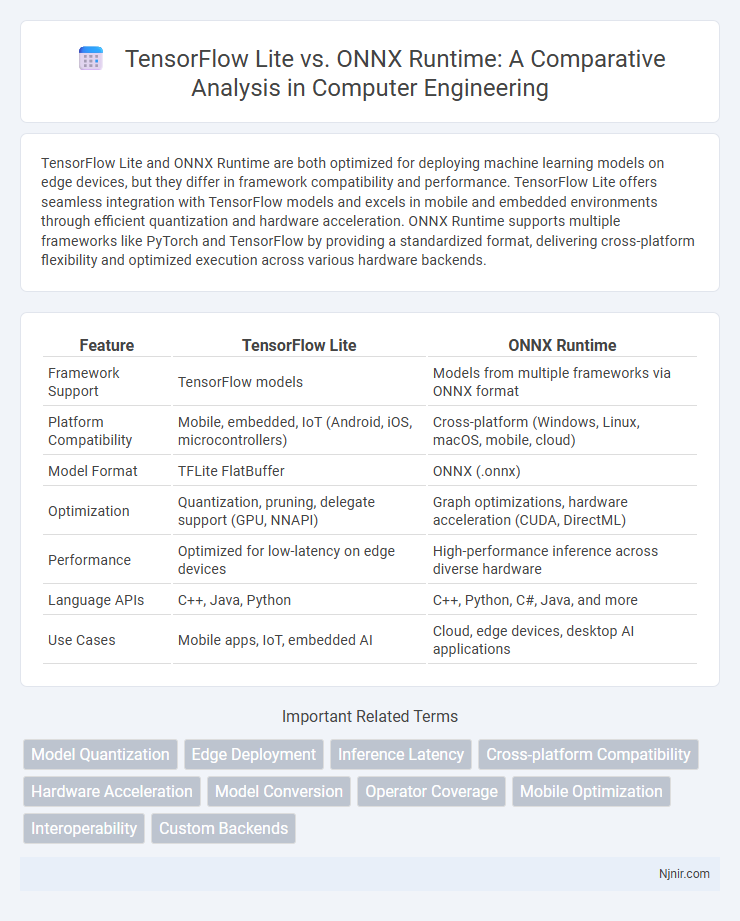

| Feature | TensorFlow Lite | ONNX Runtime |
|---|---|---|
| Framework Support | TensorFlow models | Models from multiple frameworks via ONNX format |
| Platform Compatibility | Mobile, embedded, IoT (Android, iOS, microcontrollers) | Cross-platform (Windows, Linux, macOS, mobile, cloud) |
| Model Format | TFLite FlatBuffer (.tflite) | ONNX (.onnx) |
| Optimization | Quantization, pruning, delegate support (GPU, NNAPI) | Graph optimizations, hardware acceleration (CUDA, DirectML) |
| Performance | Optimized for low-latency inference on edge devices | High-performance inference across diverse hardware |
| Language APIs | C, C++, Java, Swift, Objective-C, Python | C, C++, Python, C#, Java, JavaScript, and more |
| Use Cases | Mobile apps, IoT, embedded AI | Cloud, edge devices, desktop AI applications |

Introduction to TensorFlow Lite and ONNX Runtime

TensorFlow Lite is a lightweight, cross-platform inference runtime optimized for mobile and edge devices. It delivers low-latency inference with a small binary size. ONNX Runtime is an open-source inference engine developed by Microsoft. It runs a broad range of models in the ONNX format and provides high-performance execution across multiple hardware platforms. Both emphasize efficient model execution, but they serve different ecosystems and deployment scenarios.

Architecture Overview: TensorFlow Lite vs ONNX Runtime

TensorFlow Lite uses a lightweight interpreter designed for mobile and embedded devices. Models are stored in a compact FlatBuffer format, and delegates hand the supported parts of the graph to hardware accelerators. ONNX Runtime has a modular architecture built around an extensible execution provider interface. This lets it target many hardware backends while running any compatible ONNX model. Both prioritize efficient inference but take different approaches: TensorFlow Lite targets resource-constrained environments with a compact runtime, while ONNX Runtime emphasizes flexibility and cross-platform performance through pluggable execution providers.
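TensorFlow Lite delegates and ONNX Runtime execution providers work in a similar way. Each claims the parts of a graph it can run and leaves the rest to the CPU. The sketch below models that idea in plain Python under simplifying assumptions:

- the graph is a linear list of op names rather than a real graph;
- the `partition` helper is hypothetical and is not part of either runtime's API;
- the op sets are illustrative only.

```python
from typing import List, Set, Tuple

Partition = Tuple[str, List[str]]


def partition(ops: List[str], supported: Set[str]) -> List[Partition]:
    """Split a linear op sequence into consecutive (backend, ops) runs.

    Ops found in `supported` are claimed by the accelerator; all others
    stay on the CPU. Each change of backend marks a partition boundary.
    """
    parts: List[Partition] = []
    for op in ops:
        backend = "accelerator" if op in supported else "cpu"
        if parts and parts[-1][0] == backend:
            parts[-1][1].append(op)  # extend the current run
        else:
            parts.append((backend, [op]))  # start a new partition
    return parts


# Hypothetical model and accelerator capabilities, for illustration only.
model_ops = ["CONV_2D", "RELU", "CONV_2D", "CUSTOM_NMS", "SOFTMAX"]
accelerator_ops = {"CONV_2D", "RELU", "SOFTMAX"}

for backend, run in partition(model_ops, accelerator_ops):
    print(backend, run)
```

Here the single unsupported CUSTOM_NMS op splits the model into three partitions, so tensors cross between accelerator and CPU twice. Keeping such boundaries to a minimum is often what makes an accelerated model fast.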

Model Compatibility and Supported Formats

TensorFlow Lite primarily runs TensorFlow models converted into its FlatBuffer format (.tflite), which gives the best compatibility for networks trained in TensorFlow. ONNX Runtime offers broader model compatibility through the Open Neural Network Exchange (ONNX) format. ONNX can represent models trained in several frameworks:

- PyTorch, exported with the torch.onnx exporter;
- TensorFlow, converted with tf2onnx;
- scikit-learn, converted with skl2onnx.

This flexibility makes ONNX Runtime well suited to deploying heterogeneous machine learning models across diverse platforms and devices.
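The two formats can be told apart from a file's first bytes. FlatBuffer files may carry a 4-byte file identifier, and the TFLite schema declares it as "TFL3". ONNX files are Protocol Buffers with no magic number. The `detect_model_format` helper below is a hypothetical, best-effort sketch built on those two facts, not a validator for either format.

```python
from pathlib import PurePath

TFLITE_IDENTIFIER = b"TFL3"  # file_identifier declared in the TFLite FlatBuffer schema


def detect_model_format(header: bytes, filename: str) -> str:
    """Best-effort guess at a model file's format from its first bytes and name."""
    # A FlatBuffer's optional 4-byte file identifier sits right after the
    # 4-byte root offset, i.e. at bytes 4..8.
    if header[4:8] == TFLITE_IDENTIFIER:
        return "tflite"
    # ONNX models are Protocol Buffers with no magic number, so the
    # conventional .onnx extension is the practical signal.
    if PurePath(filename).suffix.lower() == ".onnx":
        return "onnx"
    return "unknown"


if __name__ == "__main__":
    import sys

    # Usage: python detect.py model1.tflite model2.onnx ...
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            print(path, detect_model_format(f.read(8), path))
```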

Deployment Platforms and Ecosystem Integration

TensorFlow Lite excels in deployment on mobile and edge devices. It offers optimized performance on Android and iOS and integrates closely with TensorFlow's ecosystem and tools such as the TensorFlow Model Optimization Toolkit. ONNX Runtime supports a broader range of deployment platforms, including cloud, edge, and desktop environments. It also lets models from various frameworks, such as PyTorch and scikit-learn, run efficiently on the same engine. ONNX Runtime's ecosystem therefore favors flexibility and cross-platform compatibility, while TensorFlow Lite benefits from tight coupling with TensorFlow's end-to-end ML pipeline and community resources.

Performance Benchmarking: Speed and Accuracy

TensorFlow Lite is optimized for mobile and edge devices. It offers low latency and supports quantized models, which improve speed, usually with only a small loss of accuracy. ONNX Runtime offers broad model compatibility across platforms and can deliver fast inference on CPUs and GPUs by combining hardware acceleration with graph optimizations.

Results vary with the model, the hardware, and the configuration. In general, TensorFlow Lite tends to do well in lightweight mobile environments, while ONNX Runtime's multiple execution providers make it easier to scale across backends. Accuracy depends mainly on the model and on any quantization applied, not on the runtime itself. For these reasons, the only reliable comparison is a benchmark on the target device.
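A fair latency comparison follows a few rules:

- run the same model and input on each runtime;
- discard warm-up iterations;
- report robust statistics such as the median and a tail percentile rather than a single timing.

The `benchmark` helper below is a hypothetical, runtime-agnostic sketch of that methodology using only the Python standard library.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(run_once: Callable[[], object], warmup: int = 5, iters: int = 50) -> Dict[str, float]:
    """Time a zero-argument inference callable; return latency stats in ms."""
    if iters < 2:
        raise ValueError("need at least 2 timed iterations for percentiles")
    for _ in range(warmup):
        run_once()  # untimed: lets caches, allocators, and delegates warm up
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(samples),
        "p90_ms": statistics.quantiles(samples, n=10)[-1],  # 90th percentile
        "mean_ms": statistics.fmean(samples),
    }
```

Usage depends on objects that are assumed to be set up beforehand:

- For a TensorFlow Lite interpreter whose tensors are already allocated and filled, call `benchmark(interpreter.invoke)`.
- For an ONNX Runtime session, call `benchmark(lambda: session.run(None, feeds))`.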

Memory Footprint and Resource Utilization

TensorFlow Lite uses a lightweight interpreter designed for mobile and embedded devices. It reduces memory footprint through model quantization and through pruning, which is available via the TensorFlow Model Optimization Toolkit. ONNX Runtime supports a broad range of hardware accelerators and applies graph optimizations. However, a full build typically has a larger binary and memory overhead because it carries a general-purpose execution environment with many operators and execution providers. Its minimal build and ORT model format narrow that gap on mobile. Developers targeting minimal memory consumption on edge devices therefore often prefer TensorFlow Lite, while ONNX Runtime trades some resource usage for flexibility.
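The effect of quantization on footprint can be estimated with simple arithmetic: weight storage is roughly the parameter count times the bytes per parameter. The sketch below makes two assumptions:

- the model has a hypothetical 5 million parameters;
- only weights are counted; activation buffers, the runtime binary, and allocator overhead come on top and differ between the two runtimes.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_bytes(num_params: int, dtype: str) -> int:
    """Approximate storage for model weights alone (no activations, no runtime)."""
    return num_params * BYTES_PER_PARAM[dtype]


params = 5_000_000  # hypothetical model size
for dtype in ("float32", "float16", "int8"):
    print(f"{dtype:<8} {weight_bytes(params, dtype) / 1e6:.1f} MB")
```

For this example, the weights shrink from 20 MB in float32 to 10 MB in float16 and 5 MB in int8. This is the 4x reduction usually quoted for 8-bit quantization.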

Hardware Acceleration and Device Support

TensorFlow Lite offers hardware acceleration through delegates, including GPU, NNAPI (Android), Core ML (iOS), and Edge TPU, which optimize performance on a wide range of mobile and embedded devices. ONNX Runtime provides versatile acceleration through execution providers such as CUDA, DirectML, and OpenVINO. These enable efficient execution on GPUs, CPUs, and specialized accelerators on Windows, Linux, and edge platforms. Both frameworks support diverse device ecosystems. TensorFlow Lite is specifically tailored for mobile and IoT environments, while ONNX Runtime excels in cross-platform compatibility and enterprise AI deployments.
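ONNX Runtime takes an ordered list of providers when a session is created. It assigns each part of the graph to the first provider in that list that can run it. The sketch below builds that list on a machine where only some providers are installed. The provider names are ONNX Runtime's standard identifiers; the `choose_providers` helper and the preference order are hypothetical.

```python
from typing import List, Sequence

CPU = "CPUExecutionProvider"


def choose_providers(preferred: Sequence[str], available: Sequence[str]) -> List[str]:
    """Keep installed providers from `preferred` in priority order, then add CPU."""
    chosen = [p for p in preferred if p in available and p != CPU]
    chosen.append(CPU)  # explicit CPU fallback for nodes no accelerator claims
    return chosen


# Hypothetical priority order; adjust for the target hardware.
preferred = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "DmlExecutionProvider",  # DirectML on Windows
    "OpenVINOExecutionProvider",
]
# On a real machine this would be onnxruntime.get_available_providers().
available = ["CUDAExecutionProvider", "CPUExecutionProvider"]

print(choose_providers(preferred, available))
# ['CUDAExecutionProvider', 'CPUExecutionProvider']
```

The resulting list would then be passed to `onnxruntime.InferenceSession("model.onnx", providers=...)`, where "model.onnx" is a placeholder path. Listing the CPU provider last makes the fallback explicit.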

Developer Experience and Tooling

TensorFlow Lite offers a streamlined developer experience with tools for each stage of deployment:

- the TFLite Converter for model conversion;
- TensorFlow Lite Model Maker for transfer learning on common tasks;
- benchmarking and profiling tools within the TensorFlow ecosystem.

ONNX Runtime provides a hardware-agnostic environment with APIs in multiple languages, configurable graph optimization levels, and quantization tools. It also includes a built-in profiler whose JSON traces can be inspected in Chrome's tracing viewer. Both platforms emphasize efficient model deployment, but TensorFlow Lite excels in mobile and embedded scenarios, while ONNX Runtime prioritizes cross-platform flexibility and interoperability.

Community Support and Documentation

TensorFlow Lite benefits from Google's extensive ecosystem, which offers comprehensive documentation, active forums, and regular updates that simplify model deployment on mobile and embedded devices. ONNX Runtime is an open-source project led by Microsoft. The ONNX format itself is governed by the LF AI & Data Foundation, with contributions from many hardware and software vendors. The project provides diverse language bindings, detailed guides, and continuous contributions that improve interoperability across platforms. Both frameworks have active communities and thorough documentation. TensorFlow Lite often has stronger mobile-specific resources, while ONNX Runtime stands out for cross-platform versatility.

Use Cases and Application Scenarios

TensorFlow Lite excels in mobile and embedded device deployment, offering optimized performance for Android and iOS applications with efficient model compression and acceleration on edge hardware. ONNX Runtime is highly versatile, supporting diverse hardware platforms and frameworks, making it ideal for cross-platform inference in cloud environments, desktops, and IoT devices. TensorFlow Lite is preferred for resource-constrained environments requiring real-time inference, while ONNX Runtime suits scenarios demanding interoperability and rapid deployment across multiple ecosystems.

Model Quantization

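TensorFlow Lite offers several post-training quantization options (dynamic-range, full-integer, and float16), as well as quantization-aware training, for optimized mobile deployment. ONNX Runtime supports two quantization modes to improve cross-platform model efficiency and inference speed:

- dynamic quantization, where activation scales are computed at run time;
- static quantization, where activation scales are calibrated ahead of time on representative data.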
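Both runtimes build on the same affine mapping between floats and 8-bit integers. TensorFlow Lite's quantization spec defines real_value = (int8_value - zero_point) * scale. ONNX's QuantizeLinear and DequantizeLinear operators use the equivalent q = saturate(round(x / scale) + zero_point).

The sketch below applies that mapping to a few hypothetical values with an asymmetric int8 range, the scheme typically used for activations. It illustrates the arithmetic only and is not either library's quantizer; TensorFlow Lite, for example, quantizes weights symmetrically with a zero point of 0.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def quant_params(xmin: float, xmax: float) -> Tuple[float, int]:
    """Scale and zero point mapping [xmin, xmax] onto [QMIN, QMAX]."""
    xmin, xmax = min(xmin, 0.0), max(xmax, 0.0)  # include 0 so it is exactly representable
    scale = (xmax - xmin) / (QMAX - QMIN) or 1.0  # guard against an all-zero range
    zero_point = round(QMIN - xmin / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(xs: Sequence[float], scale: float, zp: int) -> List[int]:
    # q = clamp(round(x / scale) + zero_point); Python's round() is half-to-even
    return [max(QMIN, min(QMAX, round(x / scale) + zp)) for x in xs]


def dequantize(qs: Sequence[int], scale: float, zp: int) -> List[float]:
    # x is recovered approximately as (q - zero_point) * scale
    return [(q - zp) * scale for q in qs]


values = [-0.5, 0.0, 0.25, 1.0]  # hypothetical float tensor
scale, zp = quant_params(min(values), max(values))
q = quantize(values, scale, zp)
print(scale, zp, q)
print(dequantize(q, scale, zp))
```

Each dequantized value lands within half a quantization step (scale / 2) of the original, and 0.0 is recovered exactly. Exact zero matters for padding and ReLU outputs.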

Edge Deployment

TensorFlow Lite offers optimized performance and wide hardware support for edge deployment, while ONNX Runtime provides greater model interoperability and flexibility across diverse edge devices.

Inference Latency

TensorFlow Lite often achieves lower inference latency on mobile and embedded devices thanks to its compact interpreter and mobile-focused delegates. ONNX Runtime provides competitive latency with broader hardware support across CPUs, GPUs, and specialized accelerators. Actual results depend on the model, the device, and the chosen delegate or execution provider.

Cross-platform Compatibility

TensorFlow Lite runs on many platforms, with particularly strong support for mobile and embedded devices. ONNX Runtime runs across a wide range of hardware and operating systems and accepts models exported from multiple deep learning frameworks.

Hardware Acceleration

TensorFlow Lite leverages specialized hardware acceleration on mobile and edge devices through GPU, NNAPI, and Hexagon DSP support, while ONNX Runtime offers extensive hardware acceleration compatibility across diverse platforms including CUDA, TensorRT, DirectML, and custom accelerators for optimized cross-device AI inference.

Model Conversion

TensorFlow Lite offers straightforward conversion for TensorFlow models through its built-in TFLite converter. ONNX Runtime does not convert models itself. Instead, framework-specific exporters and converters produce standardized .onnx files in the Open Neural Network Exchange (ONNX) format, and ONNX Runtime then executes them.

Operator Coverage

TensorFlow Lite implements a subset of TensorFlow operators as built-in ops. Models that use unsupported operators can fall back to Select TensorFlow ops, at the cost of a larger binary. ONNX Runtime covers the standard ONNX operator sets (opsets). Each release supports opsets up to a specific version, and operator support keeps expanding.
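Before committing to either runtime, it can help to compare the operators a model uses against what the runtime provides. The `coverage_report` helper below is a hypothetical sketch of such a check. Its optional fallback set stands in for mechanisms like Select TensorFlow ops. In practice, the op lists would come from the converter's output or the model file; the names here are made up.

```python
from typing import Dict, Iterable, List


def coverage_report(model_ops: Iterable[str], builtin_ops: Iterable[str],
                    fallback_ops: Iterable[str] = ()) -> Dict[str, List[str]]:
    """Classify a model's op types as builtin, fallback-only, or missing."""
    used = set(model_ops)
    builtin = used & set(builtin_ops)
    fallback = (used - builtin) & set(fallback_ops)
    missing = used - builtin - fallback
    return {"builtin": sorted(builtin), "fallback": sorted(fallback), "missing": sorted(missing)}


# Hypothetical op lists, for illustration only.
report = coverage_report(
    model_ops=["Conv2D", "Relu", "Conv2D", "SomeRareOp", "MyCustomOp"],
    builtin_ops={"Conv2D", "Relu"},
    fallback_ops={"SomeRareOp"},  # e.g. an op reachable only through a fallback path
)
print(report)
# {'builtin': ['Conv2D', 'Relu'], 'fallback': ['SomeRareOp'], 'missing': ['MyCustomOp']}
```

Any entry under "missing" would need a custom op or a model change before deployment. Entries under "fallback" usually work but may cost binary size or speed.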

Mobile Optimization

TensorFlow Lite offers highly efficient mobile optimization with built-in support for hardware acceleration and low-latency inference, while ONNX Runtime provides cross-platform mobile compatibility and flexible optimization tailored for diverse device architectures.

Interoperability

TensorFlow Lite integrates seamlessly with the TensorFlow ecosystem for optimized mobile deployment. ONNX Runtime excels in cross-framework interoperability by running models from many frameworks on many hardware platforms.

Custom Backends

Custom backends in TensorFlow Lite are implemented as delegates through its C/C++ delegate interface, which enables hardware-specific optimizations. ONNX Runtime offers more flexibility through custom execution providers. These plug vendor accelerators into a runtime that is accessible from all of its language bindings across diverse platforms.

TensorFlow Lite vs ONNX Runtime Infographic

njnir.com
