Tensor Cores excel in accelerating matrix operations and deep learning algorithms by performing mixed-precision calculations, significantly boosting AI and machine learning workloads. CUDA Cores handle a wider range of parallel computing tasks, offering versatility for graphics rendering and general-purpose GPU processing. Optimizing performance in complex computing often involves leveraging Tensor Cores for specialized tensor math alongside CUDA Cores for broader parallel execution.

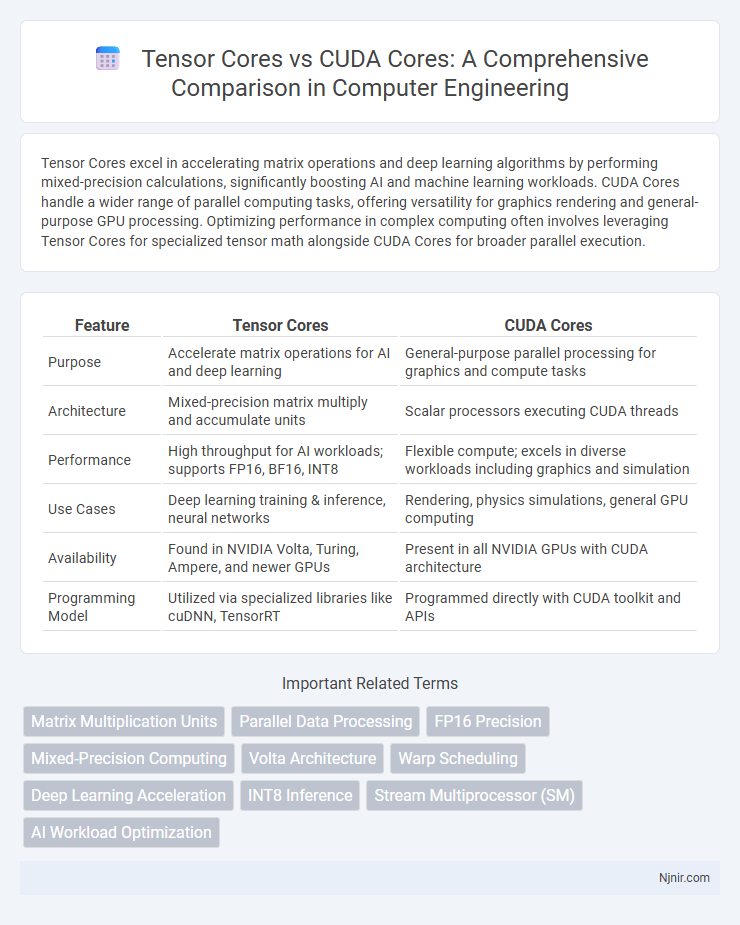

Table of Comparison

| Feature | Tensor Cores | CUDA Cores |

|---|---|---|

| Purpose | Accelerate matrix operations for AI and deep learning | General-purpose parallel processing for graphics and compute tasks |

| Architecture | Mixed-precision matrix multiply and accumulate units | Scalar processors executing CUDA threads |

| Performance | High throughput for AI workloads; supports FP16, BF16, INT8 | Flexible compute; excels in diverse workloads including graphics and simulation |

| Use Cases | Deep learning training & inference, neural networks | Rendering, physics simulations, general GPU computing |

| Availability | Found in NVIDIA Volta, Turing, Ampere, and newer GPUs | Present in all NVIDIA GPUs with CUDA architecture |

| Programming Model | Utilized via specialized libraries like cuDNN, TensorRT | Programmed directly with CUDA toolkit and APIs |

Introduction to Tensor Cores and CUDA Cores

Tensor Cores are specialized processing units designed by NVIDIA to accelerate deep learning and AI workloads by performing mixed-precision matrix multiplications efficiently, significantly enhancing training and inference speed. CUDA Cores serve as the general-purpose parallel processors within NVIDIA GPUs, executing a wide range of arithmetic and logical operations for diverse computational tasks beyond specialized AI. Tensor Cores complement CUDA Cores by offloading tensor operations, enabling higher performance and efficiency in matrix-heavy applications like neural networks.

Architectural Differences Between Tensor and CUDA Cores

Tensor Cores are specialized hardware units designed specifically for accelerating mixed-precision matrix multiplications commonly used in deep learning operations, delivering high throughput for AI workloads. CUDA Cores function as general-purpose parallel processors optimized for a wide range of tasks, including graphics rendering and compute-intensive applications, executing many threads simultaneously. Architecturally, Tensor Cores perform matrix operations at the hardware level using fused multiply-add units for improved efficiency, while CUDA Cores execute scalar operations sequentially or in parallel, offering more flexibility but less specialization.

Role of Tensor Cores in Deep Learning Workloads

Tensor Cores are specialized processing units designed to accelerate matrix multiplications and mixed-precision calculations crucial for deep learning workloads, significantly enhancing training and inference speeds. Unlike CUDA Cores, which perform general-purpose parallel computations, Tensor Cores optimize operations in neural networks such as convolutions and transformations by handling tensor operations at higher throughput. The integration of Tensor Cores in GPUs enables efficient execution of large-scale AI models, reducing computational time and power consumption in tasks like image recognition and natural language processing.

How CUDA Cores Power Traditional GPU Computing

CUDA Cores execute thousands of parallel threads, enabling high-throughput processing for traditional GPU tasks such as graphics rendering and general-purpose computing. They are optimized for diverse workloads including floating-point and integer calculations, making them essential for tasks requiring flexible, fine-grained parallelism. CUDA Cores form the backbone of GPU acceleration in applications like gaming, scientific simulations, and video encoding by efficiently handling complex algorithms and data-intensive operations.

Performance Comparison: Tensor Cores vs CUDA Cores

Tensor Cores deliver significantly higher performance in AI and deep learning workloads by performing mixed-precision matrix multiplications at blazing speeds, surpassing CUDA Cores which are optimized for general-purpose parallel processing tasks. Tensor Cores excel in accelerating neural network training and inference, providing up to 12x faster computation compared to CUDA Cores when executing tensor operations. CUDA Cores remain essential for diverse graphics rendering and compute-intensive applications but lack the specialized hardware acceleration efficiency that Tensor Cores offer for matrix-heavy computations.

Precision Handling: FP16, INT8, and Beyond

Tensor Cores excel in handling mixed-precision formats like FP16 and INT8, enabling faster matrix multiply-accumulate operations critical for AI and deep learning workloads. CUDA Cores primarily support FP32 precision but can handle FP16 with reduced performance, lacking the specialized hardware acceleration found in Tensor Cores. Advances in Tensor Core technology extend precision capabilities beyond FP16 and INT8, including support for BFLOAT16 and INT4, enhancing efficiency in diverse machine learning applications.

Programming Frameworks: Tensor Cores vs CUDA Cores

Tensor Cores leverage programming frameworks such as CUDA, cuDNN, and TensorRT to accelerate mixed-precision matrix operations commonly used in AI and deep learning workloads. CUDA Cores execute traditional parallel compute tasks through the core CUDA programming model, enabling flexible, fine-grained control for a broad range of applications, including graphics and scientific computing. Integration of Tensor Cores within CUDA frameworks allows developers to optimize performance by combining dense tensor computations with standard parallel algorithms.

Application Scenarios and Use Cases

Tensor Cores excel in accelerating matrix multiplications and deep learning workloads, making them ideal for AI model training and inferencing tasks such as neural network operations and scientific simulations. CUDA Cores handle a wide range of parallel computing applications, including graphics rendering, real-time ray tracing, and general-purpose GPU computing like physics simulations and video encoding. Developers leverage Tensor Cores primarily for machine learning frameworks like TensorFlow and PyTorch, while CUDA Cores support broader tasks through NVIDIA's CUDA platform, enabling diverse software optimization across gaming, visualization, and high-performance computing domains.

Limitations and Bottlenecks of Each Core Type

Tensor Cores excel in accelerating matrix multiplications and deep learning tasks but face limitations in supporting a wide range of general-purpose computing operations, restricting their flexibility. CUDA Cores provide broader functionality for diverse parallel computing workloads but can encounter bottlenecks in deep learning training due to lower throughput on matrix operations compared to Tensor Cores. Balancing workloads between Tensor Cores and CUDA Cores is essential to minimize latency and maximize GPU efficiency, especially in mixed-precision computations and large-scale AI inference.

Future Trends in GPU Core Technologies

Tensor Cores are specialized for deep learning and AI workloads, providing immense acceleration in matrix operations compared to traditional CUDA Cores, which excel in general-purpose parallel computing tasks. Emerging GPU architectures increasingly integrate enhanced Tensor Cores with improved precision and energy efficiency, reflecting a trend towards specialized cores optimized for machine learning inference and training. Future GPU core technologies will likely emphasize hybrid designs that balance the computational versatility of CUDA Cores with the high throughput and sparsity support of Tensor Cores to meet rising AI and graphics demands.

Matrix Multiplication Units

Tensor Cores accelerate matrix multiplication by performing mixed-precision operations in parallel, while CUDA Cores handle general-purpose parallel processing tasks including matrix multiplication but at lower efficiency.

Parallel Data Processing

Tensor Cores accelerate parallel matrix calculations for AI workloads, while CUDA Cores handle general parallel processing tasks in GPU architectures.

FP16 Precision

Tensor Cores deliver significantly higher FP16 precision throughput compared to CUDA Cores by performing mixed-precision matrix multiplications optimized for deep learning workloads.

Mixed-Precision Computing

Tensor Cores accelerate mixed-precision computing by performing matrix multiplications and accumulations in lower precision formats like FP16 and INT8 with high throughput, while CUDA Cores handle general-purpose parallel computing tasks primarily in single-precision (FP32).

Volta Architecture

Volta architecture's Tensor Cores accelerate mixed-precision matrix operations for deep learning, delivering up to 12x higher performance than CUDA Cores optimized for general-purpose parallel computation.

Warp Scheduling

Tensor Cores optimize warp scheduling by executing specialized matrix operations within warps, significantly accelerating deep learning tasks compared to the general-purpose CUDA Cores that handle diverse instructions in each warp.

Deep Learning Acceleration

Tensor Cores deliver significantly faster deep learning acceleration compared to CUDA Cores by performing mixed-precision matrix multiplications optimized for neural network training and inference.

INT8 Inference

Tensor Cores deliver up to 12x faster INT8 inference performance than CUDA Cores by enabling mixed-precision matrix multiplication optimized for deep learning workloads.

Stream Multiprocessor (SM)

Tensor Cores in NVIDIA GPUs accelerate AI computations by performing matrix operations within Stream Multiprocessors (SMs) alongside CUDA Cores, which handle general-purpose parallel processing tasks.

AI Workload Optimization

Tensor Cores accelerate AI workload optimization by performing mixed-precision matrix operations up to 12x faster than traditional CUDA Cores, enabling efficient deep learning model training and inference.

Tensor Cores vs CUDA Cores Infographic

njnir.com

njnir.com