Deep Learning Accelerators (DLAs) are specialized hardware designed to optimize neural network computations, offering flexibility across various deep learning models and frameworks. Tensor Processing Units (TPUs), developed by Google, are a type of DLA specifically engineered for high-throughput tensor operations, excelling in large-scale machine learning tasks with energy-efficient performance. TPUs typically deliver superior speed and efficiency for Google's TensorFlow applications, while general DLAs provide broader compatibility and customizable architectures for diverse AI workloads.

Table of Comparison

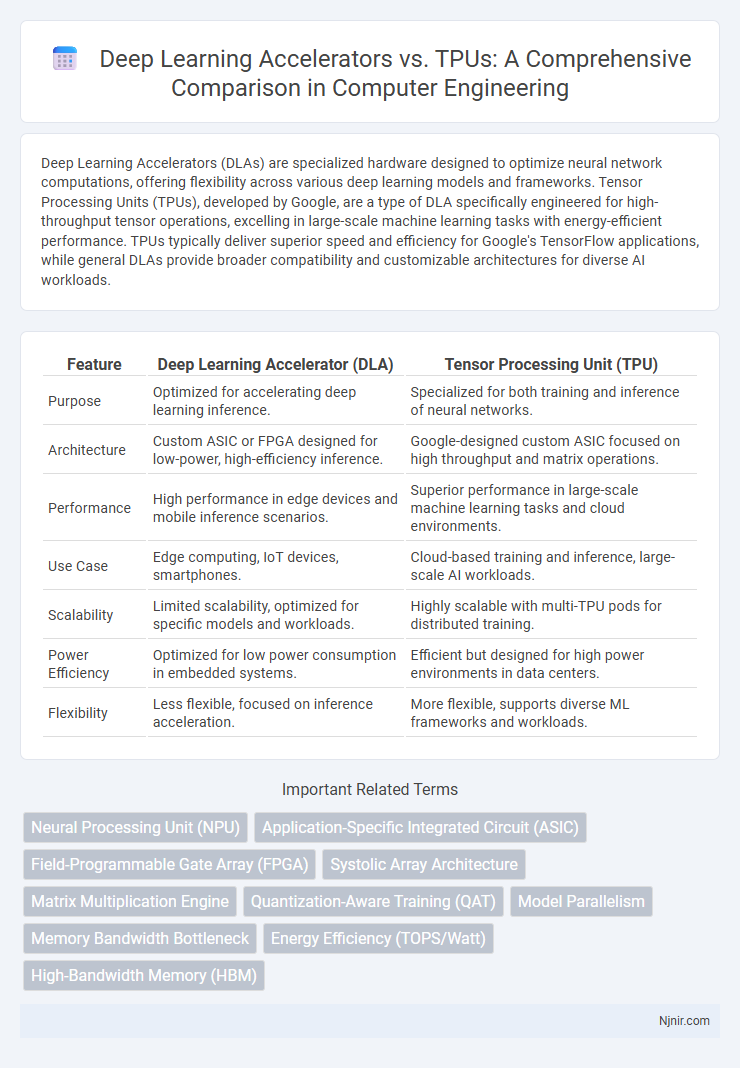

| Feature | Deep Learning Accelerator (DLA) | Tensor Processing Unit (TPU) |
|---|---|---|
| Purpose | Optimized for accelerating deep learning inference. | Specialized for both training and inference of neural networks. |
| Architecture | Custom ASIC or FPGA designed for low-power, high-efficiency inference. | Google-designed custom ASIC focused on high throughput and matrix operations. |
| Performance | High performance in edge devices and mobile inference scenarios. | Superior performance in large-scale machine learning tasks and cloud environments. |
| Use Case | Edge computing, IoT devices, smartphones. | Cloud-based training and inference, large-scale AI workloads. |
| Scalability | Limited scalability, optimized for specific models and workloads. | Highly scalable with multi-TPU pods for distributed training. |
| Power Efficiency | Optimized for low power consumption in embedded systems. | Efficient but designed for high power environments in data centers. |
| Flexibility | Less flexible, focused on inference acceleration. | More flexible, supports diverse ML frameworks and workloads. |

Introduction to Deep Learning Accelerators

Deep Learning Accelerators (DLAs) are specialized hardware designed to optimize neural network computations, enabling faster training and inference compared to traditional CPUs and GPUs. Tensor Processing Units (TPUs) are a specific type of DLA developed by Google, tailored for high-throughput matrix operations and efficient execution of large-scale deep learning models. Both DLAs and TPUs aim to improve performance and energy efficiency in AI workloads by leveraging custom architectures for parallel processing and reduced precision arithmetic.

What is a TPU?

A Tensor Processing Unit (TPU) is a custom-built application-specific integrated circuit (ASIC) developed by Google specifically to accelerate machine learning workloads, particularly deep learning models based on neural networks. Unlike general-purpose Deep Learning Accelerators (DLAs), TPUs are optimized for high-throughput matrix operations, enabling faster training and inference of complex models such as convolutional neural networks (CNNs) and transformers. TPUs deliver significant performance improvements and energy efficiency by leveraging specialized hardware components like systolic arrays and high-bandwidth memory tailored for tensor computations.

Architecture Comparison: General Accelerators vs. TPUs

General deep learning accelerators are often built on programmable architectures such as GPUs or FPGAs, which are optimized for parallel processing and can adapt to a wide range of neural network models. Tensor Processing Units (TPUs), designed by Google, are built around systolic arrays dedicated to matrix multiplication and convolution, which improves throughput and energy efficiency for the dense linear algebra that dominates TensorFlow workloads. While general accelerators prioritize adaptability across diverse AI tasks, TPUs rely on tight hardware-software integration to accelerate training and inference for large-scale models.

Performance Benchmarks in Deep Learning Tasks

Deep Learning Accelerators (DLAs) and Tensor Processing Units (TPUs) can differ significantly on deep learning benchmarks, with TPUs often delivering higher throughput on the matrix multiplication and convolution operations that dominate neural network training and inference. The TPU's systolic array architecture sustains a high rate of operations per second (FLOPS for floating-point work), which shortens training time for large-scale models such as transformers. Metrics such as training time, energy efficiency, and scalability tend to favor TPUs on tasks with very large datasets and complex architectures, as in natural language processing and computer vision, although results depend on the model, batch size, numeric precision, and software stack.

Power Efficiency and Thermal Management

Deep Learning Accelerators (DLAs) are optimized for low power consumption, often featuring customizable architectures that use less energy per inference than traditional GPUs. Tensor Processing Units (TPUs) achieve high power efficiency by integrating specialized matrix multiplication units and extensive on-chip memory, which minimizes data movement and the heat generated under heavy workloads. Effective thermal management is critical for both DLAs and TPUs; data-center TPU designs incorporate cooling solutions such as liquid cooling (introduced with TPU v3), along with techniques such as dynamic voltage scaling, to maintain performance within power constraints.

Scalability and Deployment Scenarios

Deep Learning Accelerators (DLAs) offer flexible scalability across diverse hardware platforms, enabling deployment in edge devices and data centers with varying performance requirements. Tensor Processing Units (TPUs) provide specialized scalability optimized for large-scale cloud environments, delivering high throughput for massive neural network training and inference workloads. Deployment scenarios for DLAs emphasize adaptability and energy efficiency in constrained environments, while TPUs focus on accelerating enterprise-grade AI applications within Google's cloud ecosystem.

Programming Support and Ecosystem

Deep Learning Accelerators (DLAs) offer broad compatibility with common AI frameworks such as TensorFlow and PyTorch, supported by flexible APIs that facilitate custom model development and deployment. Tensor Processing Units (TPUs), developed by Google, are programmed through the XLA compiler, with TensorFlow as the original front end and JAX and PyTorch (via PyTorch/XLA) also supported, and they integrate closely with Google Cloud's AI ecosystem. The TPU ecosystem includes TPU-specific APIs, such as TPUEstimator in TensorFlow 1.x and tf.distribute.TPUStrategy in TensorFlow 2, and TPU-specific libraries, while TPU Pods supply the multi-chip hardware for scalable training and inference; DLAs, by comparison, rely on more general-purpose tooling.

Cost Analysis: TPUs vs. Other Accelerators

TPUs can offer better cost-efficiency for large-scale machine learning tasks because their architecture is specialized for tensor operations and draws less power per operation. For workloads that map well onto them, TPUs can provide higher throughput per dollar than general-purpose deep learning accelerators. Data-center TPUs are offered primarily as a rented Google Cloud service, so the comparison is usually between hourly cloud prices rather than purchase prices, and framework compatibility (TensorFlow, JAX, or PyTorch via XLA) and the cost of porting existing code should be factored into the expected return on investment.
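The throughput-per-dollar comparison can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: the function name, the throughput figures, and the hourly prices are all hypothetical placeholders, not measured or published values.

```python
def samples_per_dollar(samples_per_second, price_per_hour):
    """Return how many training samples one dollar of accelerator time processes."""
    if price_per_hour <= 0:
        raise ValueError("price_per_hour must be positive")
    return samples_per_second * 3600 / price_per_hour


# Hypothetical figures for two rented accelerators (not real prices or benchmarks).
accelerator_a = samples_per_dollar(samples_per_second=1000, price_per_hour=4.0)
accelerator_b = samples_per_dollar(samples_per_second=1500, price_per_hour=8.0)

# Under these made-up numbers, the faster device is the less cost-efficient one.
print(accelerator_a, accelerator_b)
```

The point of the sketch is that raw speed alone does not decide the comparison; the ratio of measured throughput to price does.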

Industry Applications and Use Cases

Deep Learning Accelerators (DLAs) and Tensor Processing Units (TPUs) are specialized hardware designed to optimize AI workloads, with DLAs commonly integrated into edge devices for real-time applications like autonomous vehicles and smart cameras, while TPUs excel in large-scale cloud-based neural network training and inference, notably powering Google's AI services. DLAs focus on low power consumption and efficient processing in constrained environments, making them ideal for industrial automation, robotics, and IoT solutions, whereas TPUs provide massive parallelism and throughput suited for natural language processing, image recognition, and data center AI applications. Both technologies drive innovation across sectors by enabling faster AI computations, but DLAs emphasize edge deployment versatility, and TPUs prioritize high-performance cloud operations.

Future Trends in Deep Learning Hardware

Deep Learning Accelerators (DLAs) and Tensor Processing Units (TPUs) drive innovation in AI by optimizing neural network computations for higher efficiency and lower latency, with TPUs specifically designed by Google for large-scale matrix operations. Future trends point towards increased specialization of hardware, integrating AI-specific instruction sets and tighter hardware-software co-design to support complex model architectures and sparsity. Energy-efficient and scalable solutions, including advancements in mixed-precision computing and optical or neuromorphic components, are expected to dominate deep learning hardware development.

Neural Processing Unit (NPU)

Neural Processing Units (NPUs) in Deep Learning Accelerators optimize AI workloads by providing efficient, parallel processing tailored for neural network operations, whereas TPUs are specialized ASICs designed by Google specifically to accelerate tensor computations within machine learning models.

Application-Specific Integrated Circuit (ASIC)

TPUs are specialized ASICs designed by Google specifically for accelerating deep learning tasks, offering optimized performance and efficiency compared to general-purpose deep learning accelerators.

Field-Programmable Gate Array (FPGA)

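Field-Programmable Gate Arrays (FPGAs) offer reconfigurable deep learning acceleration that can achieve low, predictable latency for small-batch inference and allows hardware to be tailored to diverse neural network architectures; fixed-function ASICs such as TPUs generally achieve higher throughput and energy efficiency on the dense matrix workloads they target.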

Systolic Array Architecture

TPUs utilize a specialized systolic array architecture to efficiently perform matrix multiplications in deep learning workloads, offering higher throughput and energy efficiency compared to general-purpose deep learning accelerators.
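To illustrate how a systolic array computes a matrix product, the following is a minimal software simulation. It assumes an output-stationary dataflow, in which each processing element (PE) keeps one output value while operands stream past it in a skewed wavefront; this choice is made for simplicity and is not a model of any particular TPU generation. The function name `systolic_matmul` is hypothetical.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    PE (i, j) accumulates output element (i, j). Rows of `a` enter from the
    left and columns of `b` enter from the top, each delayed by one cycle per
    row or column, so operand pair k reaches PE (i, j) at cycle i + j + k.
    """
    m, k_dim, n = len(a), len(b), len(b[0])
    assert all(len(row) == k_dim for row in a), "inner dimensions must match"
    acc = [[0] * n for _ in range(m)]      # one accumulator per PE
    cycles = m + n + k_dim - 2             # cycles until the last PE finishes
    for t in range(cycles):
        for i in range(m):
            for j in range(n):
                k = t - i - j              # operand pair arriving at PE (i, j) now
                if 0 <= k < k_dim:
                    acc[i][j] += a[i][k] * b[k][j]
    return acc, cycles


result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, cycles)
```

In hardware, all PEs work in the same cycle, so the two inner loops run in parallel and the whole product takes roughly m + n + k cycles rather than m * n * k sequential multiply-accumulate steps.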

Matrix Multiplication Engine

TPUs feature a specialized Matrix Multiply Unit with systolic arrays optimized for high-throughput, energy-efficient matrix multiplications, outperforming general deep learning accelerators in large-scale tensor operations.

Quantization-Aware Training (QAT)

Quantization-Aware Training (QAT) simulates low-precision arithmetic during model training so that the model keeps its accuracy when deployed with integer weights and activations, improving performance and power efficiency on Deep Learning Accelerators; inference-oriented TPUs such as the first-generation TPU and the Edge TPU execute the resulting 8-bit integer models natively, which speeds up integer operations and reduces inference latency.
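The "simulated low-precision arithmetic" at the heart of QAT is usually a quantize-then-dequantize step applied in the forward pass. The sketch below shows that step alone, assuming symmetric per-tensor quantization to signed integers; the function name `fake_quantize` is hypothetical, and the gradient handling that a real training framework adds is omitted.

```python
def fake_quantize(values, num_bits=8):
    """Quantize floats to signed integers, then map them back to floats.

    Returns the integer codes, the dequantized floats the rest of the network
    would see during training, and the scale factor used.
    """
    qmax = 2 ** (num_bits - 1) - 1                 # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the representable range.
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    dequantized = [c * scale for c in codes]
    return codes, dequantized, scale


weights = [-1.0, 0.0, 0.25, 1.0]
codes, approx, scale = fake_quantize(weights)
print(codes)   # integer codes an int8 accelerator would store
print(approx)  # slightly perturbed weights the model trains against
```

Because the model is trained against the perturbed values, it learns weights that tolerate the rounding error it will encounter on integer hardware.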

Model Parallelism

TPUs offer optimized hardware support for model parallelism by efficiently distributing large neural networks across multiple cores, whereas general deep learning accelerators may require more manual optimization to achieve similar scalability.
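One common form of model parallelism splits a layer's weight matrix by columns so that each core holds and computes only a slice of the output. The sketch below imitates this on a single machine with plain Python lists; the helper names are hypothetical, and the "cores" are just loop iterations rather than real devices.

```python
def matvec(x, w):
    """Compute x @ w for a vector x and a matrix w stored as a list of rows."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, num_shards):
    """Split matrix w into num_shards equal-width column blocks."""
    cols = len(w[0])
    assert cols % num_shards == 0, "columns must divide evenly across shards"
    width = cols // num_shards
    return [[row[s * width:(s + 1) * width] for row in w] for s in range(num_shards)]


def model_parallel_matvec(x, w, num_shards):
    """Compute x @ w by giving each shard its own column block of w."""
    shards = split_columns(w, num_shards)
    partial = [matvec(x, shard) for shard in shards]  # each would run on its own core
    return [v for part in partial for v in part]      # gather the output slices


x = [1, 2]
w = [[1, 2, 3, 4], [5, 6, 7, 8]]
print(model_parallel_matvec(x, w, num_shards=2))
```

Each shard needs the full input vector but only its own slice of the weights, which is what allows a layer too large for one core's memory to be spread across several.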

Memory Bandwidth Bottleneck

Deep Learning Accelerators often face memory bandwidth bottlenecks limiting performance, whereas TPUs utilize high-bandwidth memory architectures and specialized data flow designs to significantly alleviate these constraints.
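A simple way to reason about this bottleneck is the roofline model, which bounds attainable throughput by the smaller of the compute peak and the memory bandwidth multiplied by the workload's arithmetic intensity (operations per byte moved). The sketch below applies that bound; the function name and all hardware figures are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def attainable_tops(peak_tops, bandwidth_gb_s, ops_per_byte):
    """Roofline bound: min(compute peak, memory bandwidth * arithmetic intensity)."""
    # GB/s * ops/byte gives giga-ops per second; divide by 1000 for tera-ops.
    memory_bound_tops = bandwidth_gb_s * ops_per_byte / 1000.0
    return min(peak_tops, memory_bound_tops)


# Hypothetical accelerator: 100 TOPS peak compute, 500 GB/s memory bandwidth.
low_intensity = attainable_tops(100.0, 500.0, ops_per_byte=10.0)    # memory-bound
high_intensity = attainable_tops(100.0, 500.0, ops_per_byte=400.0)  # compute-bound
print(low_intensity, high_intensity)
```

With these made-up numbers, a workload performing 10 operations per byte can use only a small fraction of the compute peak, which is why higher-bandwidth memory and dataflows that reuse operands on-chip matter as much as raw arithmetic capacity.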

Energy Efficiency (TOPS/Watt)

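Energy efficiency is commonly expressed in TOPS/Watt, the number of trillions of operations per second delivered per watt of power. Published figures vary widely with numeric precision, process node, and workload, so they are only comparable when measured under the same conditions; as one reference point, Google rates its Edge TPU at 4 TOPS using about 2 W, or 2 TOPS/Watt.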
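The TOPS/Watt metric itself is just throughput divided by power draw. The sketch below computes it; the function name is hypothetical, and the sample input (4 trillion operations per second at 2 W) corresponds to the rating Google publishes for its Edge TPU and is used here only as an illustration.

```python
def tops_per_watt(ops_per_second, watts):
    """Return efficiency in tera-operations per second per watt."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return ops_per_second / 1e12 / watts


print(tops_per_watt(ops_per_second=4e12, watts=2.0))
```

When comparing devices, the operation count should refer to the same numeric precision (for example, 8-bit integer) on both sides, since lower precision inflates the figure.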

High-Bandwidth Memory (HBM)

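High-Bandwidth Memory (HBM) is stacked DRAM placed close to the processor, delivering much higher data transfer rates than conventional DDR memory. Data-center accelerators, including TPUs from the second generation onward, use HBM to keep their compute units supplied with data, improving neural network training speed and efficiency.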

njnir.com
