MLIR (Multi-Level Intermediate Representation) is a subproject of LLVM that provides a flexible, extensible compiler infrastructure, motivated largely by machine learning workloads and domain-specific optimization. LLVM IR sits at a single, low level of abstraction close to machine code, whereas MLIR lets several levels of abstraction coexist, which makes high-level operations easier to represent and transform. Lowering progressively through those levels helps when optimizing and generating code for heterogeneous hardware in computer engineering applications.

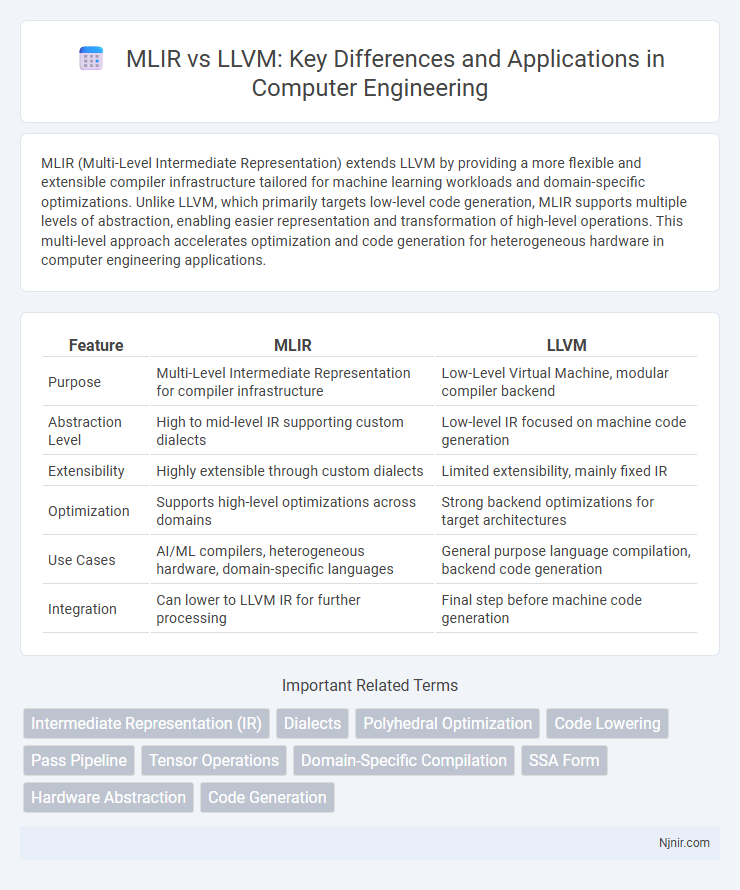

Table of Comparison

| Feature | MLIR | LLVM |
|---|---|---|
| Purpose | Multi-level intermediate representation and infrastructure for building compilers | Modular compiler infrastructure built around a single low-level IR (the name is no longer treated as an acronym) |
| Abstraction Level | High- to low-level IRs expressed as dialects, including custom ones | Low-level IR close to machine code |
| Extensibility | Highly extensible through custom dialects | Largely fixed instruction set, extended mainly through intrinsics and metadata |
| Optimization | High-level optimizations across domains | Strong target-independent and backend optimizations |
| Use Cases | AI/ML compilers, heterogeneous hardware, domain-specific languages | General-purpose language compilation, backend code generation |
| Integration | Can lower to LLVM IR for further processing | Usually the last IR stage before machine code generation |

Introduction to MLIR and LLVM

MLIR (Multi-Level Intermediate Representation) is designed to improve compiler infrastructure by providing extensible, reusable intermediate representations, with machine learning and domain-specific code generation as its main motivating uses. LLVM (originally short for Low Level Virtual Machine, though the project no longer treats the name as an acronym) is a mature compiler framework widely used for code optimization and generation across diverse hardware architectures. While LLVM focuses on low-level optimizations and backend code generation, MLIR introduces multiple abstraction levels, so high-level optimizations can run on domain-specific dialects before the program is lowered to LLVM IR.

Core Concepts: MLIR and LLVM Architectures

MLIR features a flexible, extensible architecture that supports multiple abstraction levels through dialects, including custom ones, and can target heterogeneous hardware. LLVM centers on a single, low-level intermediate representation designed for optimization and code generation across diverse platforms. The two are complementary: MLIR transforms high-level abstractions step by step into LLVM IR, and LLVM's backends take over from there, which helps compiler development scale to complex languages.

Design Goals: Modular vs. Monolithic Approaches

MLIR emphasizes a modular design with reusable, extensible components to facilitate domain-specific compiler development and support multiple intermediate representations. LLVM is itself organized as a set of reusable libraries, but its passes all operate on one fixed IR, so at the level of representation it is the more monolithic of the two: a unified infrastructure primarily targeting low-level code generation and optimization. The contrast highlights MLIR's flexibility in handling diverse compiler workflows compared to LLVM's focus on a streamlined, cohesive pipeline.

Intermediate Representation: Abstraction Levels

MLIR and LLVM differ primarily in their intermediate representation (IR) abstraction levels, with MLIR supporting multiple, extensible IR dialects tailored to various domains, enabling high-level optimizations and flexible transformations. LLVM, by contrast, employs a single, low-level IR focused on hardware-level code generation and optimization, limiting abstraction to machine operations. MLIR's multi-level IR hierarchy allows seamless lowering from high-level to machine-specific representations, enhancing modularity and reuse in compiler design.

Extensibility and Custom Dialects

MLIR (Multi-Level Intermediate Representation) surpasses LLVM in extensibility by supporting custom dialects that allow domain-specific optimizations and transformations tailored to diverse hardware and application needs. While LLVM focuses on low-level code generation and optimization, MLIR's modular design enables developers to define specialized operations and types, facilitating seamless integration with various frontends and backends. This extensibility makes MLIR particularly effective for machine learning compilers and heterogeneous computing environments requiring flexible intermediate representations.
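
The idea of a dialect as a namespace of operations can be sketched in a few lines of Python. This is a toy model only: the `Dialect` and `Context` classes below are hypothetical and are not MLIR's Python bindings, and the `dsp` dialect with its `fft` operation is invented for illustration. The `arith.addf` name is chosen to resemble an operation from MLIR's `arith` dialect.

```python
from dataclasses import dataclass, field


@dataclass
class Dialect:
    """A namespace of operation names (toy stand-in for a dialect)."""
    name: str
    ops: set = field(default_factory=set)

    def register_op(self, op_name: str) -> str:
        # Record the op and return its qualified "<dialect>.<op>" name.
        self.ops.add(op_name)
        return f"{self.name}.{op_name}"


class Context:
    """Holds the dialects that are currently loaded."""

    def __init__(self):
        self.dialects = {}

    def load(self, dialect: Dialect) -> None:
        self.dialects[dialect.name] = dialect

    def is_known(self, qualified_name: str) -> bool:
        # Split "<dialect>.<op>" at the first dot and look both parts up.
        dialect_name, _, op_name = qualified_name.partition(".")
        dialect = self.dialects.get(dialect_name)
        return dialect is not None and op_name in dialect.ops


ctx = Context()

# A general-purpose dialect, named after MLIR's arith dialect.
arith = Dialect("arith")
arith.register_op("addf")
ctx.load(arith)

# A hypothetical domain-specific dialect added by a compiler author.
dsp = Dialect("dsp")
dsp.register_op("fft")
ctx.load(dsp)

print(ctx.is_known("dsp.fft"))   # True
print(ctx.is_known("dsp.conv"))  # False
```

The point of the sketch is that adding `dsp` required no change to `Context` or to `arith`; extending the set of operations is a matter of loading another dialect rather than modifying a fixed instruction set.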

Performance Optimization Capabilities

MLIR's optimization advantage comes from keeping high-level structure, such as loop nests and tensor operations, visible in the IR long enough to transform it. This exposes parallelism and hardware-specific mappings that are hard to recover once a program has been reduced to LLVM's single low-level IR. Its extensible infrastructure supports custom transformations and dialects, allowing finer-grained, target-specific optimizations that can improve runtime speed. LLVM excels in low-level optimization and code generation for mature targets, and it benefits from MLIR's higher abstraction layers when complex workloads must be optimized across heterogeneous systems.

Use Cases in Computer Engineering

MLIR (Multi-Level Intermediate Representation) enhances compiler infrastructure by enabling customizable and reusable compiler components, making it ideal for complex machine learning frameworks and domain-specific optimizations in computer engineering. LLVM excels in general-purpose compiler backend tasks, providing robust code generation and optimization for a wide range of hardware architectures, including CPUs, GPUs, and embedded systems. Use cases of MLIR include accelerating AI model compilation and hardware-specific tuning, while LLVM is widely used for CPU instruction set optimization, embedded system development, and supporting multiple programming languages.

Integration within Compiler Toolchains

MLIR offers a multi-level intermediate representation designed for easy extensibility and integration within compiler toolchains, enabling seamless transformations across diverse domains. LLVM provides a robust, low-level intermediate representation widely used for backend optimization and code generation, forming the foundation for many compiler frameworks. Integration of MLIR with LLVM allows leveraging MLIR's high-level abstractions while benefiting from LLVM's mature optimization passes and target support.

Community Support and Ecosystem Evolution

MLIR benefits from a rapidly growing community driven by its modular design, attracting contributors focused on domain-specific optimizations and hardware acceleration, while LLVM boasts a mature and extensive ecosystem backed by decades of development and widespread industry adoption. LLVM's robust tooling, comprehensive library support, and broad compiler front-end integrations provide a stable foundation, whereas MLIR's flexible intermediate representation fosters experimentation with novel compilation techniques and cross-domain interoperability. Both projects leverage active open-source communities, but LLVM's entrenched presence ensures a richer repository of resources and industry-backed extensions.

Future Trends: MLIR and LLVM in Next-Gen Computing

MLIR (Multi-Level Intermediate Representation) and LLVM are evolving to address the complex requirements of next-gen computing by enabling more flexible and extensible compiler infrastructures. MLIR's multi-level abstraction supports diverse hardware targets and domain-specific optimizations, complementing LLVM's mature backend capabilities to improve performance and portability. Future trends emphasize tighter integration of MLIR within LLVM workflows to accelerate AI, machine learning, and heterogeneous computing workloads.

Intermediate Representation (IR)

MLIR does not extend LLVM IR itself; it is a separate IR framework within the LLVM project that supports multiple levels of abstraction and domain-specific dialects, with LLVM IR available as one of its lowering targets.

Dialects

MLIR's extensible dialect framework enables customized intermediate representations tailored for domain-specific optimizations, whereas LLVM primarily offers a fixed, low-level IR focused on general-purpose code generation.

Polyhedral Optimization

MLIR supports polyhedral-style optimization through a higher-level representation of loops, notably its affine dialect, which keeps loop bounds and memory accesses in a form suited to dependence analysis and loop transformations; the transformed loops are then lowered to LLVM for low-level code generation.
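
Loop tiling is a typical transformation in this family. The sketch below is written in plain Python rather than in any MLIR dialect, and the function names are chosen for illustration; it only shows that reordering the iteration space into tiles leaves the result unchanged.

```python
def matmul(a, b, n):
    """Reference triple loop: c = a * b for n x n matrices."""
    c = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_tiled(a, b, n, tile):
    """Same computation with each loop split into tile-sized chunks."""
    c = [[0] * n for _ in range(n)]
    # Outer loops walk over tile origins.
    for ii in range(0, n, tile):
        for jj in range(0, n, tile):
            for kk in range(0, n, tile):
                # Inner loops stay within one tile; min() handles the
                # partial tiles at the edges when tile does not divide n.
                for i in range(ii, min(ii + tile, n)):
                    for j in range(jj, min(jj + tile, n)):
                        for k in range(kk, min(kk + tile, n)):
                            c[i][j] += a[i][k] * b[k][j]
    return c


n = 5
a = [[i + j for j in range(n)] for i in range(n)]
b = [[i * j + 1 for j in range(n)] for i in range(n)]

print(matmul(a, b, n) == matmul_tiled(a, b, n, tile=2))  # True
```

A compiler can only apply this rewrite safely if it can prove the reordering respects data dependences, which is why a representation that keeps loop bounds and subscripts analyzable matters.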

Code Lowering

MLIR enhances code lowering by providing a flexible, multi-level intermediate representation that simplifies transforming high-level abstractions into LLVM's low-level IR for optimized machine code generation.
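
Progressive lowering can be illustrated with a toy three-level IR. Everything here is an assumption made for the example: the `toy.*` operation names, the tuple encoding, and the unrolling strategy are hypothetical and do not correspond to real MLIR dialects or passes.

```python
def lower_tensor_to_loops(op):
    """Level 1 -> 2: rewrite an elementwise tensor add as an explicit loop."""
    kind, dst, lhs, rhs, size = op
    assert kind == "toy.tensor_add"
    return ("toy.for", size, [("toy.scalar_add", dst, lhs, rhs)])


def lower_loops_to_scalar(op):
    """Level 2 -> 3: unroll a fixed-size loop into indexed scalar ops."""
    kind, size, body = op
    assert kind == "toy.for"
    return [(name, dst, lhs, rhs, i)
            for i in range(size)
            for (name, dst, lhs, rhs) in body]


def run_scalar(ops, memory):
    """Interpret the lowest level on a dict of named Python lists."""
    for name, dst, lhs, rhs, i in ops:
        assert name == "toy.scalar_add"
        memory[dst][i] = memory[lhs][i] + memory[rhs][i]
    return memory


# One high-level op: c = a + b over 3 elements.
high_level = ("toy.tensor_add", "c", "a", "b", 3)
loops = lower_tensor_to_loops(high_level)
scalar_ops = lower_loops_to_scalar(loops)

memory = {"a": [1, 2, 3], "b": [10, 20, 30], "c": [0, 0, 0]}
run_scalar(scalar_ops, memory)
print(memory["c"])  # [11, 22, 33]
```

Each lowering step is small and only needs to understand the level directly above it, which is the property that makes a multi-level design easier to extend than a single jump from source to low-level IR.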

Pass Pipeline

MLIR pass pipelines can be customized and nested so that passes run on specific operations at different levels of abstraction, which gives more flexibility than LLVM's pass pipeline over a single IR.
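
A pass pipeline is, at its core, an ordered list of IR-to-IR functions. The following is a minimal sketch under that assumption; `ToyPassManager` and the tuple-based IR are hypothetical and much simpler than the pass managers in MLIR or LLVM.

```python
class ToyPassManager:
    """Runs a sequence of passes, each mapping a list of ops to a new list."""

    def __init__(self):
        self.passes = []

    def add(self, pass_fn):
        self.passes.append(pass_fn)
        return self  # allow chaining

    def run(self, ops):
        for pass_fn in self.passes:
            ops = pass_fn(ops)
        return ops


def constant_fold(ops):
    """Replace an add of two known constants with a single constant."""
    consts = {}
    out = []
    for res, name, args in ops:
        if name == "const":
            consts[res] = args[0]
        elif name == "add" and all(a in consts for a in args):
            value = consts[args[0]] + consts[args[1]]
            consts[res] = value
            name, args = "const", (value,)
        out.append((res, name, args))
    return out


def dead_code_elimination(ops):
    """Drop ops whose results are never used; 'return' is always kept."""
    live = set()
    kept = []
    for res, name, args in reversed(ops):
        if name == "return" or res in live:
            kept.append((res, name, args))
            live.update(a for a in args if isinstance(a, str))
    return list(reversed(kept))


# Each op is (result_name, op_name, operands).
program = [
    ("%0", "const", (2,)),
    ("%1", "const", (3,)),
    ("%2", "add", ("%0", "%1")),
    (None, "return", ("%2",)),
]

pm = ToyPassManager().add(constant_fold).add(dead_code_elimination)
optimized = pm.run(program)
print(optimized)  # [('%2', 'const', (5,)), (None, 'return', ('%2',))]
```

Order matters here: running dead code elimination before constant folding would leave the two original constants in place, because the `add` would still be using them.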

Tensor Operations

MLIR represents tensor operations directly as high-level operations in a flexible, extensible infrastructure, so they can be optimized and transformed as whole-tensor computations, whereas in LLVM IR they have already been reduced to loops and scalar instructions.
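
One optimization that becomes straightforward at the tensor level is fusing consecutive elementwise operations so that no intermediate tensor is materialized. The sketch below uses plain Python lists in place of tensors, and its function names are illustrative only.

```python
def scale(xs, factor):
    """Elementwise multiply; produces a full intermediate list."""
    return [x * factor for x in xs]


def shift(xs, offset):
    """Elementwise add; reads the intermediate list back."""
    return [x + offset for x in xs]


def scale_then_shift_fused(xs, factor, offset):
    """Fused form: one traversal, no intermediate list."""
    return [x * factor + offset for x in xs]


data = [1.0, 2.0, 3.0]
unfused = shift(scale(data, 2.0), 1.0)
fused = scale_then_shift_fused(data, 2.0, 1.0)
print(unfused == fused)  # True
```

Recognizing that two operations are elementwise over the same shape is trivial when they are still single tensor operations, and much harder once each has been expanded into its own loop.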

Domain-Specific Compilation

MLIR enhances domain-specific compilation by enabling customizable intermediate representations tailored to diverse hardware and software environments, while LLVM provides a robust, low-level infrastructure optimized for general-purpose code generation.

SSA Form

MLIR, like LLVM IR, is based on SSA form, but it replaces phi nodes with block arguments and adds nested regions, so the same SSA machinery applies at every level of abstraction and across diverse domains.
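
One concrete difference in how the two IRs express SSA is that LLVM IR merges values from different predecessors with phi nodes, while MLIR passes them as block arguments supplied by each branch. The toy conversion below shows the correspondence; the dictionary-based control-flow graph and the function name are assumptions made for this sketch, not a real API.

```python
def phis_to_block_arguments(blocks):
    """Turn per-block phi nodes into block arguments plus branch operands.

    Returns (block_args, branch_operands), where block_args maps a block
    label to its argument names and branch_operands maps a
    (predecessor, successor) edge to the values passed along that edge.
    """
    block_args = {}
    branch_operands = {}
    for label, block in blocks.items():
        phis = block["phis"]
        # Each phi result becomes one argument of the block.
        block_args[label] = [result for result, _ in phis]
        # Each incoming value moves onto the branch from its predecessor.
        for _, incoming in phis:
            for pred, value in incoming.items():
                branch_operands.setdefault((pred, label), []).append(value)
    return block_args, branch_operands


# Phi-style CFG: %x is %a when coming from "then" and %b from "else".
cfg = {
    "entry": {"phis": []},
    "then": {"phis": []},
    "else": {"phis": []},
    "merge": {"phis": [("%x", {"then": "%a", "else": "%b"})]},
}

block_args, branch_operands = phis_to_block_arguments(cfg)
print(block_args["merge"])                  # ['%x']
print(branch_operands[("then", "merge")])   # ['%a']
print(branch_operands[("else", "merge")])   # ['%b']
```

In the block-argument form, a branch reads like a function call that passes values to its target, which avoids the special placement rules that phi nodes need at the top of a block.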

Hardware Abstraction

MLIR provides a more flexible and extensible hardware abstraction framework than LLVM by supporting multiple intermediate representations tailored for diverse hardware accelerators.

Code Generation

MLIR enables modular, reusable compiler infrastructure with high-level optimizations, improving code generation by providing extensible intermediate representations that integrate seamlessly with LLVM's low-level backend for optimized machine code emission.

njnir.com