Batch processing involves executing large volumes of data at scheduled intervals, optimizing throughput and resource utilization for scenarios where real-time insights are not critical. Stream processing handles data continuously and in real-time, enabling immediate analysis and response for time-sensitive applications such as fraud detection or monitoring. Choosing between batch and stream processing depends on factors like latency requirements, data velocity, and system complexity.

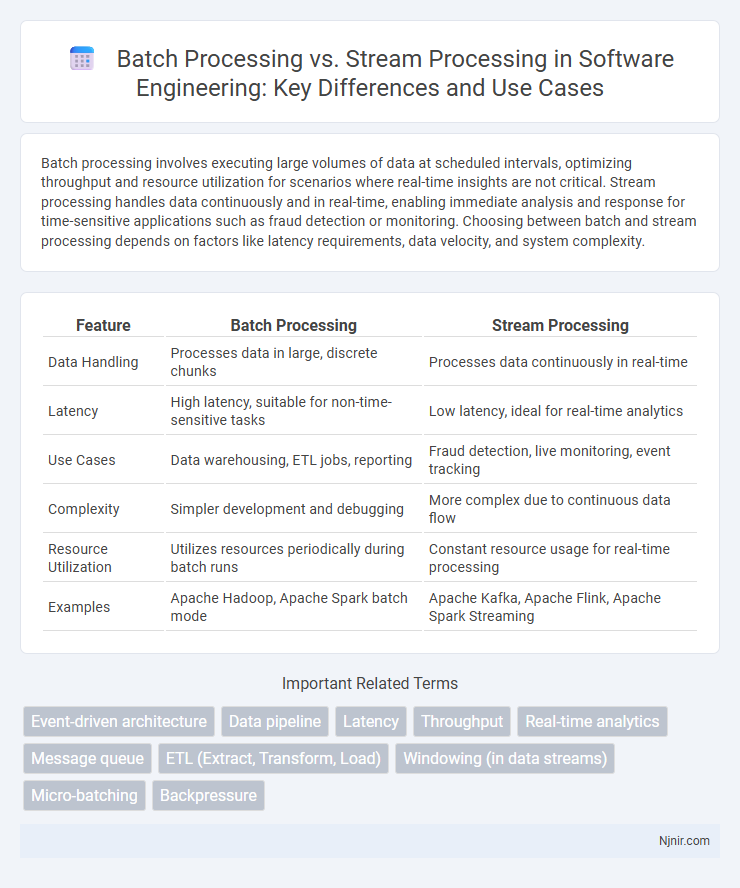

Table of Comparison

| Feature | Batch Processing | Stream Processing |

|---|---|---|

| Data Handling | Processes data in large, discrete chunks | Processes data continuously in real-time |

| Latency | High latency, suitable for non-time-sensitive tasks | Low latency, ideal for real-time analytics |

| Use Cases | Data warehousing, ETL jobs, reporting | Fraud detection, live monitoring, event tracking |

| Complexity | Simpler development and debugging | More complex due to continuous data flow |

| Resource Utilization | Utilizes resources periodically during batch runs | Constant resource usage for real-time processing |

| Examples | Apache Hadoop, Apache Spark batch mode | Apache Kafka, Apache Flink, Apache Spark Streaming |

Introduction to Batch Processing and Stream Processing

Batch processing involves collecting and storing data over a period, then processing it as a single, large dataset to achieve efficient throughput and scalability in tasks like billing or report generation. Stream processing handles data in real-time, continuously analyzing and reacting to events as they occur, which is essential for applications requiring low latency such as fraud detection or live analytics. Both methods offer distinct advantages based on data velocity and processing requirements, making them fundamental to big data and real-time computing ecosystems.

Core Differences Between Batch and Stream Processing

Batch processing handles large volumes of data collected over time and processes them as a single unit, emphasizing throughput and latency tolerance. Stream processing manages continuous, real-time data flows, prioritizing low-latency and immediate insights. The core difference lies in batch processing's discrete data sets with delay tolerance versus stream processing's real-time event handling for instantaneous analytics.

Common Use Cases for Batch Processing

Batch processing is commonly used for large-scale data transformations, data warehousing, and offline analytics where processing can be scheduled during off-peak hours. Industries such as finance and retail utilize batch jobs for end-of-day transaction processing, payroll calculations, and inventory updates. This method excels when dealing with massive datasets that do not require real-time insights but demand high throughput and system resource optimization.

Typical Applications of Stream Processing

Stream processing is commonly used in real-time analytics for monitoring financial transactions, detecting fraud, and managing network security events. It supports applications requiring immediate data insights, such as IoT device monitoring, live social media feeds, and dynamic recommendation engines. Systems like Apache Kafka and Apache Flink enable continuous data ingestion and low-latency processing crucial for these real-time applications.

Architecture and System Design Considerations

Batch processing architecture typically involves collecting and storing large volumes of data before processing them in scheduled jobs, optimizing resource utilization and throughput for high-latency, compute-intensive tasks. Stream processing systems require real-time data ingestion with low-latency event processing, necessitating architecture that supports continuous data flow, fault tolerance, and stateful computations distributed across nodes. System design considerations include data velocity, scalability, fault tolerance mechanisms, and consistency models, where batch architectures favor eventual consistency and stream architectures often require strong consistency guarantees for real-time analytics.

Performance and Scalability Comparison

Batch processing handles large volumes of data in scheduled intervals, enabling efficient resource utilization but introducing latency due to processing delays. Stream processing processes data in real-time, offering low-latency responses and immediate insights but requiring robust infrastructure to handle continuous data flow. Scalability in batch systems depends on job size and available resources, while stream processing demands horizontal scalability with distributed architectures to manage high-throughput, low-latency workloads efficiently.

Data Consistency and Fault Tolerance

Batch processing ensures strong data consistency by processing large volumes of data in discrete chunks, making it easier to detect and correct errors before completion. Stream processing handles real-time data flows with built-in fault tolerance mechanisms like checkpointing and state replication, enabling continuous data consistency despite failures. Both methods implement different strategies to balance latency, consistency, and recovery, with batch prioritizing accuracy and stream focusing on availability.

Tools and Frameworks: Batch vs. Stream

Apache Hadoop and Apache Spark are leading tools for batch processing, designed to handle large volumes of static data with high fault tolerance and scalability. For stream processing, Apache Kafka Streams and Apache Flink stand out, offering low-latency, real-time data processing with event-driven architectures. Both categories leverage distributed computing but differ fundamentally in processing models, with batch focusing on complete datasets and stream emphasizing continuous data flow.

Choosing the Right Processing Paradigm

Selecting the optimal processing paradigm depends on data velocity, volume, and latency requirements; batch processing excels with large, static datasets and high throughput needs, while stream processing supports real-time analytics and immediate event handling. Batch systems like Apache Hadoop provide cost-effective, fault-tolerant solutions for complex computations, whereas stream platforms such as Apache Kafka and Apache Flink enable continuous, low-latency data ingestion and processing. Evaluating use cases based on processing delay tolerance, data freshness, and system scalability ensures effective alignment between business objectives and processing methodologies.

Future Trends in Data Processing Technologies

Future trends in data processing technologies emphasize the integration of batch processing and stream processing into unified platforms that support real-time analytics alongside large-scale historical data analysis. Advancements in distributed computing frameworks such as Apache Flink and Apache Spark are driving the evolution toward hybrid architectures that dynamically switch between batch and stream modes depending on workload characteristics. The increasing adoption of edge computing and AI-powered data pipelines enhances the scalability and responsiveness of data systems, enabling proactive decision-making in industries like finance, healthcare, and IoT.

Event-driven architecture

Event-driven architecture leverages stream processing for real-time data analysis and responsiveness, while batch processing suits large-scale, scheduled data workloads with latency tolerance.

Data pipeline

Batch processing in data pipelines handles large volumes of data at scheduled intervals for comprehensive analysis, while stream processing manages real-time data flows to enable immediate insights and faster decision-making.

Latency

Batch processing incurs high latency due to data accumulation and periodic execution, whereas stream processing minimizes latency by analyzing data in real-time as it arrives.

Throughput

Batch processing achieves higher throughput by processing large volumes of data in scheduled intervals, while stream processing handles continuous data with lower latency but generally lower throughput.

Real-time analytics

Stream processing enables real-time analytics by continuously ingesting and analyzing data streams, whereas batch processing analyzes large data sets at scheduled intervals, resulting in higher latency.

Message queue

Message queues efficiently support both batch processing for high-throughput data handling and stream processing for real-time event-driven applications by enabling scalable, reliable message delivery.

ETL (Extract, Transform, Load)

Batch processing handles large volumes of ETL data at scheduled intervals for comprehensive transformation and loading, while stream processing executes real-time ETL on continuous data flows for immediate insights and updates.

Windowing (in data streams)

Windowing in stream processing enables real-time data aggregation over defined time intervals, contrasting with batch processing that analyzes large, static datasets after collection.

Micro-batching

Micro-batching in stream processing divides data into small batches to combine the efficiency of batch processing with the low latency of real-time data analysis.

Backpressure

Backpressure in stream processing dynamically manages data flow to prevent system overload, while batch processing inherently avoids backpressure by processing fixed data chunks sequentially.

batch processing vs stream processing Infographic

njnir.com

njnir.com