Deep learning, a subset of machine learning, uses neural networks with multiple layers to automatically extract features from complex biomedical data, which can enable more accurate diagnosis and disease prediction. Traditional machine learning techniques often require manual feature extraction and domain expertise, which can limit their performance on large, unstructured biomedical datasets. In biomedical engineering, deep learning models frequently outperform traditional machine learning algorithms on high-dimensional data such as medical images and genomic sequences.

Table of Comparison

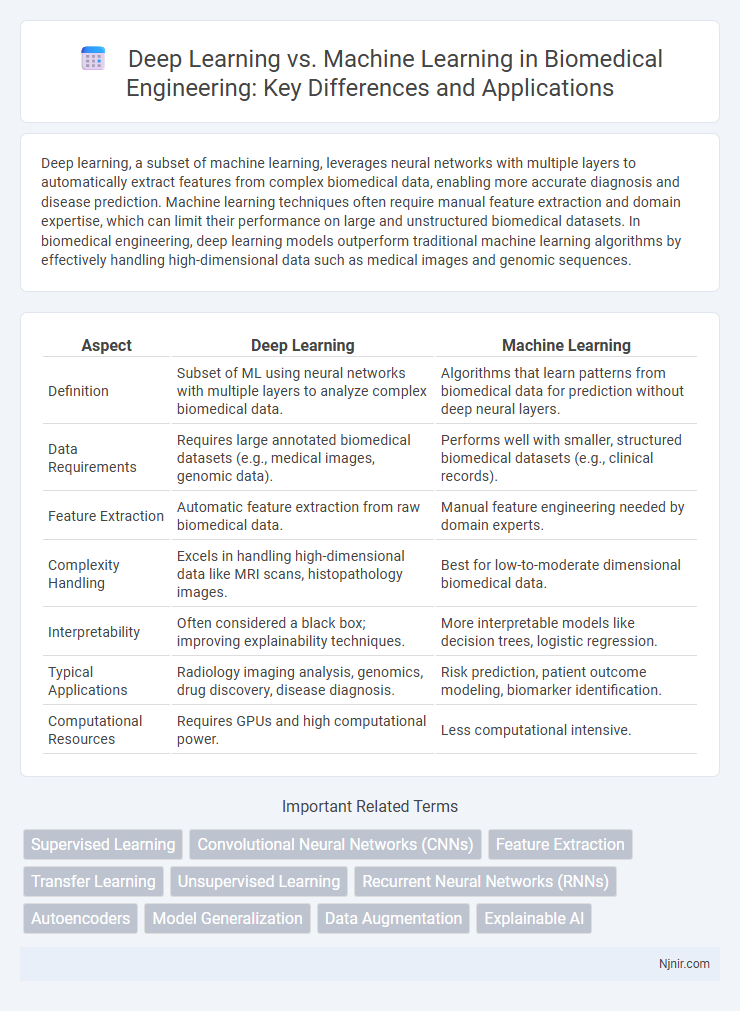

| Aspect | Deep Learning | Traditional Machine Learning |
|---|---|---|
| Definition | Subset of ML that uses neural networks with multiple layers to analyze complex biomedical data. | Algorithms that learn patterns from biomedical data for prediction without deep neural layers. |
| Data Requirements | Requires large annotated biomedical datasets (e.g., medical images, genomic data). | Performs well with smaller, structured biomedical datasets (e.g., clinical records). |
| Feature Extraction | Automatic feature extraction from raw biomedical data. | Manual feature engineering by domain experts. |
| Complexity Handling | Excels with high-dimensional data such as MRI scans and histopathology images. | Best suited to low- to moderate-dimensional biomedical data. |
| Interpretability | Often considered a black box, though explainability techniques are improving. | Often more interpretable (e.g., decision trees, logistic regression). |
| Typical Applications | Radiology image analysis, genomics, drug discovery, disease diagnosis. | Risk prediction, patient outcome modeling, biomarker identification. |
| Computational Resources | Typically requires GPUs and high computational power. | Less computationally intensive. |

Introduction to Artificial Intelligence in Biomedical Engineering

Deep learning, a subset of machine learning, uses multilayer neural networks to analyze complex biomedical data, supporting more accurate disease diagnosis and predictive modeling. Machine learning more broadly also includes classical algorithms such as decision trees and support vector machines, which are effective on structured biomedical datasets but can struggle with high-dimensional data. Combining both approaches strengthens artificial intelligence applications in biomedical engineering, including image analysis, genomics, and personalized medicine.

Defining Machine Learning and Deep Learning

Machine learning is a subset of artificial intelligence that enables systems to learn patterns from data and improve their performance without being explicitly programmed for each task, using algorithms such as decision trees, support vector machines, and linear regression. Deep learning, a specialized branch of machine learning, employs artificial neural networks with multiple layers (deep neural networks) to automatically extract high-level features from large volumes of unstructured data such as images, text, and speech. The core difference lies in deep learning's ability to learn hierarchical representations directly from raw data, which makes it particularly effective for tasks like image recognition, natural language processing, and autonomous driving.

Core Differences Between Machine Learning and Deep Learning

Traditional machine learning typically learns from structured data and often depends on features engineered manually by domain experts. Deep learning, a subset of machine learning, uses artificial neural networks with multiple layers to discover complex patterns and representations automatically from unstructured data such as images and text. The core differences are the architecture (deep, many-layered networks versus comparatively simple models) and the scale of data and computational power needed for training.

Data Requirements in Biomedical Applications

Deep learning in biomedical applications demands vast amounts of annotated data to accurately model complex patterns, while machine learning techniques often perform adequately with smaller, well-curated datasets. High-dimensional biomedical data, such as genomic sequences, medical images, and electronic health records, provide rich inputs for deep learning models but require substantial preprocessing and normalization. Limited data availability and quality remain a significant challenge for both approaches, prompting the use of data augmentation, transfer learning, and synthetic data generation to enhance model robustness.

Model Interpretability and Explainability in Healthcare

Deep learning models in healthcare often provide higher accuracy in complex tasks like medical image analysis but suffer from lower interpretability compared to traditional machine learning algorithms such as decision trees or logistic regression. Model interpretability is crucial for clinical decision-making, as healthcare professionals require transparent explanations to trust and validate AI-driven diagnoses or treatment recommendations. Efforts to enhance explainability in deep learning include techniques like attention mechanisms, layer-wise relevance propagation, and SHAP values, which help bridge the gap between performance and understanding in medical AI applications.
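SHAP builds on Shapley values from cooperative game theory. As a minimal sketch of the underlying idea (not the `shap` library's API), the function below computes exact Shapley values by enumerating every feature subset, assuming that a feature absent from a subset is replaced by a baseline value. `toy_risk_model` is a hypothetical scoring function with one interaction term; exact enumeration grows exponentially with the number of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial


def toy_risk_model(x):
    """Hypothetical risk score: two linear terms plus an x0*x2 interaction."""
    return 0.5 * x[0] + 0.3 * x[1] + 0.2 * x[0] * x[2]


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


patient = [1.0, 2.0, 3.0]   # hypothetical feature values
baseline = [0.0, 0.0, 0.0]  # assumed reference input
phi = shapley_values(toy_risk_model, patient, baseline)
print([round(v, 3) for v in phi])  # [0.8, 0.6, 0.3]
```

The attributions sum to `toy_risk_model(patient) - toy_risk_model(baseline)` = 1.7, and the interaction's contribution (0.6) is split equally between features 0 and 2.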

Performance and Accuracy in Biomedical Tasks

Deep learning techniques often outperform traditional machine learning algorithms in biomedical tasks because they can automatically learn intricate features from large-scale data such as medical images and genomic sequences. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have achieved high accuracy in disease diagnosis, drug discovery, and patient outcome prediction by capturing complex patterns that shallow models may miss. Despite their higher computational costs, deep learning models can deliver better sensitivity, specificity, and area under the ROC curve (AUC) when sufficient training data is available, which makes them attractive for precision medicine applications.
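The metrics named above can be computed directly from labels and model scores. The sketch below assumes binary labels (1 = disease present), hypothetical scores, and a 0.5 decision threshold; AUC is computed as the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, with ties counted as half.

```python
def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP/(TP+FN); specificity = TN/(TN+FP). Labels are 0/1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(y_true, scores):
    """AUC as P(random positive outscores random negative); ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]                    # hypothetical model outputs
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold
print(sensitivity_specificity(y_true, y_pred))   # (0.5, 0.5)
print(roc_auc(y_true, scores))                   # 0.75
```

Sensitivity and specificity change with the chosen threshold, whereas AUC summarizes ranking quality across all thresholds. The pairwise loop is quadratic and meant only for illustration.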

Computational Resources and Scalability

Deep learning requires significantly more computational resources than traditional machine learning because it trains large neural networks on extensive datasets, often using GPUs or TPUs for efficient processing. Traditional machine learning algorithms generally need less processing power and memory, making them practical for smaller datasets and less complex tasks. Deep learning scales through parallel computing and cloud infrastructure, which lets models handle vast amounts of data, whereas the scalability of traditional machine learning is typically constrained by algorithmic complexity and resource availability.
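Parameter count is one rough proxy for the memory and compute a model needs. The sketch below counts weights and biases of fully connected layers; the layer sizes are hypothetical, chosen only to contrast a logistic regression on 20 clinical features with a small two-hidden-layer network.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def mlp_params(layer_sizes):
    """Total parameters of a fully connected network, e.g. [20, 64, 64, 1]."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


print(mlp_params([20, 1]))          # 21 (logistic regression on 20 features)
print(mlp_params([20, 64, 64, 1]))  # 5569 (small two-hidden-layer network)
```

Even this small network has over 250 times as many parameters as the linear model, and deep image models are far larger still; actual training cost also depends on dataset size, architecture, and hardware.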

Real-World Case Studies: Machine Learning vs Deep Learning

Real-world case studies show that machine learning excels at structured-data tasks such as fraud detection in banking, where algorithms efficiently analyze transaction patterns. Deep learning performs better on unstructured data, as in image recognition for autonomous vehicles and natural language processing in virtual assistants, where neural networks capture complex patterns. In practice, traditional machine learning models train faster with less computational power, while deep learning needs extensive data and GPU resources to reach higher accuracy on complex tasks.

Challenges and Limitations in Biomedical Engineering

Deep learning in biomedical engineering faces challenges such as the need for large annotated datasets, high computational costs, and limited model interpretability, which hinders clinical trust and adoption. Traditional machine learning depends on labor-intensive feature engineering and can struggle to generalize across diverse patient populations and to handle noisy or imbalanced biomedical data. Both approaches must contend with data privacy concerns, regulatory compliance, and the complexity of integrating into existing healthcare systems.

Future Trends and Evolving Perspectives

Deep learning is expected to drive future advancements in AI through increased model complexity and enhanced neural network architectures, enabling more precise and adaptive systems. Machine learning frameworks are evolving toward integrating automated feature engineering and hybrid models combining symbolic reasoning with deep learning techniques. Emerging trends emphasize scalable, energy-efficient algorithms and ethical AI deployment to address growing demands across industries.

Supervised Learning

Supervised learning in deep learning utilizes multilayer neural networks to automatically extract features from large labeled datasets, whereas traditional machine learning relies on manual feature engineering and simpler models for pattern recognition.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs), a specialized deep learning architecture, excel in image recognition and processing tasks by automatically learning hierarchical features, unlike traditional machine learning methods that rely on manual feature extraction.
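The core operation of a CNN layer can be sketched in a few lines. The example below is a plain-Python 2-D "valid" cross-correlation (what deep learning libraries call convolution) applied to a toy image with a vertical edge. The kernel is hand-set here as an assumption for illustration; in a CNN, the kernel values are learned during training.

```python
def conv2d_valid(image, kernel):
    """2-D 'valid' cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += image[i + a][j + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out


# Toy 4x4 "image": dark left half, bright right half (a vertical edge).
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-set edge detector; in a CNN these weights are learned during training.
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d_valid(image, kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The response peaks only where the window straddles the edge; stacking such layers lets a network build edges into textures and textures into larger structures.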

Feature Extraction

Deep learning automates feature extraction through hierarchical neural networks, while traditional machine learning relies on manual feature engineering for effective data representation.
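To make the contrast concrete, manual feature engineering means an expert decides in advance which summaries of a raw signal matter, and a classical model is trained on those summaries. The feature set below (mean, standard deviation, range, count of strict local maxima) is a hypothetical example for a 1-D physiological signal, not a recommended clinical feature set.

```python
import statistics


def handcrafted_features(signal):
    """Hypothetical hand-designed summary features for a 1-D signal."""
    peaks = sum(
        1
        for i in range(1, len(signal) - 1)
        if signal[i] > signal[i - 1] and signal[i] > signal[i + 1]
    )
    return {
        "mean": statistics.fmean(signal),
        "std": statistics.pstdev(signal),
        "range": max(signal) - min(signal),
        "peak_count": peaks,  # strict local maxima
    }


features = handcrafted_features([0.0, 1.0, 0.0, 1.0, 0.0])
print(features["peak_count"], features["range"])  # 2 1.0
```

A deep network would instead take the raw samples as input and learn its own intermediate representation.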

Transfer Learning

Transfer learning speeds up deep learning model training and reduces data requirements by reusing models pretrained on related tasks, which often improves accuracy when the target dataset is small.
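A common transfer learning pattern is to freeze a pretrained feature extractor and train only a new output layer on the small target dataset. In this minimal sketch, `PRETRAINED_W` is a hypothetical placeholder standing in for weights learned on a source task (not weights from any real model), and the new head is a logistic regression trained by stochastic gradient descent. The dataset, learning rate, and epoch count are assumptions.

```python
import math

# Hypothetical weights standing in for layers pretrained on a related source task.
# They are frozen: training below never modifies them.
PRETRAINED_W = [[1.0, 0.0],
                [0.0, 1.0],
                [1.0, 1.0]]


def frozen_features(x):
    """Pretrained feature extractor, ReLU(W x), reused as-is."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def train_head(inputs, labels, lr=0.5, epochs=500):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [frozen_features(x) for x in inputs]  # computed once; extractor is frozen
    w = [0.0] * len(PRETRAINED_W)
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    f = frozen_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0


# Small hypothetical target dataset (four labeled examples).
inputs = [(0.1, 0.2), (0.2, 0.1), (0.9, 0.8), (0.8, 0.9)]
labels = [0, 0, 1, 1]
w, b = train_head(inputs, labels)
print([predict(w, b, x) for x in inputs])  # [0, 0, 1, 1]
```

Because only the head's few parameters are fitted, very little labeled data is needed; a common refinement is to later unfreeze some pretrained layers and fine-tune them with a small learning rate.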

Unsupervised Learning

Unsupervised learning in deep learning uses neural networks to extract complex patterns from unlabeled data automatically, and can capture structure that traditional unsupervised methods, such as clustering and dimensionality reduction, may miss.
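For reference, the traditional clustering baseline mentioned above can be as simple as k-means (Lloyd's algorithm). This sketch clusters hypothetical 2-D measurements; the initial centroids are passed in explicitly to keep the result deterministic, whereas real use would typically rely on k-means++ initialization or several random restarts.

```python
def kmeans(points, init_centroids, iters=10):
    """Plain k-means (Lloyd's algorithm) on 2-D points with given initial centroids."""
    centroids = [tuple(c) for c in init_centroids]
    labels = []
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [
            min(range(len(centroids)),
                key=lambda k: (p[0] - centroids[k][0]) ** 2 + (p[1] - centroids[k][1]) ** 2)
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for k, c in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append((sum(p[0] for p in members) / len(members),
                                      sum(p[1] for p in members) / len(members)))
            else:
                new_centroids.append(c)  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = new_centroids
    return labels, centroids


points = [(0, 0), (0, 1), (10, 10), (10, 11)]  # hypothetical unlabeled data
labels, centroids = kmeans(points, init_centroids=[(0, 0), (10, 10)])
print(labels, centroids)  # [0, 0, 1, 1] [(0.0, 0.5), (10.0, 10.5)]
```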

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs), a key deep learning architecture, excel at processing sequential data by maintaining a hidden state that carries information from previous inputs, unlike many traditional machine learning models that treat each input as an independent feature vector without temporal context.
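The "memory" of an RNN is its hidden state, updated at each step as h_t = tanh(W_x x_t + W_h h_{t-1} + b). The sketch below implements this vanilla RNN forward pass in plain Python; the weights are hypothetical fixed values rather than trained ones. Feeding the same inputs in a different order yields a different final state, which a model that treats inputs as an unordered feature vector cannot distinguish.

```python
import math


def rnn_forward(sequence, W_x, W_h, b):
    """Vanilla RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), starting from h_0 = 0."""
    hidden_size = len(b)
    h = [0.0] * hidden_size
    states = []
    for x_t in sequence:
        # The comprehension reads the previous h before it is replaced.
        h = [
            math.tanh(
                sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
                + sum(W_h[i][k] * h[k] for k in range(hidden_size))
                + b[i]
            )
            for i in range(hidden_size)
        ]
        states.append(h)
    return states


# Hypothetical weights: 1-D input, 2-D hidden state.
W_x = [[1.0], [0.5]]
W_h = [[0.5, 0.0], [0.0, 0.5]]
b = [0.0, 0.0]
forward = rnn_forward([[1.0], [0.0]], W_x, W_h, b)[-1]
backward = rnn_forward([[0.0], [1.0]], W_x, W_h, b)[-1]
print(forward, backward)  # same inputs, different order, different final state
```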

Autoencoders

Autoencoders, a deep learning technique, learn features without supervision by encoding input data into compressed representations and reconstructing the input from them, and they can outperform traditional machine learning methods in tasks such as anomaly detection and data reconstruction.
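The anomaly-detection idea can be shown with the simplest possible autoencoder: a linear 2 → 1 → 2 network (no nonlinearities, so not a deep model) trained by gradient descent to reconstruct "normal" data. Inputs that do not follow the learned pattern reconstruct poorly and receive a high error score. Deep autoencoders stack nonlinear layers, but the scoring logic is the same. The data, initial weights, learning rate, and epoch count are assumptions for illustration.

```python
def train_linear_autoencoder(data, lr=0.01, epochs=2000):
    """Linear autoencoder 2 -> 1 -> 2 trained by full-batch gradient descent on MSE."""
    enc = [0.5, 0.5]  # encoder weights (assumed small initial values)
    dec = [0.5, 0.5]  # decoder weights
    n = len(data)
    for _ in range(epochs):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            z = enc[0] * x[0] + enc[1] * x[1]          # 1-D code (bottleneck)
            r = [dec[j] * z - x[j] for j in range(2)]  # reconstruction minus input
            for j in range(2):
                g_dec[j] += 2 * r[j] * z / n
            back = sum(2 * r[j] * dec[j] for j in range(2))  # dLoss/dz
            for k in range(2):
                g_enc[k] += back * x[k] / n
        for j in range(2):
            enc[j] -= lr * g_enc[j]
            dec[j] -= lr * g_dec[j]
    return enc, dec


def reconstruction_error(enc, dec, x):
    """Squared reconstruction error, used as the anomaly score."""
    z = enc[0] * x[0] + enc[1] * x[1]
    return sum((dec[j] * z - x[j]) ** 2 for j in range(2))


# Hypothetical "normal" data lying along the direction (1, 1).
normal = [(t, t) for t in (-2.0, -1.0, 0.0, 1.0, 2.0)]
enc, dec = train_linear_autoencoder(normal)
print(reconstruction_error(enc, dec, (1.5, 1.5)))   # close to 0: fits the learned pattern
print(reconstruction_error(enc, dec, (1.0, -1.0)))  # about 2: flagged as anomalous
```

In practice, a threshold on the reconstruction error (for example, chosen on held-out normal data) decides which inputs are flagged.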

Model Generalization

Deep learning models often achieve superior model generalization on large-scale and complex datasets compared to traditional machine learning algorithms due to their ability to automatically extract hierarchical features.
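Generalization is estimated on data the model did not see during training, and k-fold cross-validation is a standard way to do this with limited data. The helper below is a hypothetical, unshuffled index splitter; it is shown only to illustrate how each sample serves as validation data exactly once.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation (no shuffling)."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train_idx, val_idx
        start += size


for train_idx, val_idx in k_fold_indices(10, 5):
    print(len(train_idx), val_idx)  # first line: 8 [0, 1]
```

Averaging validation scores across folds gives a less noisy generalization estimate than a single split. With patient data, assigning all records from one patient to the same fold helps avoid optimistic estimates caused by leakage.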

Data Augmentation

Data augmentation significantly enhances deep learning performance by artificially expanding training datasets, whereas machine learning often relies on traditional feature engineering with limited augmentation techniques.
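A minimal sketch of image augmentation on a 2-D grayscale patch stored as a list of rows: left-right and top-bottom flips plus additive Gaussian noise, with a seeded generator for reproducibility. Which transformations preserve the label is a domain decision (for example, a left-right flip may be inappropriate when anatomical laterality matters), and the noise level `sigma` is an assumption.

```python
import random


def hflip(image):
    """Mirror a 2-D image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def vflip(image):
    """Mirror a 2-D image top to bottom."""
    return [list(row) for row in reversed(image)]


def add_noise(image, rng, sigma=0.05):
    """Add Gaussian pixel noise with standard deviation sigma."""
    return [[px + rng.gauss(0.0, sigma) for px in row] for row in image]


def augment(image, rng):
    """Return the original image plus three assumed label-preserving variants."""
    return [image, hflip(image), vflip(image), add_noise(image, rng)]


rng = random.Random(0)  # seeded for reproducibility
scan = [[0.1, 0.2, 0.3],
        [0.4, 0.5, 0.6]]  # hypothetical 2x3 grayscale patch
batch = augment(scan, rng)
print(len(batch))  # 4
print(batch[1])    # [[0.3, 0.2, 0.1], [0.6, 0.5, 0.4]]
```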

Explainable AI

Explainable AI improves the transparency of deep learning and machine learning models by providing interpretable insights into how complex algorithms reach their decisions.

Deep Learning vs Machine Learning Infographic

njnir.com
