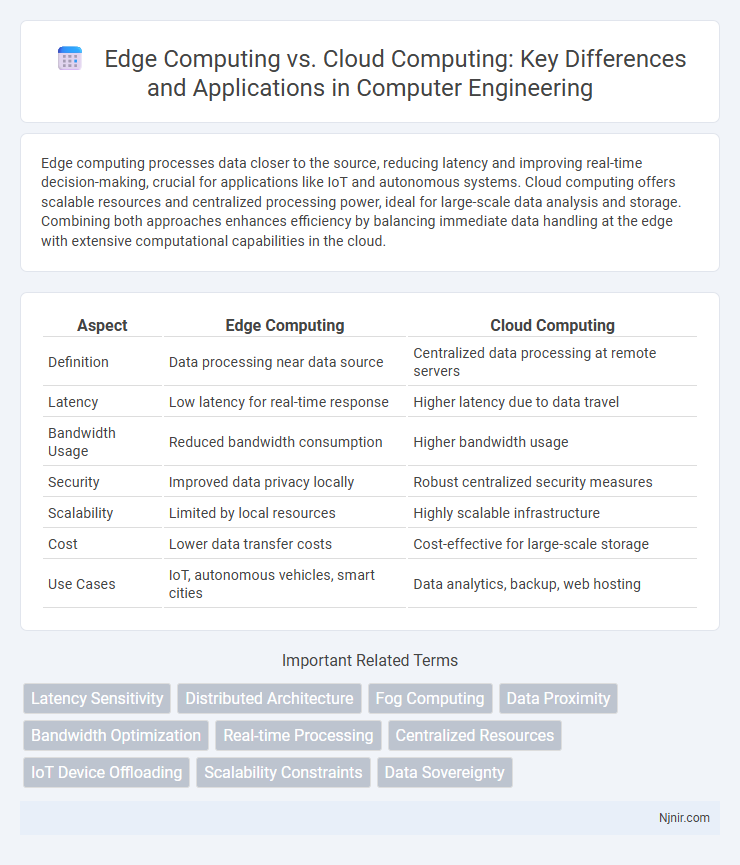

Edge computing processes data close to where it is generated. This reduces latency and supports real-time decision-making in applications such as IoT and autonomous systems. Cloud computing offers scalable resources and centralized processing power, which makes it well suited to large-scale data analysis and storage. Combining the two improves efficiency: time-sensitive data is handled at the edge, while heavy computation runs in the cloud.

Table of Comparison

| Aspect | Edge Computing | Cloud Computing |
|---|---|---|
| Definition | Data processing near data source | Centralized data processing at remote servers |
| Latency | Low latency for real-time response | Higher latency due to data travel |
| Bandwidth Usage | Reduced bandwidth consumption | Higher bandwidth usage |
| Security | Improved data privacy locally | Robust centralized security measures |
| Scalability | Limited by local resources | Highly scalable infrastructure |
| Cost | Lower data transfer costs | Cost-effective for large-scale storage |
| Use Cases | IoT, autonomous vehicles, smart cities | Data analytics, backup, web hosting |

Introduction to Edge Computing and Cloud Computing

Edge computing processes data near the source of generation, reducing latency and bandwidth use compared to centralized cloud computing, which relies on data centers for storage and computation. Cloud computing offers scalable resources and centralized management, ideal for heavy data processing and global accessibility. Edge computing enhances real-time data handling for applications like IoT and autonomous vehicles by bringing computation closer to devices.

Key Architectural Differences

Edge computing processes data locally on devices or edge servers, minimizing latency and reducing bandwidth usage by handling time-sensitive tasks close to data sources. Cloud computing relies on centralized data centers with vast computational resources, providing scalable storage and processing but often incurring higher latency due to data transmission to remote servers. Key architectural differences include distributed nodes in edge computing versus centralized infrastructure in cloud computing, which influences performance, scalability, and real-time decision-making capabilities.

Data Processing and Latency Comparison

Edge computing processes data locally on devices or nearby edge servers, significantly reducing latency by minimizing data travel distance compared to cloud computing, which relies on centralized data centers often located far from end users. This proximity in edge computing enables real-time analytics and faster response times essential for applications like autonomous vehicles and IoT sensors. Cloud computing offers massive computational power and scalability but typically incurs higher latency due to data transmission delays, making it less ideal for latency-sensitive operations.
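A back-of-the-envelope sketch makes the distance argument concrete. It counts only propagation delay, assuming signals travel through optical fiber at roughly 200,000 km/s (about two-thirds the speed of light in a vacuum). The distances and the `processing_ms` value are illustrative assumptions, and real latency also includes queuing, routing, and serialization delays.

```python
# Approximate signal speed in optical fiber: ~200,000 km/s, i.e. ~200 km per ms.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float, processing_ms: float = 0.0) -> float:
    """Estimate request/response time from propagation delay plus processing.

    Ignores queuing, routing hops, and serialization, so real figures are higher.
    """
    propagation_ms = 2 * distance_km / FIBER_KM_PER_MS  # there and back
    return propagation_ms + processing_ms


# Hypothetical distances: an edge server on the same campus vs a remote region.
edge_ms = round_trip_ms(distance_km=10, processing_ms=5)
cloud_ms = round_trip_ms(distance_km=2000, processing_ms=5)
print(f"edge:  {edge_ms:.1f} ms")   # edge:  5.1 ms
print(f"cloud: {cloud_ms:.1f} ms")  # cloud: 25.0 ms
```

Under this assumption, propagation alone adds about 1 ms of round-trip time per 100 km of fiber, which is why distance dominates when latency budgets are tight.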

Scalability and Flexibility Analysis

Edge computing processes data locally, enabling low-latency, real-time applications, which is essential for IoT and autonomous systems that require immediate responses. It scales by adding more edge nodes, but each node is constrained by its local hardware. Cloud computing provides virtually unlimited scalability and flexible resource allocation through centralized data centers, supporting large-scale data storage and complex computation. Enterprises often adopt hybrid architectures to combine the edge's low latency with the cloud's elastic capacity.
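One simple way to combine the two is to serve requests at the edge until local capacity runs out and overflow the rest to the cloud. The sketch below assumes a fixed per-node capacity measured in concurrent tasks; `HybridDispatcher` and its parameters are hypothetical names for illustration, not a specific product's API.

```python
from dataclasses import dataclass


@dataclass
class HybridDispatcher:
    """Route tasks to a fixed-capacity edge node, overflowing to the cloud.

    Hypothetical model: the edge node can run `edge_capacity` tasks at once,
    while the cloud is treated as effectively unlimited.
    """
    edge_capacity: int
    edge_active: int = 0

    def dispatch(self) -> str:
        if self.edge_active < self.edge_capacity:
            self.edge_active += 1
            return "edge"
        return "cloud"  # elastic cloud capacity absorbs the overflow

    def complete_edge_task(self) -> None:
        # Free one edge slot when a locally running task finishes.
        if self.edge_active > 0:
            self.edge_active -= 1


dispatcher = HybridDispatcher(edge_capacity=2)
print([dispatcher.dispatch() for _ in range(4)])  # ['edge', 'edge', 'cloud', 'cloud']
dispatcher.complete_edge_task()
print(dispatcher.dispatch())  # edge
```

A production scheduler would also weigh each task's latency budget, but capacity-based overflow captures the basic trade-off between limited local resources and cloud elasticity.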

Security and Privacy Considerations

Edge computing can improve privacy by processing sensitive data locally, so less of it is transmitted over networks or stored on third-party infrastructure; however, its many distributed devices also widen the attack surface. Cloud computing centralizes data storage and processing, which concentrates the risk of data breaches and unauthorized access and raises compliance obligations under regulations such as GDPR and HIPAA. Both models need strong encryption, access control, and security protocols suited to their architecture to protect data integrity and user privacy.
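As an illustration of keeping sensitive data local, an edge node can replace direct identifiers with keyed pseudonyms before forwarding records to the cloud. This sketch uses HMAC-SHA256 from Python's standard library; the `patient_id` field and the locally held key are assumptions. Pseudonymization is not encryption: the forwarded fields remain readable, and payloads still need transport encryption such as TLS.

```python
import hashlib
import hmac

# Assumption: this key is provisioned to the edge node and never sent to the cloud.
EDGE_SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymize(record: dict, sensitive_fields: tuple = ("patient_id",)) -> dict:
    """Return a copy of `record` with sensitive fields replaced by HMAC-SHA256 tags.

    The same input always maps to the same tag, so the cloud can still group
    records by subject without learning the original identifier.
    """
    safe = dict(record)
    for field in sensitive_fields:
        if field in safe:
            value = str(safe[field]).encode("utf-8")
            safe[field] = hmac.new(EDGE_SECRET_KEY, value, hashlib.sha256).hexdigest()
    return safe


reading = {"patient_id": "MRN-12345", "heart_rate": 72}
outgoing = pseudonymize(reading)
print(outgoing["heart_rate"])       # 72
print(len(outgoing["patient_id"]))  # 64
```

Because only the edge holds the key, a party that obtains the cloud copy cannot recompute the mapping from identifiers to tags.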

Cost Implications and Resource Management

Edge computing lowers bandwidth and data-transfer costs by processing data locally, which reduces how much must be sent to, and stored in, the cloud. Cloud computing centralizes resources and offers scalable, flexible services, but costs can grow with storage volume, continuous connectivity, and heavy resource use. Resource management differs accordingly: edge deployments depend on optimizing distributed hardware, while cloud platforms use dynamic resource provisioning and virtualization to balance performance and cost.
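The transfer-cost side of this trade-off is simple arithmetic. The sketch below compares shipping raw data to the cloud with shipping only edge-reduced data. The per-gigabyte price and the 95% reduction ratio are hypothetical placeholders, not any provider's actual pricing, and the calculation ignores the cost of buying and operating edge hardware.

```python
def monthly_transfer_cost(gb_per_day: float, price_per_gb: float, days: int = 30) -> float:
    """Data-transfer cost for one month at a flat, hypothetical per-GB price."""
    return gb_per_day * days * price_per_gb


RAW_GB_PER_DAY = 500.0   # hypothetical raw sensor output per site
EDGE_REDUCTION = 0.95    # hypothetical: edge filtering drops 95% of the volume
PRICE_PER_GB = 0.05      # hypothetical transfer price in USD

raw_cost = monthly_transfer_cost(RAW_GB_PER_DAY, PRICE_PER_GB)
edge_cost = monthly_transfer_cost(RAW_GB_PER_DAY * (1 - EDGE_REDUCTION), PRICE_PER_GB)
print(f"raw to cloud: ${raw_cost:,.2f}/month")   # raw to cloud: $750.00/month
print(f"after edge:   ${edge_cost:,.2f}/month")  # after edge:   $37.50/month
```

Whether an edge deployment saves money overall depends on whether savings like these outweigh the cost of deploying and maintaining distributed hardware.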

Network Bandwidth and Connectivity Requirements

Edge computing significantly reduces network bandwidth usage by processing data closer to the source, minimizing the need to transmit large volumes of information to centralized cloud servers. Cloud computing relies heavily on stable, high-bandwidth connectivity to transfer data between devices and distant data centers, which can introduce latency and increase network congestion. Edge computing enhances real-time responsiveness and reliability in environments with limited or intermittent connectivity, alleviating the strain on cloud network infrastructure.
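A common pattern for intermittent connectivity is store-and-forward: the edge node buffers results while the uplink is down and flushes them when it returns. In this minimal sketch the `send` and `is_link_up` callables stand in for a real transport and link monitor (assumptions). The buffer is bounded, so the oldest entries are dropped if an outage outlasts its capacity.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Buffer outbound messages at the edge while the uplink is unavailable.

    `send` should raise ConnectionError on failure; `is_link_up` reports the
    current link state. Both stand in for a real transport (assumption).
    """

    def __init__(self, send: Callable[[str], None],
                 is_link_up: Callable[[], bool], max_buffered: int = 1000):
        self.send = send
        self.is_link_up = is_link_up
        # A deque with maxlen discards the oldest item when a new one arrives while full.
        self.buffer: Deque[str] = deque(maxlen=max_buffered)

    def flush(self) -> None:
        """Send buffered messages in arrival order; stop at the first failure."""
        while self.buffer:
            try:
                self.send(self.buffer[0])
            except ConnectionError:
                return  # keep the remaining backlog for the next attempt
            self.buffer.popleft()

    def submit(self, message: str) -> None:
        if self.is_link_up():
            self.flush()  # drain the backlog first to preserve ordering
            if not self.buffer:
                try:
                    self.send(message)
                    return
                except ConnectionError:
                    pass  # fall through and buffer the message
        self.buffer.append(message)


# Simulated outage followed by recovery.
link = {"up": False}
delivered: List[str] = []
uplink = StoreAndForward(delivered.append, lambda: link["up"], max_buffered=3)
for reading in ["r1", "r2", "r3", "r4"]:
    uplink.submit(reading)  # buffered; "r1" is discarded when "r4" arrives
link["up"] = True
uplink.submit("r5")         # backlog flushed first, then "r5" sent directly
print(delivered)  # ['r2', 'r3', 'r4', 'r5']
```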

Use Cases and Industry Applications

Edge computing enables real-time data processing for applications like autonomous vehicles, industrial automation, and smart healthcare devices by reducing latency and bandwidth usage. Cloud computing excels in scalable data storage, big data analytics, and enterprise applications such as customer relationship management (CRM) and large-scale AI model training. Industries such as manufacturing, automotive, and healthcare increasingly adopt edge solutions for critical, low-latency tasks, while finance, e-commerce, and entertainment benefit from cloud-based resources for extensive data processing and collaboration.

Challenges and Limitations of Each Approach

Edge computing faces challenges such as limited processing power, storage capacity, and security vulnerabilities due to its distributed nature, making it difficult to manage and scale. Cloud computing encounters limitations in latency, bandwidth dependency, and potential data privacy concerns, especially for real-time applications requiring instant response. Both approaches require balancing trade-offs between data processing speed, resource availability, and security protocols to optimize performance and reliability.

Future Trends in Edge and Cloud Computing

Future trends in edge computing emphasize the integration of AI-driven analytics at the network edge, reducing latency for real-time applications such as autonomous vehicles and smart cities. Cloud computing continues to evolve with enhanced scalability and hybrid cloud models that combine public and private infrastructures to optimize workload distribution. The convergence of edge and cloud computing is expected to facilitate seamless data processing, higher security through decentralized architectures, and improved support for Internet of Things (IoT) ecosystems.

Latency Sensitivity

Edge computing reduces latency by processing data closer to the source, making it ideal for latency-sensitive applications compared to cloud computing, which relies on centralized data centers and longer transmission times.

Distributed Architecture

Edge computing is a distributed architecture: data is processed locally on edge devices, which reduces latency and bandwidth use compared with centralized cloud computing models.

Fog Computing

Fog computing extends cloud computing by processing data closer to IoT devices at the network edge, reducing latency and improving real-time analytics compared to traditional centralized cloud models.
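One way to reason about a fog layer between devices and the cloud is to pick, for each workload, the most capable tier whose round-trip latency still meets the workload's deadline. The tier names, latencies, and capacity ranks below are hypothetical values chosen for illustration.

```python
from typing import List, NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    round_trip_ms: float  # hypothetical typical latency from the device
    capacity_rank: int    # higher means more compute and storage


# Illustrative three-tier hierarchy: device -> fog node -> cloud data center.
TIERS: List[Tier] = [
    Tier("device", round_trip_ms=0.0, capacity_rank=1),
    Tier("fog", round_trip_ms=5.0, capacity_rank=2),
    Tier("cloud", round_trip_ms=60.0, capacity_rank=3),
]


def choose_tier(deadline_ms: float, tiers: List[Tier] = TIERS) -> Optional[Tier]:
    """Return the highest-capacity tier that can respond within the deadline."""
    feasible = [t for t in tiers if t.round_trip_ms <= deadline_ms]
    return max(feasible, key=lambda t: t.capacity_rank) if feasible else None


print(choose_tier(2).name)    # device
print(choose_tier(20).name)   # fog
print(choose_tier(500).name)  # cloud
```

The model ignores processing time and current load, which a real scheduler would add to each tier's latency before comparing it with the deadline.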

Data Proximity

Edge computing processes data near the source, reducing latency and bandwidth use, while cloud computing relies on centralized data centers often located far from the data origin.

Bandwidth Optimization

Edge computing reduces data transmission by processing information locally, significantly optimizing bandwidth usage compared to cloud computing, which relies on centralized data centers.
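A typical bandwidth optimization is to summarize raw readings at the edge and send only the summary upstream. The sketch below collapses a window of sensor samples into count, minimum, maximum, and mean, then compares JSON payload sizes. The one-minute window of 1 Hz readings and the chosen summary fields are assumptions for illustration.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize_window(samples: List[float]) -> Dict[str, float]:
    """Reduce a window of raw samples to a compact summary for the cloud."""
    return {
        "count": len(samples),
        "min": min(samples),
        "max": max(samples),
        "mean": round(mean(samples), 2),
    }


# Hypothetical: one minute of temperature readings sampled once per second.
raw = [20.0 + (i % 10) * 0.1 for i in range(60)]
summary = summarize_window(raw)

raw_bytes = len(json.dumps(raw).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))
print(summary)
print(f"raw: {raw_bytes} B, summary: {summary_bytes} B")
```

Summaries discard detail, so the right aggregation depends on what the cloud-side analysis actually needs.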

Real-time Processing

Edge computing enables real-time processing by handling data locally near its source, whereas cloud computing relies on centralized servers whose distance adds latency.
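Local processing lets the edge act on a reading immediately instead of waiting for a network round trip. In this hypothetical sketch, an edge controller closes a valve as soon as pressure crosses a threshold and only queues an event record for later upload; the threshold, the `close_valve` callback, and the event format are assumptions.

```python
from typing import Callable, Dict, List

PRESSURE_LIMIT_KPA = 850.0  # hypothetical safety threshold


class EdgeController:
    """React to sensor readings locally; defer reporting to the cloud."""

    def __init__(self, close_valve: Callable[[], None]):
        self.close_valve = close_valve
        self.pending_events: List[Dict[str, float]] = []  # uploaded later

    def on_reading(self, timestamp: float, pressure_kpa: float) -> bool:
        """Handle one reading; return True if a local action was taken."""
        if pressure_kpa > PRESSURE_LIMIT_KPA:
            self.close_valve()  # act immediately, no network round trip
            self.pending_events.append({"t": timestamp, "pressure_kpa": pressure_kpa})
            return True
        return False


actions: List[str] = []
controller = EdgeController(close_valve=lambda: actions.append("valve closed"))
for t, p in [(0.0, 700.0), (0.1, 820.0), (0.2, 900.0)]:
    controller.on_reading(t, p)
print(actions)                    # ['valve closed']
print(controller.pending_events)  # [{'t': 0.2, 'pressure_kpa': 900.0}]
```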

Centralized Resources

Edge computing minimizes latency by processing data locally near the source, while cloud computing relies on centralized data centers for resource-intensive tasks and large-scale storage.

IoT Device Offloading

IoT devices can offload computation to nearby edge servers, reducing latency and bandwidth use, whereas offloading to the cloud centralizes processing but may add latency and make devices dependent on network availability.
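A common first-order model for the offloading decision compares local execution time with the time to upload the input, cross the network, and execute remotely. The sketch assumes all quantities are known in advance; the cycle counts, clock rates, and bandwidths in the example are hypothetical, and the model ignores energy use and result-download time.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float      # CPU cycles the task needs
    input_bits: float  # data that must be uploaded if offloaded


def local_time_s(task: Task, device_hz: float) -> float:
    return task.cycles / device_hz


def offload_time_s(task: Task, uplink_bps: float, rtt_s: float, server_hz: float) -> float:
    # Upload time + network round trip + remote execution time.
    return task.input_bits / uplink_bps + rtt_s + task.cycles / server_hz


def should_offload(task: Task, device_hz: float, uplink_bps: float,
                   rtt_s: float, server_hz: float) -> bool:
    return offload_time_s(task, uplink_bps, rtt_s, server_hz) < local_time_s(task, device_hz)


task = Task(cycles=5e8, input_bits=8e6)  # 0.5 billion cycles, 1 MB of input
DEVICE_HZ = 1e9                          # 1 GHz device CPU (local time: 0.5 s)

# Nearby edge server: fast local link, short round trip (offload time: 0.135 s).
print(should_offload(task, DEVICE_HZ, uplink_bps=100e6, rtt_s=0.005, server_hz=10e9))  # True
# Distant cloud: slower uplink and longer round trip (offload time: 0.89 s).
print(should_offload(task, DEVICE_HZ, uplink_bps=10e6, rtt_s=0.08, server_hz=50e9))    # False
```

In this example the cloud server is five times faster than the edge server, yet the slower uplink and longer round trip make offloading to it worse than running locally.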

Scalability Constraints

Edge computing faces scalability constraints due to limited local resources, whereas cloud computing offers virtually unlimited scalability through centralized data centers.

Data Sovereignty

Edge computing supports data sovereignty by processing data locally on devices or nearby servers, reducing reliance on centralized cloud facilities that may be subject to the laws of foreign jurisdictions.
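Sovereignty requirements are often enforced as a routing policy: records tagged with an origin jurisdiction are stored only in locations that jurisdiction permits. The policy table, jurisdiction names, and region names below are hypothetical and do not describe any real regulation or provider.

```python
from typing import Dict, List

# Hypothetical policy: storage locations permitted for data from each origin,
# listed in order of preference. Not based on any real regulation.
ALLOWED_LOCATIONS: Dict[str, List[str]] = {
    "jurisdiction-a": ["cloud-region-a", "edge-local"],
    "jurisdiction-b": ["edge-local"],  # data must stay on site
    "jurisdiction-c": ["cloud-region-b", "cloud-region-a", "edge-local"],
}


def choose_storage(origin: str, preferred: str) -> str:
    """Return `preferred` if the origin allows it, else the first permitted location.

    Unknown origins default to on-site edge storage as the conservative choice.
    """
    allowed = ALLOWED_LOCATIONS.get(origin, ["edge-local"])
    return preferred if preferred in allowed else allowed[0]


print(choose_storage("jurisdiction-a", preferred="cloud-region-b"))  # cloud-region-a
print(choose_storage("jurisdiction-b", preferred="cloud-region-b"))  # edge-local
print(choose_storage("jurisdiction-c", preferred="cloud-region-b"))  # cloud-region-b
print(choose_storage("unknown", preferred="cloud-region-b"))         # edge-local
```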


njnir.com