Parallel computing divides a workload across multiple processors so that several tasks run at the same time, which can substantially shorten complex computations. Serial computing executes tasks one after another, which can be slow for large-scale problems. Effective parallelization requires careful attention to data dependencies and communication overhead, because both limit how much of the theoretical speedup is actually realized.

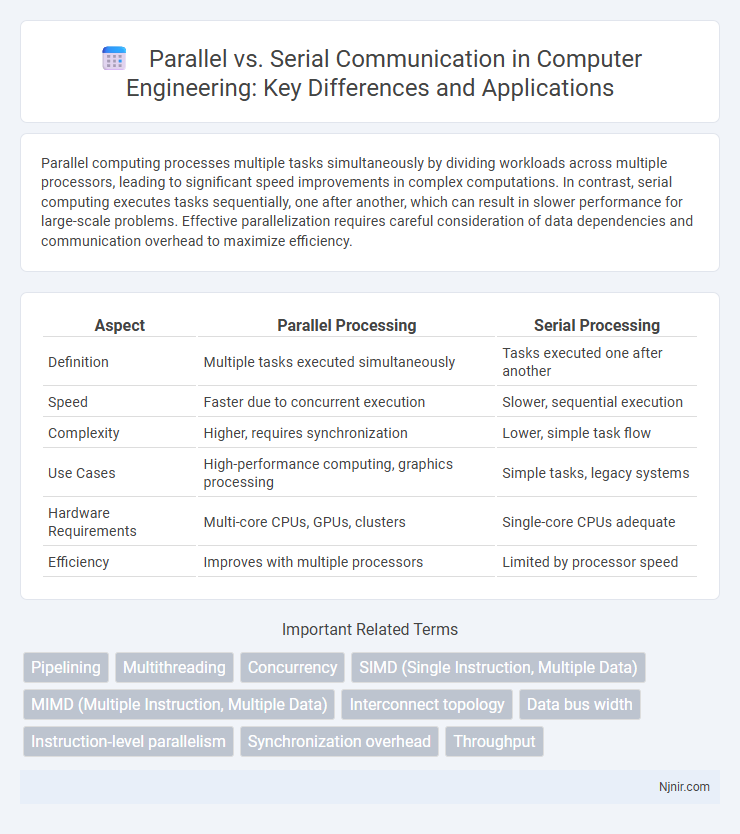

Table of Comparison

| Aspect | Parallel Processing | Serial Processing |
|---|---|---|
| Definition | Multiple tasks executed simultaneously | Tasks executed one after another |
| Speed | Faster due to concurrent execution | Slower, sequential execution |
| Complexity | Higher, requires synchronization | Lower, simple task flow |
| Use Cases | High-performance computing, graphics processing | Simple tasks, legacy systems |
| Hardware Requirements | Multi-core CPUs, GPUs, clusters | Single-core CPUs adequate |
| Efficiency | Improves with multiple processors | Limited by processor speed |

Introduction to Parallel and Serial Processing

Parallel processing involves executing multiple tasks or instructions simultaneously by dividing them across multiple processors or cores, enhancing computational speed and efficiency. Serial processing, in contrast, handles tasks sequentially, processing one instruction at a time, which can limit performance in complex or large-scale computing environments. Understanding the differences between parallel and serial processing is crucial for optimizing system design, especially in applications requiring high-performance computing and data-intensive operations.

Key Differences Between Parallel and Serial Architectures

Parallel architectures process multiple instructions or data items at the same time by dividing tasks across multiple processors or cores, while serial architectures handle one instruction at a time, so performance depends on how fast that single stream runs. The same distinction appears in data transmission. Parallel communication sends multiple bits at once over multiple channels, which raises throughput per clock cycle but requires more wiring and careful synchronization, because timing skew between lines limits clock rate and cable length. Serial communication transmits bits one at a time over a single channel, which simplifies the design and supports longer distances; this is why interfaces such as USB and most network links are serial. On the computing side, parallel systems excel in high-performance scenarios like scientific simulations.

Advantages of Parallel Computing

Parallel computing significantly accelerates data processing by dividing tasks into smaller sub-tasks executed simultaneously across multiple processors. This approach enhances performance and efficiency, especially in computationally intensive applications like scientific simulations, big data analytics, and machine learning. Parallel computing also offers improved scalability and resource utilization, enabling faster problem-solving and real-time data handling in complex systems.
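The divide, execute, and combine pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `chunk`, `sum_of_squares`, and `parallel_sum_of_squares` are hypothetical, and a thread pool is used to keep the example simple. In CPython, CPU-bound work usually needs `ProcessPoolExecutor` instead to run on several cores at once.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk(data, n_chunks):
    """Split data into n_chunks contiguous slices of nearly equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(values):
    """Sub-task: depends only on its own chunk, so chunks are independent."""
    return sum(v * v for v in values)


def parallel_sum_of_squares(data, workers=4):
    """Divide the workload, run sub-tasks on a pool, combine partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunk(data, workers)))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1000)), workers=4))
```

The sub-tasks share no state, which is what makes the split safe. Workloads with dependencies between chunks need extra coordination.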

Limitations of Serial Processing

Serial processing is limited by its inherently sequential nature, where tasks are executed one after another, leading to slower overall performance and inefficiency in handling large-scale or complex computations. This constraint results in increased latency and bottlenecks, especially in applications requiring real-time processing or multitasking. The inability to leverage concurrent execution hinders scalability and responsiveness compared to parallel processing systems.

Parallelism in Modern Microprocessors

Parallelism in modern microprocessors enables simultaneous execution of multiple instructions through techniques like superscalar architecture, multi-core processors, and simultaneous multithreading (SMT). This approach raises computational throughput and can improve energy efficiency by keeping hardware resources busier than strictly serial execution would. Pipelining and vector processing add further parallelism within each core, while cache hierarchies keep the execution units supplied with data, driving performance gains in applications ranging from data centers to mobile devices.

Application Areas: When to Use Parallel vs Serial

Parallel processing excels in data-intensive applications such as scientific simulations, big data analytics, and machine learning, where tasks can be divided into smaller, independent units that run simultaneously. Serial processing remains the better fit for work whose steps depend on one another, such as the ordered steps within a single transaction, simple control systems, and legacy software requiring deterministic execution. Choosing between parallel and serial depends on workload structure, data dependencies, and the need for speed versus simplicity.

Scalability and Performance Impacts

Parallel processing significantly enhances scalability and performance by distributing workloads across multiple processors or cores, reducing execution time and increasing throughput for complex, data-intensive tasks. Serial processing, constrained by sequential execution, limits scalability as performance improvements depend solely on faster individual processors rather than concurrent task handling. Systems leveraging parallelism achieve higher efficiency in large-scale environments such as high-performance computing, big data analytics, and real-time simulations.
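How far this scaling goes depends on how much of the workload can actually run in parallel. Amdahl's law, which the text above does not name but which is the standard model for this limit, gives the ideal speedup as 1 / ((1 - p) + p / n) for a parallelizable fraction p on n processors. A minimal sketch, with the hypothetical function name `amdahl_speedup`:

```python
def amdahl_speedup(parallel_fraction, processors):
    """Ideal speedup under Amdahl's law.

    parallel_fraction: share of the runtime that can be parallelized (0..1).
    processors: number of processors applied to the parallel part (>= 1).
    Ignores communication and synchronization costs, so real speedups are lower.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    for n in (2, 8, 64, 1024):
        print(n, round(amdahl_speedup(0.9, n), 2))
```

With 90% of the work parallelizable, the speedup stays below 10 no matter how many processors are added, because the remaining serial 10% dominates.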

Power Consumption Considerations

Parallel processing can reduce energy use when it finishes work sooner and lets processors enter low-power states earlier, but the saving is not automatic, because more active cores also draw more power. Parallel architectures benefit most when spreading the workload across multiple cores lets each core run at a lower frequency and voltage, since dynamic power rises steeply with both. Serial execution relies on single-core performance, which often means running one core at high frequency for the full duration of the task and can be less energy-efficient for large workloads.
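The link between frequency and power draw can be made concrete with the usual approximation for CMOS dynamic power, P ≈ a · C · V² · f. The sketch below compares one fast core against two slower cores at reduced voltage. All numbers are hypothetical and chosen only for illustration. It assumes the work splits perfectly in two, and it ignores static leakage.

```python
def dynamic_power(capacitance_f, voltage_v, frequency_hz, activity=1.0):
    """Approximate CMOS dynamic power in watts: activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


def energy_joules(power_per_core_w, seconds, cores=1):
    """Energy used by `cores` identical cores running for `seconds`."""
    return power_per_core_w * seconds * cores


# Hypothetical figures, not measurements of any real chip.
CAPACITANCE = 1e-9  # farads of switched capacitance per core
RUNTIME = 1.0       # seconds; assumed equal in both cases (ideal 2-way split)

one_fast_core = energy_joules(dynamic_power(CAPACITANCE, 1.0, 2e9), RUNTIME, cores=1)
two_slow_cores = energy_joules(dynamic_power(CAPACITANCE, 0.8, 1e9), RUNTIME, cores=2)

if __name__ == "__main__":
    print(f"one core at 2 GHz, 1.0 V:  {one_fast_core:.2f} J")
    print(f"two cores at 1 GHz, 0.8 V: {two_slow_cores:.2f} J")
```

Under these assumptions the two-core configuration uses about 36% less energy for the same runtime, entirely because of the lower voltage. If the voltage could not be lowered, the two configurations would use the same dynamic energy.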

Software and Programming Challenges

Parallel programming requires managing concurrent execution threads, which introduces complexity in synchronization, data sharing, and race condition prevention. Serial programming simplifies debugging and control flow but limits performance gains as tasks run sequentially without exploiting multi-core processors. Efficient parallel software demands robust tools and algorithms to handle thread communication, load balancing, and deadlock avoidance for maximizing hardware utilization.
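A shared counter is the classic example of the synchronization problem described above. In this minimal sketch the names `Counter` and `run_workers` are hypothetical. The lock makes each read-modify-write step atomic, so no increment is lost when several threads update the same value.

```python
import threading


class Counter:
    """A counter that is safe to increment from several threads."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Without the lock, two threads could read the same old value
        # and one of the two updates would be lost (a race condition).
        with self._lock:
            self.value += 1


def run_workers(n_threads=4, increments=10_000):
    """Start n_threads threads that each increment a shared counter."""
    counter = Counter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every worker before reading the result
    return counter.value


if __name__ == "__main__":
    print(run_workers())
```

The lock also serializes the critical section, so the more time threads spend inside it, the less parallel speedup remains.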

Future Trends in Parallel and Serial Computing

Future trends in parallel computing emphasize the rise of heterogeneous architectures combining CPUs, GPUs, and specialized accelerators to maximize performance and energy efficiency. Serial computing continues to evolve with improved single-core speeds and advanced instruction-level parallelism, targeting applications with sequential dependencies. Emerging technologies like quantum computing and neuromorphic processors will complement traditional parallel and serial frameworks, driving innovation in computing paradigms.

Pipelining

Pipelining improves processor throughput by overlapping the stages (such as fetch, decode, and execute) of successive instructions, so several instructions are in flight at once, whereas a non-pipelined processor finishes every stage of one instruction before starting the next.
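The benefit can be estimated with simple cycle counting. The sketch below assumes an idealized pipeline in which every stage takes one cycle and there are no stalls or hazards. The function names are hypothetical.

```python
def serial_cycles(stages, instructions):
    """Cycles when each instruction completes all stages before the next starts."""
    return stages * instructions


def pipelined_cycles(stages, instructions):
    """Cycles for an ideal pipeline: fill it once, then finish one instruction per cycle."""
    if instructions == 0:
        return 0
    return stages + instructions - 1


if __name__ == "__main__":
    k, n = 5, 100
    print(serial_cycles(k, n), pipelined_cycles(k, n))
```

For a 5-stage pipeline and 100 instructions this gives 500 cycles versus 104, and the speedup approaches the number of stages as the instruction count grows. Real pipelines fall short of this because of branches and data dependencies.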

Multithreading

Multithreading improves performance by running multiple threads within a single process, in parallel when multiple cores are available, whereas serial execution processes tasks one after another, which can lengthen completion times.

Concurrency

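Concurrency means that multiple tasks are in progress over the same period, whether they interleave on one processor or run on several. Parallel execution is the case where tasks run at the same instant on separate hardware, which maximizes throughput, while serial execution handles tasks one after another and permits no overlap.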
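Tasks can also overlap in time without ever running at the same instant, because a single thread can interleave them. The sketch below uses Python generators and a hypothetical round-robin scheduler (`task` and `round_robin` are illustrative names) to show two tasks making progress concurrently on one thread, with no parallel hardware involved.

```python
from collections import deque


def task(name, steps):
    """A task that yields control back to the scheduler after each step."""
    for i in range(steps):
        yield f"{name}{i}"


def round_robin(tasks):
    """Run one step of each task in turn until all tasks are finished."""
    queue = deque(tasks)
    trace = []
    while queue:
        current = queue.popleft()
        try:
            trace.append(next(current))
        except StopIteration:
            continue  # task finished; do not requeue it
        queue.append(current)
    return trace


if __name__ == "__main__":
    print(round_robin([task("A", 3), task("B", 2)]))
    # prints ['A0', 'B0', 'A1', 'B1', 'A2']
```

Serial execution of the same two tasks would finish all of A before starting B. True parallel execution would additionally need a second core.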

SIMD (Single Instruction, Multiple Data)

SIMD processes multiple data points with a single instruction in parallel, significantly enhancing computational efficiency compared to serial execution.
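The effect on instruction count can be modeled with a small sketch. This is a counting model only, with hypothetical names and an assumed lane width of 4. Python itself still runs the loop serially, and real SIMD needs hardware vector instructions.

```python
def scalar_add(a, b):
    """Serial model: one add instruction per pair of elements."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    result = [x + y for x, y in zip(a, b)]
    return result, len(result)


def simd_add(a, b, lanes=4):
    """SIMD model: one instruction adds up to `lanes` element pairs at once."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    result, instructions = [], 0
    for start in range(0, len(a), lanes):
        block_a = a[start:start + lanes]
        block_b = b[start:start + lanes]
        result.extend(x + y for x, y in zip(block_a, block_b))
        instructions += 1  # the whole block counts as a single instruction
    return result, instructions


if __name__ == "__main__":
    a, b = list(range(10)), [1] * 10
    print(scalar_add(a, b)[1], simd_add(a, b)[1])
```

For 10 elements the scalar model issues 10 add instructions and the 4-lane model issues 3, with the last instruction only partly filled.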

MIMD (Multiple Instruction, Multiple Data)

MIMD (Multiple Instruction, Multiple Data) architectures execute multiple instructions on multiple data streams simultaneously, enabling parallel processing that significantly outperforms serial execution in complex computing tasks.

Interconnect topology

Parallel interconnects transmit multiple bits at once across multiple channels, offering higher transfer rates per clock cycle, whereas serial interconnects send bits one at a time over a single channel, which simplifies wiring, avoids timing skew between lines, and scales better to high clock rates and long distances.

Data bus width

Parallel data buses transfer multiple bits per clock cycle, so throughput scales with bus width, whereas serial buses transmit one bit at a time over a narrow path and rely on higher clock rates to reach comparable throughput.
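Raw throughput is the product of bus width and transfer rate, so a narrow link can match a wide one if it is clocked fast enough. A minimal sketch with a hypothetical function name and made-up figures, ignoring encoding and protocol overhead:

```python
def throughput_bits_per_second(width_bits, transfers_per_second):
    """Raw bus throughput: bits moved per transfer times transfers per second."""
    return width_bits * transfers_per_second


# Hypothetical figures for illustration only.
parallel_bus = throughput_bits_per_second(32, 100_000_000)   # 32 bits at 100 MHz
serial_link = throughput_bits_per_second(1, 5_000_000_000)   # 1 bit at 5 GHz

if __name__ == "__main__":
    print(parallel_bus, serial_link)
```

With these example numbers the 32-bit bus moves 3.2 Gbit/s and the single-lane serial link moves 5 Gbit/s.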

Instruction-level parallelism

Instruction-level parallelism (ILP) lets a single processor core execute multiple independent instructions from one instruction stream at the same time, through techniques such as superscalar issue and out-of-order execution, improving performance over strictly serial execution that handles one instruction at a time.

Synchronization overhead

Parallel processing can reduce execution time, but coordinating tasks through locks, barriers, and message passing adds synchronization overhead that serial execution avoids by running tasks in a fixed sequence.

Throughput

Parallel processing significantly increases throughput by executing multiple tasks simultaneously, while serial processing handles tasks sequentially, resulting in lower overall throughput.


njnir.com
