Pipelining improves processor throughput by breaking down instruction execution into discrete stages, allowing multiple instructions to overlap in execution. Superscalar architecture increases instruction-level parallelism by issuing multiple instructions per clock cycle across several execution units. Combining both techniques enhances CPU performance by maximizing instruction throughput and minimizing execution latency.

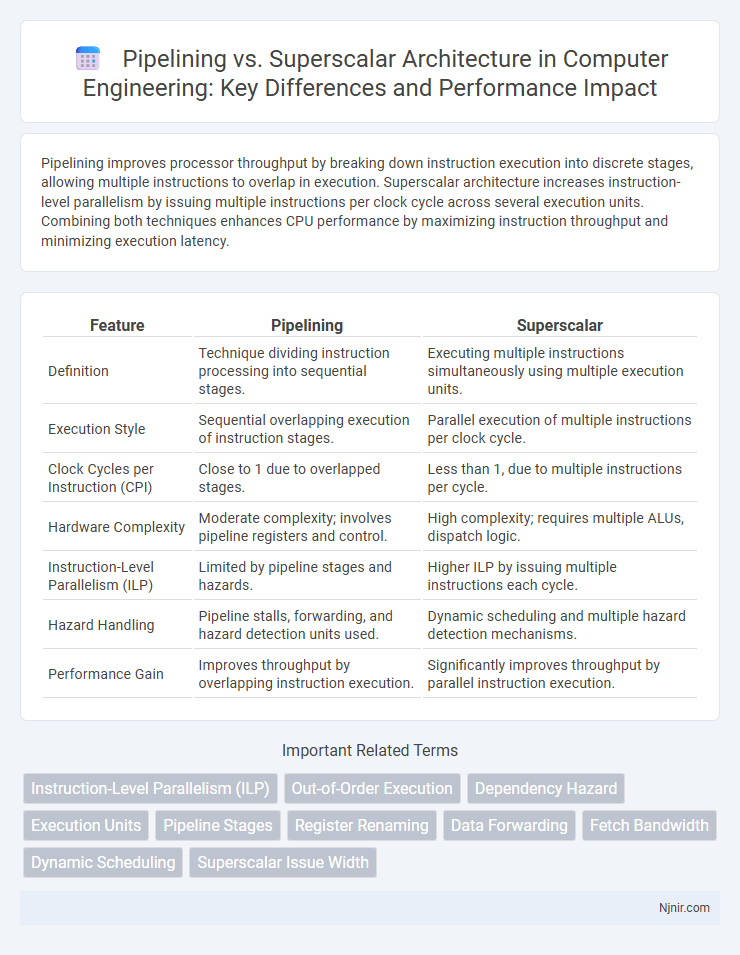

Table of Comparison

| Feature | Pipelining | Superscalar |

|---|---|---|

| Definition | Technique dividing instruction processing into sequential stages. | Executing multiple instructions simultaneously using multiple execution units. |

| Execution Style | Sequential overlapping execution of instruction stages. | Parallel execution of multiple instructions per clock cycle. |

| Clock Cycles per Instruction (CPI) | Close to 1 due to overlapped stages. | Less than 1, due to multiple instructions per cycle. |

| Hardware Complexity | Moderate complexity; involves pipeline registers and control. | High complexity; requires multiple ALUs, dispatch logic. |

| Instruction-Level Parallelism (ILP) | Limited by pipeline stages and hazards. | Higher ILP by issuing multiple instructions each cycle. |

| Hazard Handling | Pipeline stalls, forwarding, and hazard detection units used. | Dynamic scheduling and multiple hazard detection mechanisms. |

| Performance Gain | Improves throughput by overlapping instruction execution. | Significantly improves throughput by parallel instruction execution. |

Introduction to Instruction-Level Parallelism

Instruction-Level Parallelism (ILP) enhances processor performance by executing multiple instructions simultaneously. Pipelining divides instruction execution into discrete stages, increasing throughput by overlapping instruction processing, while superscalar architectures dispatch multiple instructions per clock cycle through parallel functional units. Superscalar designs typically achieve higher ILP by exploiting hardware-level parallelism beyond simple pipeline stages, enabling more efficient utilization of CPU resources.

Defining Pipelining in Computer Architecture

Pipelining in computer architecture is a technique that breaks down instruction execution into discrete stages, allowing multiple instructions to overlap in processing for improved throughput. Each pipeline stage completes a part of an instruction, enabling a new instruction to enter the pipeline before the previous one finishes. This method contrasts with superscalar architectures, which issue multiple instructions simultaneously in parallel pipelines to achieve higher instruction-level parallelism.

Understanding Superscalar Processors

Superscalar processors execute multiple instructions per clock cycle by dispatching them to parallel execution units, significantly increasing instruction throughput compared to traditional pipelining, which processes instructions sequentially in stages. These processors leverage dynamic scheduling, out-of-order execution, and register renaming to overcome pipeline hazards and resource conflicts, enhancing performance beyond basic pipeline improvements. Understanding superscalar architecture involves recognizing how multiple instructions are fetched, decoded, and issued simultaneously, optimizing processor efficiency and reducing execution latency.

Key Differences Between Pipelining and Superscalar

Pipelining enhances CPU instruction throughput by dividing execution into sequential stages, allowing multiple instructions to overlap in processing, whereas superscalar architecture issues multiple instructions per clock cycle through parallel execution units. Superscalar designs rely on dynamic instruction dispatch and multiple functional units to execute instructions simultaneously, while pipelining primarily focuses on increasing instruction processing speed by stage segmentation. The key difference lies in pipelining improving instruction flow in a linear sequence, contrasted with superscalar architectures' ability to exploit instruction-level parallelism for concurrent execution.

Performance Implications of Pipelined Designs

Pipelining improves processor throughput by overlapping instruction execution stages, reducing instruction latency and increasing instruction per cycle (IPC) rates. However, pipeline hazards such as data, control, and structural hazards can introduce stalls and degrade performance, requiring hazard detection and forwarding mechanisms. The depth and complexity of pipeline stages critically influence clock speed and overall processor efficiency in pipelined architectures.

Superscalar Architecture: Advantages and Challenges

Superscalar architecture enhances processor performance by issuing multiple instructions per clock cycle through parallel execution units, significantly increasing instruction throughput compared to traditional pipelining. Advantages include improved utilization of CPU resources, reduced instruction latency, and higher efficiency in handling instruction-level parallelism. Challenges involve increased hardware complexity, difficulties in dynamic instruction scheduling, and the necessity for sophisticated branch prediction and hazard detection mechanisms.

Hazard Detection: Pipelining vs Superscalar

Hazard detection in pipelining involves identifying data, control, and structural hazards that can stall the pipeline or require forwarding to maintain instruction flow. Superscalar architectures intensify hazard detection complexity due to parallel instruction issue, requiring more advanced mechanisms like dynamic scheduling and register renaming to prevent pipeline stalls and resource conflicts. Efficient hazard detection enhances instruction throughput and minimizes execution delays in both pipeline types.

Real-World Examples of Pipelined and Superscalar CPUs

The Intel Pentium processor exemplifies superscalar architecture by executing multiple instructions per clock cycle using dual pipelines, enhancing instruction-level parallelism compared to the MIPS R2000 pipelined CPU, which processes one instruction per cycle through a five-stage pipeline. ARM Cortex-A72 demonstrates superscalar design with out-of-order execution and simultaneous multiple instruction dispatch, whereas the classic RISC-V RV32I core serves as a real-world example of a deeply pipelined scalar CPU prioritizing efficiency. These architectures highlight the performance trade-offs, with superscalar CPUs achieving higher throughput for complex workloads and pipelined CPUs offering simpler, energy-efficient instruction processing.

When to Use Pipelining or Superscalar Approaches

Pipelining is ideal for workloads with predictable instruction sequences and limited data dependencies, maximizing throughput by overlapping instruction phases. Superscalar architectures excel when executing multiple independent instructions simultaneously, leveraging parallel execution units to improve performance in complex or varied instruction streams. Choosing between them depends on workload characteristics: use pipelining for steady, uniform tasks and superscalar designs for dynamic, parallelizable instruction flows.

Future Trends in Processor Parallelism

Future trends in processor parallelism emphasize enhanced pipelining and superscalar techniques, integrating deeper pipeline stages with wider superscalar widths to maximize instruction-level parallelism. Innovations in dynamic scheduling and speculative execution improve utilization of pipeline resources and execute multiple instructions per cycle, driving performance gains in multicore and heterogeneous architectures. Emerging technologies like AI-focused accelerators and quantum-inspired processors further expand parallelism beyond traditional pipelining and superscalar designs, shaping next-generation computing efficiency.

Instruction-Level Parallelism (ILP)

Superscalar architectures exploit higher Instruction-Level Parallelism (ILP) by issuing multiple instructions per cycle through multiple functional units, whereas pipelining increases ILP by overlapping instruction stages to improve throughput without executing multiple instructions simultaneously.

Out-of-Order Execution

Out-of-order execution in superscalar processors enables multiple instructions to be processed simultaneously by dynamically reordering them for optimal utilization of execution units, whereas pipelining processes instructions in a fixed sequential order, limiting parallelism.

Dependency Hazard

Dependency hazards in pipelining occur due to instruction data dependencies limiting parallelism, whereas superscalar architectures mitigate these hazards by enabling multiple instructions to execute simultaneously through dynamic scheduling and register renaming.

Execution Units

Superscalar processors achieve higher instruction throughput by dispatching multiple instructions simultaneously to several execution units, whereas pipelining improves performance by dividing instruction execution into sequential stages within a single execution unit.

Pipeline Stages

Pipeline stages in pipelining sequentially divide instruction execution into distinct steps, while superscalar architectures deploy multiple pipelines simultaneously to execute several instructions per cycle.

Register Renaming

Register renaming in superscalar architectures eliminates false data dependencies and increases instruction-level parallelism beyond the fixed pipeline stages of traditional pipelining.

Data Forwarding

Data forwarding minimizes pipeline hazards by directly transferring data between pipeline stages in pipelining, whereas superscalar architectures rely on multiple parallel execution units and complex scheduling to reduce data dependencies without solely depending on forwarding mechanisms.

Fetch Bandwidth

Superscalar processors achieve higher fetch bandwidth than pipelined processors by fetching multiple instructions per cycle, enabling greater instruction-level parallelism and improved overall performance.

Dynamic Scheduling

Dynamic scheduling in superscalar architectures outperforms traditional pipelining by enabling out-of-order instruction execution and better resource utilization for improved CPU throughput.

Superscalar Issue Width

Superscalar processors achieve higher instruction throughput than pipelining by dispatching multiple instructions per cycle through wider issue widths, typically ranging from 2 to 8 or more, which enables parallel execution units to operate simultaneously.

pipelining vs superscalar Infographic

njnir.com

njnir.com