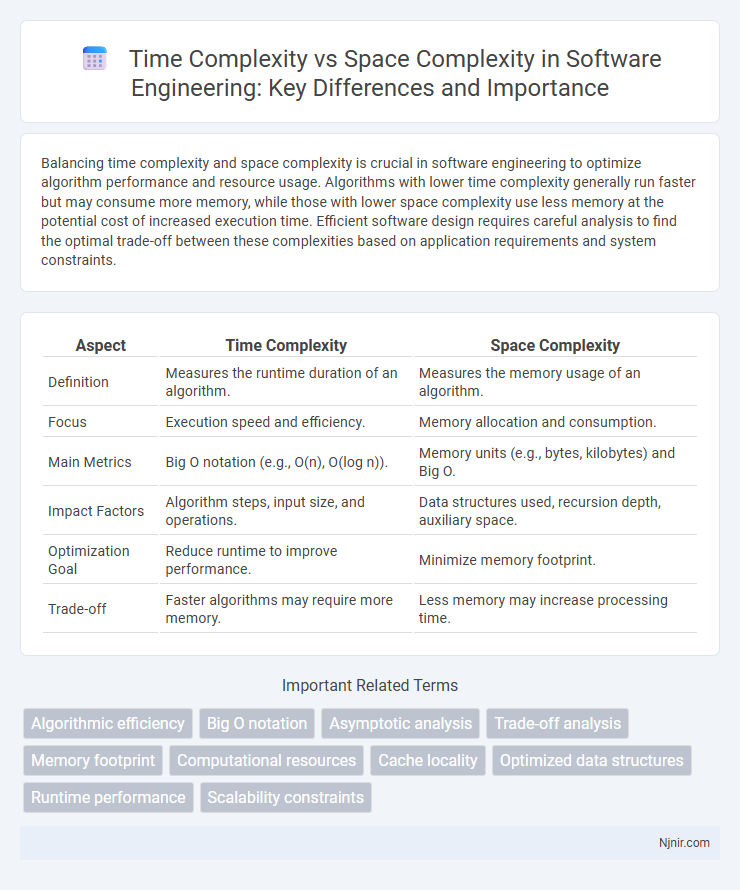

Balancing time complexity and space complexity is crucial in software engineering for optimizing algorithm performance and resource usage. An algorithm with lower time complexity often, though not always, achieves its speed by using more memory, while one with lower space complexity may pay for its smaller footprint with longer execution time. Good software design analyzes both and chooses the trade-off that best fits the application's requirements and the system's constraints.

Table of Comparison

| Aspect | Time Complexity | Space Complexity |
|---|---|---|
| Definition | Describes how an algorithm's running time (number of basic operations) grows with input size. | Describes how an algorithm's memory usage grows with input size. |
| Focus | Execution speed and efficiency. | Memory allocation and consumption. |
| Main Metrics | Big O notation (e.g., O(n), O(log n)); measured in practice as wall-clock or CPU time. | Big O notation (e.g., O(1), O(n)); measured in practice in bytes or kilobytes. |
| Impact Factors | Number of algorithm steps, input size, and cost of each operation. | Data structures used, recursion depth, auxiliary space. |
| Optimization Goal | Reduce runtime to improve performance. | Minimize memory footprint. |
| Trade-off | Faster algorithms may require more memory. | Using less memory may increase processing time. |

Understanding Time Complexity in Software Engineering

Time complexity describes how the running time of an algorithm, usually counted as the number of basic operations, grows with input size. It is commonly expressed in Big O notation such as O(n), O(log n), or O(n²). Time complexity strongly affects performance in large-scale applications, where a better growth rate reduces latency and resource consumption. Analyzing it helps developers select or design algorithms that meet performance requirements without excessive processing delays.
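To make "growth relative to input size" concrete, the minimal sketch below counts basic steps instead of timing them, so the result does not depend on the machine. The function names `count_linear_steps` and `count_pairwise_steps` are hypothetical: the first touches each element once (O(n)), the second compares every unordered pair of elements (O(n²)).

```python
def count_linear_steps(items):
    """Touch each element once: the step count grows linearly, O(n)."""
    steps = 0
    for _ in items:
        steps += 1
    return steps


def count_pairwise_steps(items):
    """Compare every unordered pair once: n*(n-1)/2 steps, which is O(n^2)."""
    steps = 0
    n = len(items)
    for i in range(n):
        for _ in range(i + 1, n):
            steps += 1
    return steps


for n in (10, 100, 1000):
    data = list(range(n))
    # Prints: n, linear step count, pairwise step count
    print(n, count_linear_steps(data), count_pairwise_steps(data))
```

Multiplying n by 10 multiplies the linear count by 10 but the pairwise count by roughly 100 (45, 4950, 499500), which is exactly the difference that O(n) and O(n²) describe.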

Defining Space Complexity and Its Importance

Space complexity measures how much memory an algorithm requires relative to input size, including variables, data structures, and call stack usage. Understanding it is crucial for allocating resources well, particularly in environments with limited memory. Managing space efficiently prevents out-of-memory failures and can improve overall system performance, because heavy memory use can slow execution through allocation overhead, cache misses, or paging.
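The gap between O(n) and O(1) auxiliary space shows up even in a task as small as reversing a list. In this sketch (function names are hypothetical), `reversed_copy` allocates a second list as large as the input, while `reverse_in_place` keeps only two index variables no matter how large the input is.

```python
def reversed_copy(items):
    """Return a new reversed list: O(n) auxiliary space for the copy."""
    result = []
    for index in range(len(items) - 1, -1, -1):
        result.append(items[index])
    return result


def reverse_in_place(items):
    """Reverse by swapping from both ends: O(1) auxiliary space (two indices)."""
    left, right = 0, len(items) - 1
    while left < right:
        items[left], items[right] = items[right], items[left]
        left += 1
        right -= 1
    return items


original = [1, 2, 3, 4, 5]
backwards = reversed_copy(original)   # original is left untouched
same = reverse_in_place(original)     # original itself is modified
print(backwards, same is original)    # [5, 4, 3, 2, 1] True
```

Both versions run in O(n) time; the in-place one saves memory at the cost of mutating its input, which is itself a design trade-off worth making explicit.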

Key Differences Between Time and Space Complexity

Time complexity measures how the computational time an algorithm takes grows with input size, while space complexity measures how the memory it requires during execution grows. Both are usually expressed in Big O notation: for time, a bound such as O(n) describes the number of operations; for space, it describes storage such as variables, data structures, and call stack frames. The key difference is what is being counted, execution steps versus memory, and each affects overall algorithm efficiency in its own way.

Big O Notation: Measuring Efficiency

Big O notation describes an asymptotic upper bound on how an algorithm's cost grows with input size, and it applies to both time complexity and space complexity. For time, it bounds the number of operations an algorithm performs as input scales; for space, it bounds the additional (auxiliary) memory required during execution. Understanding these bounds helps in selecting algorithms that balance speed and memory usage for the workload at hand.
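The standard formal definition behind this notation, followed by a worked instance, is sketched below. The same definition applies whether f(n) counts operations (time) or memory cells (space); the constants c = 10 and n0 = 1 in the example are one valid choice, not the only one.

```latex
f(n) = O(g(n)) \iff \exists\, c > 0,\ n_0 \ge 1 \text{ such that } 0 \le f(n) \le c \cdot g(n) \text{ for all } n \ge n_0

\text{Example: } 3n^2 + 5n + 2 \le 3n^2 + 5n^2 + 2n^2 = 10n^2 \text{ for all } n \ge 1,
\text{ so } 3n^2 + 5n + 2 = O(n^2) \text{ with } c = 10,\ n_0 = 1.
```

Lower-order terms and constant factors are absorbed into c, which is why Big O keeps only the dominant growth term.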

Trade-offs: Balancing Speed and Memory Usage

Balancing time complexity and space complexity often requires trade-offs: improving speed may demand more memory, while reducing memory can slow execution. Techniques such as caching and memoization consume extra space to return previously computed results faster, whereas in-place algorithms minimize auxiliary memory but may run slower. Understanding the specific constraints and requirements of a problem helps strike the right balance between computational time and memory resources.
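Memoization is the textbook case of spending memory to save time. The sketch below (function names are illustrative) counts calls for a naive recursive Fibonacci and for a version that stores results in a dictionary: the naive version makes an exponential number of calls, while the memoized one makes O(n) calls but holds O(n) cached values.

```python
def fib_naive(n, counter):
    """Plain recursion: recomputes subproblems, exponential number of calls.

    `counter` is a one-element list used as a mutable call counter.
    """
    counter[0] += 1
    if n < 2:
        return n
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)


def fib_memo(n, counter, cache=None):
    """Memoized recursion: each n is computed once, using O(n) extra memory."""
    if cache is None:
        cache = {}
    counter[0] += 1
    if n < 2:
        return n
    if n not in cache:
        cache[n] = fib_memo(n - 1, counter, cache) + fib_memo(n - 2, counter, cache)
    return cache[n]


naive_calls, memo_calls = [0], [0]
print(fib_naive(20, naive_calls), naive_calls[0])  # 6765 21891
print(fib_memo(20, memo_calls), memo_calls[0])     # 6765 39
```

In everyday Python code, the standard-library decorators `functools.lru_cache` and `functools.cache` provide the same kind of caching without a hand-written dictionary.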

Practical Examples: Time vs Space Optimization

In practical programming, trade-offs between time complexity and space complexity often determine which approach is most efficient. For example, hash tables provide O(1) average-case lookups (O(n) in the worst case) by reserving extra memory for buckets, whereas binary search over a sorted array needs no memory beyond the data itself but takes O(log n) per lookup; balanced binary search trees also guarantee O(log n) lookups. Compression algorithms favor space by reducing data size at the expense of extra time spent encoding and decoding.
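The lookup side of this trade-off can be sketched with Python's built-in `set`, which is a hash table, and the standard `bisect` module over a sorted list, a simple stand-in for an ordered structure with O(log n) lookups. The helper name `contains_sorted` is hypothetical; the set answers membership in O(1) on average while over-allocating its table, and binary search answers it in O(log n) without any structure beyond the sorted data.

```python
import bisect
import sys


def contains_sorted(sorted_items, target):
    """Binary search membership test: O(log n) time, O(1) extra space."""
    index = bisect.bisect_left(sorted_items, target)
    return index < len(sorted_items) and sorted_items[index] == target


values = list(range(0, 100_000, 3))   # already sorted
value_set = set(values)               # hash table built from the same data

print(99 in value_set, contains_sorted(values, 99))    # True True
print(100 in value_set, contains_sorted(values, 100))  # False False

# Container overhead only (sys.getsizeof excludes the int objects themselves);
# on CPython the set's table is typically larger than the list's array.
print(sys.getsizeof(values), sys.getsizeof(value_set))
```

The exact byte counts depend on the interpreter version and platform, so treat them as indicative rather than fixed.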

Common Pitfalls in Complexity Analysis

Common pitfalls in complexity analysis include evaluating an algorithm at only a single input size, which leads to over- or underestimating its efficiency as inputs grow. Confusing worst-case behavior with average-case or best-case behavior can create misleading performance expectations. Finally, optimizing for time while ignoring space complexity often causes excessive memory consumption that degrades overall system performance.
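Confusing best, average, and worst case is easy to illustrate with linear search. The sketch below (the function name is illustrative) returns the number of comparisons made: a target at the front costs one comparison, a missing target costs n, and a target at a uniformly random position costs (n + 1) / 2 on average.

```python
def linear_search_comparisons(items, target):
    """Return how many comparisons a left-to-right linear search performs."""
    comparisons = 0
    for item in items:
        comparisons += 1
        if item == target:
            break
    return comparisons


data = list(range(1, 1001))  # 1000 distinct values
best = linear_search_comparisons(data, 1)    # target is first
worst = linear_search_comparisons(data, -1)  # target is absent
average = sum(linear_search_comparisons(data, x) for x in data) / len(data)
print(best, worst, average)  # 1 1000 500.5
```

Quoting only the best case (O(1)) would badly understate the cost of this algorithm; both the worst and average cases are O(n).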

Real-World Case Studies: Complexity Impacts

In real-world systems, time complexity directly affects application responsiveness and user experience; on e-commerce sites, for instance, faster search algorithms reduce latency. Space complexity influences resource allocation and scalability, as with mobile apps that must keep memory usage low to run well on devices with limited RAM. Balancing the two keeps software performant and cost-effective when deployed at scale.

Best Practices for Managing Complexity

Efficient algorithm design balances time complexity and space complexity by minimizing resource usage without sacrificing performance. Choosing appropriate data structures, such as hash tables or balanced trees, improves time complexity while keeping memory consumption under control. Profiling tools and complexity analysis help developers make informed decisions about these trade-offs in software applications.
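Complexity analysis predicts growth; profiling measures what actually happens. A minimal sketch using only the standard library: `time.perf_counter` for elapsed time and `tracemalloc` for peak traced Python memory. The helper `measure` and the two workloads are hypothetical examples, and the printed numbers will vary by machine and interpreter.

```python
import time
import tracemalloc


def measure(func, *args):
    """Run func(*args); return (result, elapsed seconds, peak traced bytes)."""
    tracemalloc.start()
    start = time.perf_counter()
    result = func(*args)
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
    tracemalloc.stop()
    return result, elapsed, peak


def sum_with_list(n):
    # Materializes all n squares first: O(n) extra memory.
    return sum([i * i for i in range(n)])


def sum_with_generator(n):
    # Produces squares one at a time: O(1) extra memory.
    return sum(i * i for i in range(n))


for workload in (sum_with_list, sum_with_generator):
    total, seconds, peak_bytes = measure(workload, 200_000)
    print(f"{workload.__name__}: total={total} time={seconds:.4f}s peak={peak_bytes} B")
```

Because `tracemalloc` adds its own overhead, careful measurements often time a workload and trace its memory in separate runs.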

Future Trends in Algorithmic Complexity

Future trends in algorithmic complexity emphasize the development of algorithms that balance time complexity and space complexity more efficiently, adapting to evolving hardware architectures like quantum and neuromorphic computing. Innovations in approximation algorithms and machine learning-driven heuristics aim to optimize resource utilization by minimizing both execution time and memory consumption. Research in parameterized complexity and fine-grained complexity reveals potential for more precise trade-offs, enabling scalable solutions for big data and real-time processing applications.

Algorithmic efficiency

Algorithmic efficiency weighs time complexity (how running time grows with input size) against space complexity (how memory usage grows) to optimize overall performance.

Big O notation

Big O notation quantifies algorithm efficiency by expressing time complexity as the growth rate of runtime with input size and space complexity as the growth rate of memory usage relative to input size.

Asymptotic analysis

Asymptotic analysis evaluates time complexity by measuring how the runtime of an algorithm scales with input size, while space complexity assesses memory usage growth relative to input size, both expressed using Big O notation to provide performance bounds for large inputs.

Trade-off analysis

Optimizing algorithms involves a trade-off analysis between time complexity, which measures execution speed, and space complexity, which evaluates memory usage, requiring careful balance based on system constraints and performance goals.

Memory footprint

Memory footprint, the concrete amount of RAM an algorithm or program uses, is the practical counterpart of space complexity. In memory-constrained environments it can limit performance and scalability more than time complexity does.

Computational resources

Time complexity measures the amount of computational time required by an algorithm, while space complexity quantifies the memory resources needed during execution.

Cache locality

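Cache locality improves actual runtime by reducing memory access latency, even though it does not change an algorithm's asymptotic time complexity. It does not inherently cost extra space: contiguous layouts such as arrays often have better locality and lower memory overhead than pointer-based structures, although some techniques, such as padding or data duplication, trade extra memory for fewer cache misses.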

Optimized data structures

Optimized data structures balance time complexity by enabling faster access and manipulation while minimizing space complexity through efficient memory usage.
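Picking a structure that matches the access pattern is often the cheapest optimization. As one illustration, using a Python `list` as a first-in, first-out queue makes `pop(0)` O(n) because every remaining element shifts, while `collections.deque` supports `popleft()` in O(1) while storing the same elements. The function names below are hypothetical.

```python
from collections import deque


def drain_list_queue(n):
    """FIFO via list.pop(0): each pop shifts the remaining items, O(n^2) total."""
    queue = list(range(n))
    order = []
    while queue:
        order.append(queue.pop(0))
    return order


def drain_deque_queue(n):
    """FIFO via deque.popleft(): O(1) per pop, O(n) total."""
    queue = deque(range(n))
    order = []
    while queue:
        order.append(queue.popleft())
    return order


# Both produce the same first-in, first-out order; only the cost differs.
print(drain_list_queue(5) == drain_deque_queue(5) == [0, 1, 2, 3, 4])  # True
```

For small queues the difference is negligible; it becomes significant as n grows, which is exactly where the choice of data structure pays off.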

Runtime performance

Runtime performance depends primarily on time complexity, which describes how execution time grows with input size. Space complexity governs memory usage and does not directly set the growth rate of runtime, although heavy memory use can still slow execution through allocation, cache misses, or paging.

Scalability constraints

Time complexity determines how execution time scales with input size, and space complexity determines how memory usage scales; together they set the limits on how far an algorithm can scale when computational resources are constrained.

njnir.com