Edge AI processes data locally on devices, reducing latency and enhancing privacy by minimizing data transmission to central servers. Cloud AI leverages powerful centralized systems for complex computations and large-scale model training, enabling more extensive data analysis and updates. Balancing Edge AI and Cloud AI optimizes performance, scalability, and security in computer engineering applications.

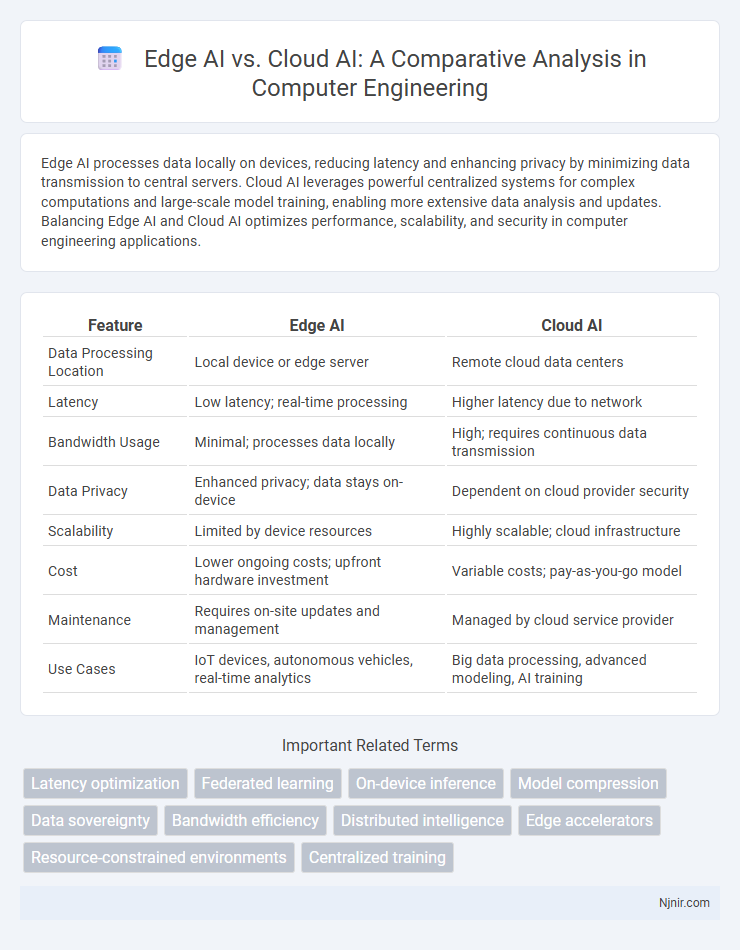

Table of Comparison

| Feature | Edge AI | Cloud AI |
|---|---|---|
| Data Processing Location | Local device or edge server | Remote cloud data centers |
| Latency | Low latency; real-time processing | Higher latency due to network |
| Bandwidth Usage | Minimal; processes data locally | High; requires continuous data transmission |
| Data Privacy | Enhanced privacy; data stays on-device | Dependent on cloud provider security |
| Scalability | Limited by device resources | Highly scalable; cloud infrastructure |
| Cost | Lower ongoing costs; upfront hardware investment | Variable costs; pay-as-you-go model |
| Maintenance | Requires on-site updates and management | Managed by cloud service provider |
| Use Cases | IoT devices, autonomous vehicles, real-time analytics | Big data processing, advanced modeling, AI training |

Introduction to Edge AI and Cloud AI

Edge AI processes data locally on devices, reducing latency and enhancing real-time decision-making for applications like autonomous vehicles and IoT devices. Cloud AI relies on centralized data centers with vast computational power, providing scalable machine learning services and enabling complex data analysis and model training. Choosing between Edge AI and Cloud AI depends on factors such as response time requirements, data privacy, and computational resources.

Core Differences Between Edge AI and Cloud AI

Edge AI processes data locally on devices, enabling real-time decision-making with low latency and enhanced privacy by minimizing data transmission to external servers. Cloud AI relies on centralized data centers with vast computational power, allowing for complex algorithm training and large-scale data integration but often experiencing higher latency and potential privacy concerns. Key differences include data processing location, latency levels, computational resource distribution, and privacy control mechanisms.

Architecture Overview: Edge Devices vs. Cloud Platforms

Edge AI architecture deploys machine learning models directly on edge devices such as IoT sensors, smartphones, and embedded systems, enabling real-time data processing with minimal latency and enhanced privacy. Cloud AI relies on centralized cloud platforms like AWS, Google Cloud, and Azure, which provide vast computational resources and scalability for training complex models and handling massive datasets. Edge AI reduces dependency on continuous internet connectivity, while Cloud AI excels in managing high volumes of data and performing resource-intensive analytics.

Latency and Real-Time Processing Considerations

Edge AI significantly reduces latency by processing data locally on devices such as smartphones or IoT sensors, enabling real-time decision-making without relying on cloud connectivity. Cloud AI, while offering extensive computational power and large-scale data analysis, often struggles with higher latency due to data transmission delays between devices and centralized data centers. In applications requiring immediate responses, such as autonomous vehicles or industrial automation, Edge AI's low-latency processing is critical for ensuring timely and accurate outcomes.
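The latency trade-off can be made concrete with a simple budget check. The sketch below is illustrative only: the `LatencyEstimate` type, the `meets_deadline` helper, and all millisecond figures are assumptions chosen for the example, not measurements from any real system.

```python
from dataclasses import dataclass


@dataclass
class LatencyEstimate:
    """End-to-end latency components, all in milliseconds."""
    network_round_trip_ms: float  # 0 for purely on-device inference
    inference_ms: float           # time the model itself takes

    @property
    def total_ms(self) -> float:
        return self.network_round_trip_ms + self.inference_ms


def meets_deadline(estimate: LatencyEstimate, deadline_ms: float) -> bool:
    """Return True if the estimated end-to-end latency fits the deadline."""
    return estimate.total_ms <= deadline_ms


# Illustrative numbers only: a slower model on the device versus a faster
# model in the cloud that pays for a network round trip.
edge = LatencyEstimate(network_round_trip_ms=0.0, inference_ms=25.0)
cloud = LatencyEstimate(network_round_trip_ms=80.0, inference_ms=5.0)

deadline_ms = 50.0
print(meets_deadline(edge, deadline_ms))   # True: 25 ms fits in 50 ms
print(meets_deadline(cloud, deadline_ms))  # False: 85 ms exceeds 50 ms
```

Even when the cloud model itself runs faster, the network round trip can dominate the total, which is why hard real-time loops tend to keep inference on the device.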

Data Privacy and Security Implications

Edge AI processes data locally on devices, significantly reducing the risk of exposing sensitive information compared to Cloud AI, which relies on transmitting data to centralized servers. By minimizing data transfer, Edge AI enhances privacy and mitigates vulnerabilities related to network interception or cloud storage breaches. Cloud AI, while offering scalable computing power, demands robust encryption and strict compliance measures to protect data during transmission and storage, highlighting a critical trade-off in security strategies.

Scalability and Resource Management

Edge AI scales by adding devices: each device processes its own data, which reduces dependency on centralized cloud infrastructure and keeps latency low for real-time applications, although every device remains limited by its own compute and memory. Distributing computational workloads across edge devices also lowers bandwidth usage and can reduce the energy spent on data transmission. Cloud AI, while highly scalable with elastic, on-demand computational power and storage, can face network congestion and added latency because all data must travel to centralized infrastructure.

Power Efficiency and Hardware Constraints

Edge AI can improve power efficiency on battery-powered devices by processing data locally, avoiding the energy cost of continuously transmitting data to the cloud. Hardware constraints on edge devices, such as limited compute power and memory, necessitate specialized chips like AI accelerators and low-power processors to balance performance and energy use. Cloud AI offers scalable compute resources but shifts energy consumption to data centers and the network and adds network latency, making Edge AI more suitable for real-time, energy-sensitive applications.

Use Cases: Where Edge AI Excels vs. Cloud AI

Edge AI excels in real-time data processing for autonomous vehicles, industrial automation, and smart cameras, where low latency and immediate decision-making are critical. Cloud AI is ideal for large-scale data analysis, complex model training, and applications requiring vast computational resources, such as natural language processing and advanced analytics. Use cases like predictive maintenance and personalized healthcare benefit from Edge AI's local processing, while cloud-driven AI supports global pattern recognition and extensive data aggregation.

Challenges and Limitations of Both Approaches

Edge AI faces challenges such as limited computational power, energy constraints, and difficulties in handling complex models locally, leading to potential sacrifices in accuracy and scalability. Cloud AI struggles with latency, bandwidth dependency, and privacy concerns due to centralized data processing, which can hinder real-time applications and data security. Both approaches must balance trade-offs between performance, cost, data privacy, and infrastructure complexity to optimize AI deployment.

Future Trends in Edge AI and Cloud AI Integration

Future trends in Edge AI and Cloud AI integration emphasize hybrid architectures that combine low-latency processing at the edge with the expansive computational power of cloud infrastructure. Advances in 5G and the proliferation of IoT devices enhance real-time data analytics, enabling closer collaboration between edge devices and cloud platforms for optimized decision-making. Enhanced security protocols and federated learning models are increasingly adopted to maintain data privacy while maximizing AI efficiency across both edge and cloud environments.
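One common shape for such a hybrid architecture is "edge first, cloud on demand": a small on-device model answers when it is confident, and only uncertain samples are escalated. The sketch below is a minimal illustration under stated assumptions. The `hybrid_predict` function, the confidence threshold, and both stand-in models are hypothetical and not taken from any particular framework.

```python
from typing import Callable, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def hybrid_predict(
    sample: object,
    edge_model: Callable[[object], Prediction],
    cloud_model: Optional[Callable[[object], Prediction]],
    confidence_threshold: float = 0.8,
) -> Tuple[str, str]:
    """Return (label, source): try the edge first, escalate when unsure.

    cloud_model may be None to represent "no connectivity"; the edge result
    is then used regardless of its confidence.
    """
    label, confidence = edge_model(sample)
    if confidence >= confidence_threshold or cloud_model is None:
        return label, "edge"
    try:
        cloud_label, _ = cloud_model(sample)
        return cloud_label, "cloud"
    except ConnectionError:
        # Network failure: degrade gracefully to the local answer.
        return label, "edge"


# Hypothetical stand-in models, used only to exercise the routing logic.
def small_edge_model(sample: object) -> Prediction:
    return ("cat", 0.95) if sample == "clear image" else ("cat", 0.55)


def large_cloud_model(sample: object) -> Prediction:
    return ("lynx", 0.99)


print(hybrid_predict("clear image", small_edge_model, large_cloud_model))
print(hybrid_predict("blurry image", small_edge_model, large_cloud_model))
print(hybrid_predict("blurry image", small_edge_model, None))
```

The design choice here is that the device never blocks on the network for confident predictions, and a lost connection degrades accuracy rather than availability.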

Latency optimization

Edge AI dramatically reduces latency by processing data locally on devices, enabling real-time decision-making compared to Cloud AI's higher latency due to data transmission to remote servers.

Federated learning

Edge AI can use federated learning to train models in a decentralized way: each device trains on its own data and shares only model updates, which preserves data privacy and reduces the volume of raw data transmitted compared to Cloud AI's centralized approach.
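The core idea can be sketched with federated averaging on a toy problem. This is a simplified illustration, not a production implementation: the linear model, the two client datasets, the learning rate, and the helper names `local_update` and `federated_average` are all assumptions made for the example. Only the weights leave each client; the raw `(x, y)` samples never do.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)


def local_update(weights: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Train y ~ w*x + b on one device's private data; return new [w, b]."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error on the local data only.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


# Hypothetical private datasets, both generated from y = 2x + 1.
client_data = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(-1.0, -1.0), (0.5, 2.0), (1.5, 4.0), (3.0, 7.0)],
]

global_weights = [0.0, 0.0]
for _ in range(50):  # communication rounds
    updates = [local_update(global_weights, data) for data in client_data]
    sizes = [len(data) for data in client_data]
    global_weights = federated_average(updates, sizes)

print(global_weights)  # should approach [2.0, 1.0]
```

Real deployments add client sampling, secure aggregation, and handling for devices that drop out mid-round, all of which this sketch omits.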

On-device inference

On-device inference in Edge AI enables real-time data processing with lower latency and enhanced privacy compared to Cloud AI, which relies on remote servers for computation.

Model compression

Edge AI utilizes advanced model compression techniques such as quantization and pruning to enable efficient on-device inference, while Cloud AI leverages scalable resources to run larger, uncompressed models for higher accuracy.
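Both techniques are easy to illustrate on a plain list of weights. The sketch below assumes symmetric per-tensor int8 quantization and unstructured magnitude pruning, which are only two of several possible variants. The function names and the sample weights are made up for the example, and real toolchains apply these steps per layer, usually with calibration or fine-tuning.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    n_prune = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in by_magnitude[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.02, -1.27, 0.64, -0.01, 0.33, 0.90]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(quantized)                         # [2, -127, 64, -1, 33, 90]
print(max_error <= scale / 2 + 1e-12)    # True: error bounded by half a step
print(prune_by_magnitude(weights, 0.5))  # [0.0, -1.27, 0.64, 0.0, 0.0, 0.9]
```

Quantization cuts storage from 32 bits to 8 bits per weight at the cost of a bounded rounding error, while pruning makes the weight tensor sparse so that zeros can be skipped or compressed.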

Data sovereignty

Edge AI supports data sovereignty by processing sensitive information locally on devices, reducing reliance on centralized Cloud AI platforms that may transfer data across international borders.

Bandwidth efficiency

Edge AI processes data locally on devices, significantly reducing bandwidth usage compared to Cloud AI, which requires continuous data transmission to centralized servers.
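A back-of-the-envelope calculation shows the scale of the difference for a camera workload. All figures below (resolution, frame rate, compression ratio, event rate, and event size) are illustrative assumptions, and the two helper functions are hypothetical names introduced for this sketch.

```python
def stream_bandwidth_mbps(width: int, height: int, bytes_per_pixel: int,
                          fps: float, compression_ratio: float = 1.0) -> float:
    """Megabits per second needed to upload a video stream to the cloud."""
    bits_per_second = width * height * bytes_per_pixel * 8 * fps / compression_ratio
    return bits_per_second / 1_000_000


def event_bandwidth_mbps(events_per_second: float, bytes_per_event: int) -> float:
    """Megabits per second when the device uploads only detection events."""
    return events_per_second * bytes_per_event * 8 / 1_000_000


# Cloud AI: stream 720p RGB video at 30 fps, assuming 50:1 compression.
cloud_mbps = stream_bandwidth_mbps(1280, 720, 3, 30, compression_ratio=50)

# Edge AI: run detection on-device and upload two 200-byte events per second.
edge_mbps = event_bandwidth_mbps(events_per_second=2, bytes_per_event=200)

print(f"cloud streaming: {cloud_mbps:.2f} Mbit/s")  # 13.27 Mbit/s
print(f"edge events:     {edge_mbps:.4f} Mbit/s")   # 0.0032 Mbit/s
```

Under these assumptions the edge deployment uses several thousand times less uplink bandwidth per camera, a gap that grows linearly with the number of devices.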

Distributed intelligence

Distributed intelligence spreads AI workloads across both tiers: edge devices process data locally for real-time responsiveness and reduced latency, while Cloud AI contributes centralized servers with extensive computational power for aggregation and large-scale analysis.

Edge accelerators

Edge AI leverages specialized edge accelerators such as NVIDIA Jetson and Google Coral to perform low-latency, on-device processing, contrasting with Cloud AI's reliance on centralized servers for extensive computational resources and large-scale data analytics.

Resource-constrained environments

Edge AI enables real-time data processing and decision-making directly on devices with limited computing power, reducing latency and bandwidth use, while Cloud AI relies on powerful centralized servers and is therefore limited by the unreliable or low-bandwidth connectivity typical of resource-constrained environments.

Centralized training

Centralized training in Cloud AI enables large-scale data aggregation and powerful model optimization, whereas training on edge devices calls for decentralized approaches, such as federated learning, that accommodate limited local computational resources and real-time processing needs.

Edge AI vs Cloud AI Infographic

njnir.com
