TensorFlow Lite excels in deploying machine learning models on mobile and embedded devices due to its lightweight architecture and extensive optimization for Android and iOS platforms. ONNX provides a versatile open-source format that facilitates interoperability between various deep learning frameworks, enabling seamless model conversion and deployment across different environments. Choosing between TensorFlow Lite and ONNX depends on specific project requirements, such as device constraints and framework compatibility.

Table of Comparison

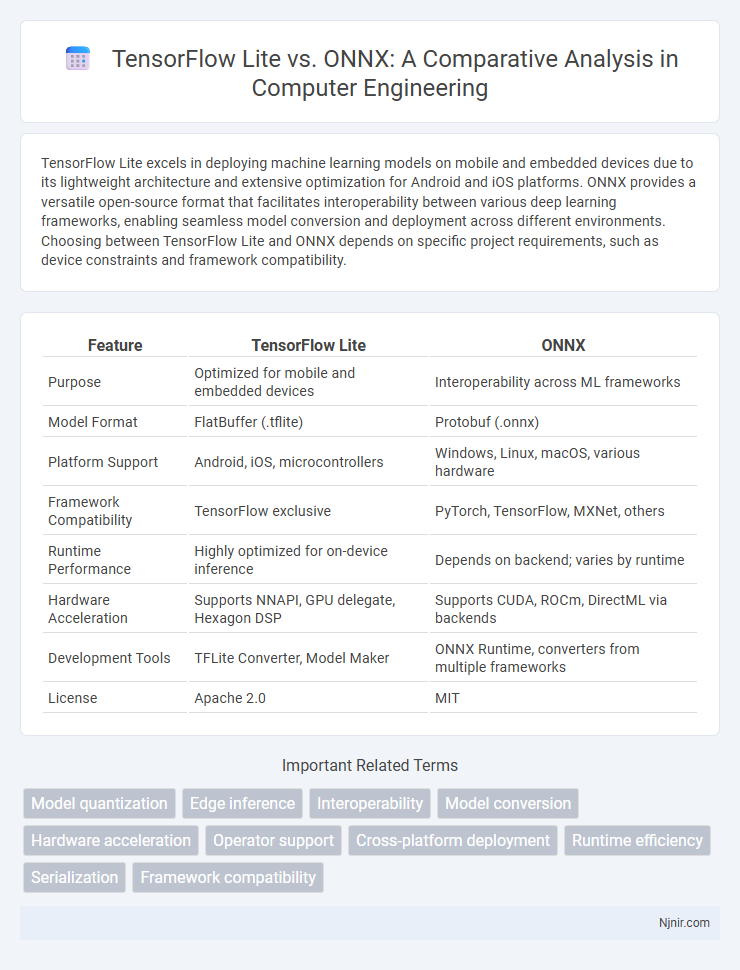

| Feature | TensorFlow Lite | ONNX |

|---|---|---|

| Purpose | Optimized for mobile and embedded devices | Interoperability across ML frameworks |

| Model Format | FlatBuffer (.tflite) | Protobuf (.onnx) |

| Platform Support | Android, iOS, microcontrollers | Windows, Linux, macOS, various hardware |

| Framework Compatibility | TensorFlow exclusive | PyTorch, TensorFlow, MXNet, others |

| Runtime Performance | Highly optimized for on-device inference | Depends on backend; varies by runtime |

| Hardware Acceleration | Supports NNAPI, GPU delegate, Hexagon DSP | Supports CUDA, ROCm, DirectML via backends |

| Development Tools | TFLite Converter, Model Maker | ONNX Runtime, converters from multiple frameworks |

| License | Apache 2.0 | MIT |

Overview of TensorFlow Lite and ONNX

TensorFlow Lite is a lightweight, cross-platform deep learning framework designed for deploying machine learning models on mobile and embedded devices, providing optimized performance and reduced latency. ONNX (Open Neural Network Exchange) is an open-source format that facilitates interoperability between different deep learning frameworks, enabling models trained in one framework to be deployed across various platforms. Both technologies support edge AI implementations, with TensorFlow Lite focusing on efficient on-device inference and ONNX emphasizing model portability and compatibility.

Supported Platforms and Device Compatibility

TensorFlow Lite supports a wide range of platforms including Android, iOS, embedded Linux, and microcontrollers, offering extensive device compatibility for mobile and edge deployments. ONNX provides interoperability by supporting multiple frameworks and can be deployed on platforms such as Windows, Linux, and cloud environments, with device compatibility extending to GPUs, CPUs, and custom accelerators via ONNX Runtime. Both frameworks enable optimized inference on diverse hardware but TensorFlow Lite's specialization in mobile and embedded systems contrasts with ONNX's broader ecosystem integration and flexibility across varied devices.

Model Format and Conversion Workflow

TensorFlow Lite utilizes a flatbuffer-based model format (.tflite) designed for low-latency inference on edge devices, enabling efficient model compression and optimization. ONNX employs a protocol buffer format (.onnx) with a standardized operator set for interoperability between diverse AI frameworks, streamlining model exchange and integration. The conversion workflow for TensorFlow Lite involves TensorFlow model export followed by TFLite Converter optimization, whereas ONNX models are commonly exported from PyTorch or TensorFlow using ONNX exporters, supporting seamless framework transitions and hardware deployment flexibility.

Performance and Latency Benchmarks

TensorFlow Lite delivers superior performance on ARM-based mobile devices, leveraging optimized delegates like NNAPI and GPU acceleration to reduce inference latency significantly. ONNX Runtime excels in cross-platform deployment with hardware acceleration support such as CUDA and DirectML, showing lower latency in CPU-heavy workloads and edge devices with heterogeneous resources. Benchmarks reveal TensorFlow Lite achieves faster startup times and smaller binary sizes, whereas ONNX Runtime provides more flexible integration and often outperforms in mixed-precision and transformer-based model inference scenarios.

Supported Machine Learning Models and Operations

TensorFlow Lite supports a wide range of TensorFlow models, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer models, optimized for mobile and edge deployments with efficient operations like quantization and pruning. ONNX provides extensive compatibility across various frameworks such as PyTorch, Caffe2, and Microsoft Cognitive Toolkit, supporting numerous model types including CNNs, RNNs, and custom operators with flexibility for diverse hardware accelerators. Both frameworks emphasize optimized execution of core machine learning operations like convolutions, matrix multiplications, and activation functions but differ in their model conversion and operation support, with ONNX offering a broader cross-platform interoperability and TensorFlow Lite focusing on lightweight inference.

Integration with Edge and Mobile Devices

TensorFlow Lite offers seamless integration with a wide range of edge and mobile devices through optimized libraries and pre-built kernels, enabling efficient model deployment on Android and iOS platforms. ONNX provides interoperability across diverse frameworks and supports edge device execution via runtimes like ONNX Runtime and integration with hardware accelerators, ensuring flexibility in deployment scenarios. Both frameworks facilitate reduced latency and lower power consumption by leveraging quantization and hardware-specific optimizations tailored for on-device AI inference.

Community Support and Documentation

TensorFlow Lite benefits from a vast and active community supported by Google, with comprehensive, regularly updated documentation and extensive tutorials that facilitate mobile and embedded deployment. ONNX, backed by a broad ecosystem including Microsoft and Meta, offers robust documentation and support, though community size and resources are growing compared to TensorFlow Lite's maturity. Both frameworks provide strong developer forums and GitHub repositories, but TensorFlow Lite's larger user base enables quicker issue resolution and richer knowledge-sharing.

Deployment Flexibility and Ecosystem

TensorFlow Lite offers extensive deployment flexibility across mobile, embedded, and IoT devices with optimized performance for Android and iOS platforms, leveraging TensorFlow's broad ecosystem. ONNX provides interoperability between various deep learning frameworks like PyTorch, Caffe2, and MXNet, enabling seamless model deployment across diverse hardware accelerators and runtime environments. TensorFlow Lite's ecosystem benefits from integrated support with TensorFlow Hub and TensorFlow Model Garden, while ONNX's growing ecosystem is boosted by ONNX Runtime's cross-platform support and compatibility with numerous hardware vendors.

Security and Model Protection Features

TensorFlow Lite offers model encryption and hardware-backed key management for enhanced security on Android devices, while ONNX relies on platform-specific secure execution environments without a unified encryption standard. TensorFlow Lite's support for secure model deployment integrates with Android's TEE (Trusted Execution Environment), providing robust protection against model extraction and tampering. ONNX models benefit from flexible runtime compatibility but require additional measures to ensure comparable model protection, making TensorFlow Lite a preferred choice for security-centric edge AI applications.

Choosing Between TensorFlow Lite and ONNX

Choosing between TensorFlow Lite and ONNX depends on the target deployment environment and model compatibility requirements. TensorFlow Lite excels in optimized performance on mobile and embedded devices with seamless integration in TensorFlow ecosystems, while ONNX offers broader interoperability across different deep learning frameworks such as PyTorch, MXNet, and Caffe2. Developers prioritize TensorFlow Lite for TensorFlow-based models demanding low-latency inference on edge devices and select ONNX when cross-platform model exportability and flexibility are essential.

Model quantization

TensorFlow Lite offers advanced post-training quantization techniques including full integer quantization for efficient edge deployment, while ONNX supports dynamic and static quantization through its quantization toolkit, enabling cross-framework model optimization with reduced size and latency.

Edge inference

TensorFlow Lite and ONNX both enable efficient edge inference, with TensorFlow Lite offering seamless integration for Android devices and Google hardware, while ONNX provides broad interoperability across diverse frameworks and hardware accelerators.

Interoperability

TensorFlow Lite offers optimized mobile and embedded device deployment with strong TensorFlow ecosystem integration, while ONNX provides broad interoperability across diverse AI frameworks like PyTorch, enabling seamless model exchange and deployment flexibility.

Model conversion

TensorFlow Lite supports direct model conversion from TensorFlow models using the TFLite Converter, while ONNX enables interoperability by converting models from multiple frameworks including PyTorch and TensorFlow through ONNX Runtime.

Hardware acceleration

TensorFlow Lite supports hardware acceleration through delegates like the GPU, NNAPI, and Hexagon DSP on various devices, while ONNX utilizes backend-specific runtimes such as ONNX Runtime with DirectML and CUDA for efficient hardware acceleration across diverse platforms.

Operator support

TensorFlow Lite supports a broad range of operators optimized for mobile and edge devices, while ONNX offers extensive cross-platform operator compatibility with a robust, continuously updated operator set ideal for diverse hardware and frameworks.

Cross-platform deployment

TensorFlow Lite and ONNX both enable cross-platform deployment, with TensorFlow Lite optimized for mobile and embedded devices, while ONNX offers broader framework interoperability across various platforms and hardware.

Runtime efficiency

TensorFlow Lite achieves superior runtime efficiency for mobile and embedded devices through optimized kernels and hardware acceleration, while ONNX Runtime excels in cross-platform compatibility and supports diverse hardware backends for versatile deployment.

Serialization

TensorFlow Lite uses FlatBuffers for efficient serialization and fast loading of machine learning models, while ONNX employs a protobuf-based format that prioritizes interoperability across different frameworks.

Framework compatibility

TensorFlow Lite primarily supports TensorFlow models optimized for mobile and edge devices, while ONNX offers broader framework compatibility by enabling interoperability between various deep learning frameworks like PyTorch, TensorFlow, and MXNet.

TensorFlow Lite vs ONNX Infographic

njnir.com

njnir.com